Enhancement of Jaibot: Developing Safety and Monitoring Features for Jaibot Using IoT Technologies

Published at : 31 Oct 2023

Volume : IJtech

Vol 14, No 6 (2023)

DOI : https://doi.org/10.14716/ijtech.v14i6.6627

Chan, J.H., Lau, C.Y., 2023. Enhancement of Jaibot: Developing Safety and Monitoring Features for Jaibot Using IoT Technologies. International Journal of Technology. Volume 14(6), pp. 1309-1319

| Jing Hung Chan | School of Engineering, Asia Pacific University of Technology and Innovation, 57000 Kuala Lumpur, Malaysia |

| Chee Yong Lau | School of Engineering, Asia Pacific University of Technology and Innovation, 57000 Kuala Lumpur, Malaysia |

The Hilti Jaibot, a state-of-the-art construction

site drilling robot, has demonstrated remarkable productivity gains while also

underscoring the need for improved safety and monitoring capabilities. This

study aims to address this need by harnessing Internet of Things (IoT)

technologies and predictive maintenance methodologies. The proposed

enhancements encompass a comprehensive sensor and camera integration to monitor

the robot's environment, coupled with the development of a Long Short-Term

Memory (LSTM) predictive maintenance algorithm to preemptively identify

operational issues. These improvements enable the Jaibot to autonomously detect

and mitigate risks, such as obstacles and human activity, while providing

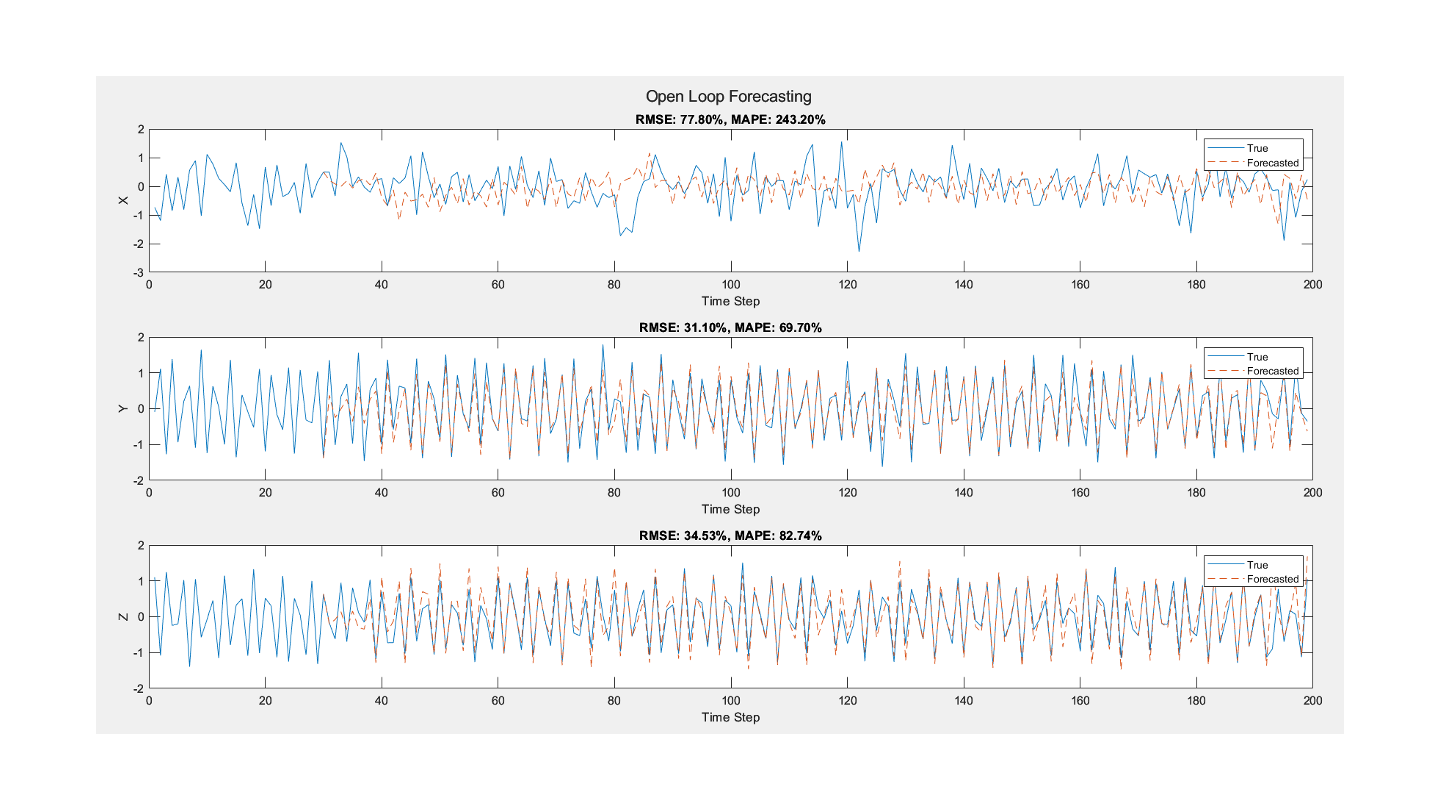

real-time safety alerts to operators. Incorporating quantitative results from

our predictive model, which successfully predicts three output variables (X, Y,

and Z) using three input variables, we observed varying RMSE and MAPE values.

Specifically, X exhibited an RMSE of 77.80% and a MAPE of 242.20%, while Y showed

an RMSE of 31.10% and a MAPE of 69.70%, and Z had an RMSE of 34.53% and a MAPE

of 82.74%. Notably, Y and Z data displayed high MAPE values, potentially

attributed to data inconsistency. To enhance accuracy in our predictive model,

we propose the utilization of more complex models and increased data volumes,

which may mitigate the observed inconsistencies and lead to improved overall

model performance. These findings from our quantitative analysis provide

valuable insights for the integration of predictive maintenance algorithms into

the Hilti Jaibot and lay the foundation for future advancements in robotic

construction, emphasizing the pivotal role of IoT technology and predictive

maintenance in shaping the industry's trajectory.

AWS; Digital Twin; Hilti Jaibot; IoT; LSTM Predictive Model

As the fourth industrial revolution, Industry 4.0 is progressing rapidly, and digital transformation and automation have gradually become commonplace. Digitization, automation, and integration enhance productivity and improve the design and quality of construction (Rabbani and Foo, 2022; Chong et al., 2022; Yet, Lau, and Thang, 2022; PwC, 2016). However, the construction industry has been slow to adopt emerging technologies and automation compared with other industries such as manufacturing and automotive. Ma, Mao, and Liu (2022) found that the complexity and decentralization of construction activities have left the construction industry lagging behind more streamlined industries. In addition, the construction industry is experiencing a shortage of skilled engineers, consultants, and supervisors, which causes project delays and, eventually, lower productivity and higher costs on construction sites (Jonny, Kriswanto, and Toshio, 2021).

According to Hilti, the link between digitalization and the job site has been established by bringing digital solutions on-site and solving installation challenges with the Hilti Jaibot, a semi-autonomous drilling robot for overhead installation guided by a Building Information Model (BIM). The solution has helped contractors adapt to the changing phases of modern construction and has brought value in terms of performance, innovation, and health and safety. Research reports that the Hilti Jaibot improved overall drilling accuracy by 50% and reduced the schedule by 20% (Brosque and Fischer, 2022). This marks a significant step for the construction industry in addressing major challenges, including a shortage of skilled labor, stagnant productivity, and health and safety issues.

While the Hilti Jaibot is currently a cutting-edge construction robot, it is important to acknowledge that emerging technologies could surpass it if new innovations are not integrated. Meeting the growing global demand for digital solutions with enhanced functionality and accessibility is essential to avoid obsolescence. IoT-enabled technologies such as edge computing, big data, and digital twins can boost productivity by enhancing the existing product. By leveraging IoT, we can optimize data use and integrate machine learning and machine vision to extend the Jaibot's capabilities.

2. Related Works

The semi-autonomous Hilti Jaibot has addressed safety concerns and stagnant labor productivity in construction activities (Lee et al., 2022). However, the Jaibot itself has not been integrated with IoT to support overhead installation on site. Without IoT support, data cannot be transferred over a network without human-to-human or human-to-computer interaction. As a result, real-time information cannot be ingested into the cloud for further processing, such as progress monitoring, computation of follow-up actions, and predictive maintenance (Thea, Lau, and Lai, 2022; Shen, Lukose, and Young, 2021). Consequently, supervisors and project managers may find it difficult to manage and monitor the progress and performance of the Jaibot on the construction site. Research shows that inefficient and inaccurate progress monitoring can lead to time and cost overruns in construction projects (Omar, Mahdjoubi, and Kheder, 2018). In the long run, the accumulated loss of time and money caused by a lack of IoT implementation may become a serious issue and, in the worst case, lead to the shutdown of a project.

In addition, the influence of COVID-19 on construction activities has led to remote working to limit the spread of disease. According to Chin et al. (2022) and Fabiani et al. (2021), remote work is the most effective policy for meeting the well-known need for worker distancing. This makes monitoring the Jaibot even harder than before the pandemic, when workers could access robots and equipment physically. The lack of remote monitoring has reduced the productivity of construction activities under on-site social distancing policies.

Furthermore, because the Jaibot is semi-autonomous, an operator is required to move it from one point to another, with no assistance from sensors. There is therefore a possibility that the Jaibot will collide with someone and cause minor injuries while moving on site. From 2009 to 2019, around 400 robot-related accidents were reported in Korea (Lee, Shin, and Lim, 2021). Villani et al. (2018) also write that “Safety issues are the primary main challenge that must be tackled by any approach implementing collaboration between humans and robots.” Worker safety is directly linked to site productivity, since health and safety issues affect the workforce. Consequently, prioritizing robot safety on construction sites is crucial to prevent incidents that could put project progress at risk.

3. Research Problem

The shortcoming of the Jaibot is that, owing to the lack of IoT, its connection to the cloud is negligible. This results in data loss and prevents real-time information from being processed for use cases such as data analytics, data optimization, and data monitoring that could improve the efficiency and productivity of the robot. Implementing and integrating IoT into the Jaibot would make the robot more intelligent and better performing, in line with one of Hilti's long-term IoT visions, “Smartization”, that is, making the robot smarter. According to Carvalho et al. (2019), progress monitoring is essential in construction management to reduce rework and errors, which shows the need for a progress monitoring feature on the Jaibot itself. Time and cost overruns on site can be addressed by collecting, analyzing, and optimizing real-time data from the Jaibot. Additionally, this data enables predictive maintenance and remote monitoring, which improve the robot's productivity and efficiency.

Besides that, the unique, dynamic, and complex nature of construction projects is likely to increase worker exposure to hazardous workplaces (Paneru and Jeelani, 2021), and adding a robot with a high-force robotic arm on site increases the health and safety risks further. To address this, robotic automation systems frequently use physical or sensor-based barriers to prevent dangerous situations when humans and robots interact. Paneru and Jeelani (2021) also present machine vision as a potential approach to improving health and safety monitoring practices. Machine vision can be applied to obstacle detection, giving the robot the ability to detect obstacles or humans around it. Because the robot can then determine what is present around it, health and safety issues during on-site interaction with the robot can be greatly reduced.

For the software configuration, Amazon Web Services (AWS) is used for integration, as it provides services ranging from cloud servers to IoT platform services. AWS IoT Greengrass provides edge computing on the Raspberry Pi, so the Raspberry Pi can communicate with the cloud while retaining the benefit of local processing when offline. The sensor data is uploaded to AWS IoT Core and to an S3 bucket using different protocols, corresponding to the two uploading methods. AWS Lambda functions then route the data to AWS IoT SiteWise and other selected cloud services. IoT SiteWise collects, organizes, and analyzes the data from the Raspberry Pi. Furthermore, AWS IoT TwinMaker is integrated into the system, and the digital twin is developed by importing 3D models of the robot and the construction site. With the integration of IoT Greengrass, IoT Core, IoT SiteWise, and IoT TwinMaker, the digital twin of the Raspberry Pi (mimicking the Jaibot) can be displayed in a Grafana dashboard, along with internal data such as the video stream, detected distance, robot status, and other time-based data. Machine learning provides the predictive maintenance feature by forecasting the time-based data for the robot's use cases.

Figure 1 System Block Diagram
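The routing step in which AWS Lambda forwards IoT Core messages to AWS IoT SiteWise can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's code: the message fields (`distance_cm`, `cpu_temp_c`, `timestamp`) and the property aliases are hypothetical, and the function only builds entries in the shape accepted by SiteWise's BatchPutAssetPropertyValue operation. The boto3 call is left as a comment so the sketch runs without AWS credentials.

```python
import json
import time
import uuid
from typing import Any, Dict, List

# Hypothetical mapping from message keys to SiteWise property aliases.
PROPERTY_ALIASES = {
    "distance_cm": "/jaibot/ultrasonic/distance",
    "cpu_temp_c": "/jaibot/raspberrypi/cpu_temperature",
}


def to_sitewise_entries(message: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Convert one JSON telemetry message into BatchPutAssetPropertyValue entries."""
    ts = float(message.get("timestamp", time.time()))
    seconds = int(ts)
    nanos = int((ts - seconds) * 1e9)  # truncate so the offset stays below 1e9
    entries = []
    for key, alias in PROPERTY_ALIASES.items():
        if key not in message:
            continue  # this message does not carry that property
        entries.append({
            "entryId": uuid.uuid4().hex,  # unique per entry within the batch
            "propertyAlias": alias,
            "propertyValues": [{
                "value": {"doubleValue": float(message[key])},
                "timestamp": {"timeInSeconds": seconds, "offsetInNanos": nanos},
                "quality": "GOOD",
            }],
        })
    return entries


def lambda_handler(event, context):
    """Entry point when an IoT rule passes the MQTT message to Lambda as `event`."""
    entries = to_sitewise_entries(event)
    # A deployed function would forward the entries, for example with
    # boto3.client("iotsitewise").batch_put_asset_property_value(entries=entries)
    return {"statusCode": 200, "body": json.dumps({"entries": len(entries)})}
```

Keeping the conversion in a pure function separates the message format from the AWS call, so the mapping can be checked locally before deployment.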

There are three major systems in the overall project:

· Remote data collection system
· Predictive maintenance system
· Obstacle detection and avoidance system

Digital twin technology creates virtual models of physical objects, such as our robot. Unlike conventional simulations, digital twins are interactive environments updated in real time: sensors on the physical object gather data and transmit it to the digital twin, enabling real-time optimization, performance monitoring, problem identification, and solution testing without real-world risks.
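The sensor-to-twin data flow described above can be sketched as an in-memory mirror that stores the latest value of each property, keeps a time series for dashboards, and lets a what-if state be explored without touching the live values. The class and property names are hypothetical; in this project that role is played by AWS IoT TwinMaker and IoT SiteWise rather than custom code.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class JaibotTwin:
    """In-memory mirror of the robot's latest reported state (hypothetical schema)."""
    state: Dict[str, float] = field(default_factory=dict)
    history: List[Tuple[float, str, float]] = field(default_factory=list)

    def ingest(self, timestamp: float, readings: Dict[str, float]) -> None:
        """Apply one sensor message: update the latest values and extend the time series."""
        for name, value in readings.items():
            self.state[name] = value
            self.history.append((timestamp, name, value))

    def series(self, name: str) -> List[Tuple[float, float]]:
        """Return (timestamp, value) pairs for one property, e.g. for a dashboard panel."""
        return [(t, v) for t, n, v in self.history if n == name]

    def what_if(self, overrides: Dict[str, float]) -> Dict[str, float]:
        """Return a hypothetical state with overrides applied; the live state is unchanged."""
        return {**self.state, **overrides}
```

The `what_if` copy reflects the idea of testing solutions on the twin without real-world risk.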

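In predictive maintenance, we use LSTM networks to extract features from big data comprising multi-sensor parameters. Deep learning on such high-dimensional information aids Remaining Useful Life (RUL) prediction. The LSTM model is given by Equations (1) to (6):

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right) \tag{1}$$

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right) \tag{2}$$

$$\tilde{C}_t = \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right) \tag{3}$$

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \tag{4}$$

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right) \tag{5}$$

$$h_t = o_t \odot \tanh(C_t) \tag{6}$$

where $x_t$ is the input at time step $t$; $h_{t-1}$ and $h_t$ are the previous and current hidden states; $C_{t-1}$ and $C_t$ are the previous and current cell states; $\tilde{C}_t$ is the candidate cell state; $f_t$, $i_t$, and $o_t$ are the forget, input, and output gates; $W$ and $b$ are the weight matrix and bias vector of each gate; $\sigma$ is the sigmoid function; and $\odot$ denotes element-wise multiplication.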
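The standard LSTM gate equations (forget, input, candidate, cell update, output, hidden state) can be made concrete with a single-unit cell in plain Python. This is an illustrative sketch, not the MATLAB model trained in the project: it uses scalar inputs and one hidden unit, and each gate's parameters are a hypothetical (weight on h_{t-1}, weight on x_t, bias) triple, so W·[h_{t-1}, x_t] + b reduces to two multiplications and an addition.

```python
import math
from typing import Dict, Sequence, Tuple

# Hypothetical parameters per gate: (weight on h_{t-1}, weight on x_t, bias).
Gate = Tuple[float, float, float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t: float, h_prev: float, c_prev: float,
              params: Dict[str, Gate]) -> Tuple[float, float]:
    """Advance a single-unit LSTM cell by one time step and return (h_t, c_t)."""
    def pre_activation(gate: str) -> float:
        w_h, w_x, b = params[gate]
        return w_h * h_prev + w_x * x_t + b

    f_t = sigmoid(pre_activation("f"))        # forget gate
    i_t = sigmoid(pre_activation("i"))        # input gate
    c_tilde = math.tanh(pre_activation("c"))  # candidate cell state
    c_t = f_t * c_prev + i_t * c_tilde        # updated cell state
    o_t = sigmoid(pre_activation("o"))        # output gate
    h_t = o_t * math.tanh(c_t)                # updated hidden state
    return h_t, c_t


def run_sequence(xs: Sequence[float], params: Dict[str, Gate]) -> Tuple[float, float]:
    """Feed a whole sequence through the cell, starting from zero states."""
    h, c = 0.0, 0.0
    for x in xs:
        h, c = lstm_step(x, h, c, params)
    return h, c
```

With all parameters set to zero, every gate outputs 0.5 and the candidate is 0, so the cell simply halves its previous cell state, which is a quick way to check the wiring.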

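In theory, the data is normalized before training to reduce noise and prediction error. After training, the mapped health indicator (HI) supports evaluation of the health status. A degradation curve over the service life is then produced, and the RUL is predicted. The trained model is evaluated with indexes that include the root mean square error (RMSE) and the mean absolute percentage error (MAPE):

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{k=1}^{n}\left(y_k - \hat{y}_k\right)^2} \tag{7}$$

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{k=1}^{n}\left|\frac{y_k - \hat{y}_k}{y_k}\right| \tag{8}$$

where $y_k$ and $\hat{y}_k$ are the actual and predicted values of the $k$-th of $n$ samples.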
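The normalization step and the two named evaluation indexes can be sketched in plain Python. Min-max scaling to [0, 1] is assumed as the normalization method, since the text does not name one; RMSE and MAPE follow their usual definitions. The paper reports RMSE as a percentage, which suggests a scaled variant, whereas this sketch returns RMSE in the units of the data.

```python
import math
from typing import List, Sequence, Tuple


def min_max_normalize(values: Sequence[float]) -> Tuple[List[float], float, float]:
    """Scale values to [0, 1]; also return (min, max) so predictions can be inverted later."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero for a constant signal
    return [(v - lo) / span for v in values], lo, hi


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean square error, in the units of the data."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error in %; undefined if any actual value is zero."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)
```

Because MAPE divides by each actual value, it grows very large when actual values are close to zero; this is worth keeping in mind when reading MAPE values above 100% for signals such as vibration, which oscillate around zero.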

The ultrasonic sensor provides the obstacle detection and avoidance feature. Similar to radar, an ultrasonic sensor emits high-frequency sound waves from an emitter and receives the reflected waves with a receiver. From the time the sound waves take to travel to the obstacle and back, the distance is calculated using Equation (10):

$$D = \frac{T \times C}{2} \tag{10}$$

where D is the distance to the obstacle, T is the time required for the sound wave to be reflected back, and C is the speed of sound; the division by 2 accounts for the wave travelling to the obstacle and back.
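The distance relation for the ultrasonic sensor (half the round-trip echo time multiplied by the speed of sound) is sketched below. The temperature correction uses the common linear approximation for dry air, C ≈ 331.3 + 0.606·θ m/s with θ in °C, which gives about 343 m/s at 20 °C; humidity is ignored, and the function names are illustrative.

```python
def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s) using a linear temperature fit."""
    return 331.3 + 0.606 * temp_c


def echo_distance_cm(echo_time_s: float, temp_c: float = 20.0) -> float:
    """Distance to the obstacle from the round-trip echo time: D = T * C / 2, in cm."""
    return echo_time_s * speed_of_sound(temp_c) / 2.0 * 100.0


# An obstacle 1 m away returns an echo after about 5.8 ms at 20 °C.
print(f"{echo_distance_cm(0.0058):.1f} cm")
```

Passing the measured air temperature instead of the 20 °C default keeps the distance estimate consistent when site conditions change.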

The speed of sound, typically 343 m/s at 20°C, varies with temperature and humidity. Ultrasonic sensors enable the robot to detect obstacles, even transparent ones such as clear plastic.

AWS IoT TwinMaker creates digital twins of real-world systems to improve their operation. In this project, the Jaibot and the construction building are imported into an IoT TwinMaker scene using existing data and 3D models (see Figure 2).

Figure 2 AWS IoT TwinMaker Scene & 3D Model Jaibot

Figure 2 illustrates the 3D environment of the developed digital twin of the Jaibot and the site scene. The 3D models of the Jaibot and the environment are inserted into AWS IoT TwinMaker, where the 3D environment is generated accordingly. The 3D models, along with the lighting, can be translated, rotated, and scaled according to the use cases in the scene to represent the real Jaibot on a remote construction site.

The LSTM predictive maintenance model is trained in MATLAB (Figure 3). Four of the five vibration datasets (X, Y, Z) are used to train, test, and validate the model, which aims to monitor drill bit abnormalities over long working periods. The input data is pre-processed and split into sequences, and the model architecture is configured with editable parameters. Performance is evaluated using RMSE and MAPE values, and the predicted results are compared with the actual results. Tuning involves adjusting the hyperparameters to prevent overfitting while balancing the amount of data against the model architecture.

Figure 3 LSTM Predictive Maintenance Model
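The pre-processing and tuning steps of the MATLAB workflow can be illustrated in Python, used here only for illustration. The sketch assumes that each vibration sample is an (X, Y, Z) tuple and that the model predicts the next sample from a fixed-length window; the window length and the 80/20 chronological split are hypothetical choices, not values from the paper. The grid enumerates the data size, LSTM layer, and epoch combinations that appear in Table 4.

```python
from itertools import product
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # one (X, Y, Z) vibration reading


def make_windows(series: Sequence[Sample], window: int
                 ) -> Tuple[List[List[Sample]], List[Sample]]:
    """Pair each run of `window` consecutive samples with the sample that follows it."""
    inputs, targets = [], []
    for start in range(len(series) - window):
        inputs.append(list(series[start:start + window]))
        targets.append(series[start + window])
    return inputs, targets


def chronological_split(items: Sequence, train_frac: float = 0.8) -> Tuple[list, list]:
    """Split without shuffling so that every test item comes after every training item."""
    cut = int(len(items) * train_frac)
    return list(items[:cut]), list(items[cut:])


# Data size x LSTM layer x epochs combinations reported in Table 4 (36 runs).
GRID = list(product([100, 200, 500, 1000], [100, 200, 300], [100, 200, 300]))
```

A chronological split is used because shuffling time-series windows would let the model see samples from the test period during training.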

Testing and Evaluation

The first test compares the data uploading methods by measuring the time required to upload IoT data to the cloud. As shown in the block diagram, there are two methods of sending data to the cloud: the MQTT protocol for JSON files and the HTTPS protocol for CSV files. In this test, the upload time for each method is collected using response.elapsed.total_seconds() and tabulated in Table 1.
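Table 1 reports five timed attempts per method and their average. A minimal timing harness in the same spirit is sketched below, assuming the upload is wrapped in a zero-argument callable (hypothetical here). It measures the whole call with `time.perf_counter()`; by contrast, `response.elapsed` in the requests library is a `datetime.timedelta` covering the span from sending the request to finishing parsing the response headers, so the two measurements can differ slightly.

```python
import statistics
import time
from typing import Callable, List


def time_uploads(upload: Callable[[], object], attempts: int = 5) -> List[float]:
    """Call `upload` repeatedly and return each wall-clock duration in seconds."""
    durations = []
    for _ in range(attempts):
        start = time.perf_counter()
        upload()
        durations.append(time.perf_counter() - start)
    return durations


# HTTPS/CSV upload times from Table 1, in seconds.
HTTPS_CSV_TIMES = [1.2381, 1.2154, 1.6699, 1.2112, 1.1709]
print(f"average: {statistics.mean(HTTPS_CSV_TIMES):.4f} s")  # prints "average: 1.3011 s"
```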

Table 1 Efficiency Test of Different Uploading Methods

| Attempts | MQTT protocol - JSON file (s) | HTTPS protocol - CSV file (s) |
|---|---|---|
| 1 | 1 | 1.2381 |
| 2 | 1 | 1.2154 |
| 3 | 1 | 1.6699 |
| 4 | 1 | 1.2112 |
| 5 | 1 | 1.1709 |
| Average | 1 | 1.3011 |

According to the results in Table 1, the MQTT protocol takes less time to upload data to the cloud than the HTTPS protocol. However, the HTTPS protocol is more efficient overall, because the MQTT protocol can upload only a single batch of data to the cloud at a time, whereas the HTTPS protocol can send either a single batch or multiple batches. Moreover, since the robot is designed to operate in remote environments, the HTTPS protocol is the better approach for uploading data to the cloud once an internet connection becomes available.

The second test measures the upload time for CSV files of different sizes over the HTTPS protocol. Since the robot mainly operates in remote areas with unstable connections, the data will most likely be stored locally on the robot until a stable internet connection is available. Upload speed matters for the robot's digital twin, where users manage and optimize real-time data in a virtual environment. This test therefore determines how data size affects upload time.
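The store-then-upload behaviour described above can be sketched as a small local buffer that accumulates readings while the robot is offline and serializes them into a single CSV batch once a connection is available. The class and field names are hypothetical, and the HTTPS upload itself (for example to the S3 bucket) is deliberately left out.

```python
import csv
import io
from typing import Dict, List


class OfflineBuffer:
    """Hold readings locally while offline and emit them as one CSV batch when online."""

    def __init__(self, fieldnames: List[str]) -> None:
        self.fieldnames = fieldnames
        self.pending: List[Dict[str, float]] = []

    def add(self, reading: Dict[str, float]) -> None:
        """Queue one reading; nothing is sent yet."""
        self.pending.append(reading)

    def flush_csv(self) -> str:
        """Serialize every queued reading to CSV text and empty the queue."""
        out = io.StringIO()
        writer = csv.DictWriter(out, fieldnames=self.fieldnames)
        writer.writeheader()
        writer.writerows(self.pending)
        self.pending.clear()
        return out.getvalue()
```

The returned text would then be uploaded in one HTTPS request, which is how a single upload can carry many readings.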

Table 2 shows the results of uploading data over the HTTPS protocol for data sizes ranging from kilobytes to megabytes. Despite the large difference in data volume, the average upload time remained under 2 seconds in both cases. This implies that although data size affects upload time (1.2985 s versus 1.8667 s on average), the effect is small, well under one second.

Table 2 Speed Test of Different Data Sizes

| Attempts | Small-sized data (s) | Large-sized data (s) |
|---|---|---|
| 1 | 1.4540 | 2.0931 |
| 2 | 1.1757 | 1.7493 |
| 3 | 1.3733 | 1.8037 |
| 4 | 1.2669 | 1.8467 |
| 5 | 1.2225 | 1.8408 |
| Average | 1.2985 | 1.8667 |

The third test evaluates the video streaming feature, which experiences some delay due to factors such as video resolution, video format, and encoder type. In the system implementation, the video is streamed with the H.264 (Advanced Video Coding, AVC) encoder, a widely used compression standard for digital video content. The H.265 (High Efficiency Video Coding, HEVC) encoder works in the same way as H.264 but is newer and more advanced in several respects. This test therefore compares the two encoders in terms of bandwidth, streaming time delay, frame rate, and allocated storage size.

Table 3 shows the differences between the H.264 and H.265 encoders. H.265 is more efficient in bandwidth and storage, while H.264, with its slightly shorter time delay, is better suited to real-time video streaming. For this project, H.265 is preferred for remote operation with limited bandwidth and storage.

The fourth test evaluates the accuracy of the LSTM model, which is intended to help prevent system failures. The training options are fine-tuned to minimize the RMSE and MAPE values and ensure high accuracy. Table 4 shows that increasing the LSTM layer size and the number of epochs generally improves prediction performance for smaller data sizes. Performance varies among the output variables, with X being the most challenging to predict. The results indicate that the suitability of the LSTM model depends on the data and the task.

In the final test (Test 5), the reaction time of the obstacle detection and avoidance system is assessed by how quickly it stops movement once the threshold values are reached.

Table 3 Testing Between H.264 and H.265 Encoder

| Parameters | H.264 | H.265 |
|---|---|---|
| Bandwidth (kbps) | 236.14 | 120.11 |
| Time delay (s) | 9.2 | 9.8 |
| Frame rate (fps) | 14.2473 | 14.4481 |
| Allocated storage byte size (kb) | 136.07 | 90.77 |
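To express the Table 3 measurements in relative terms, the short calculation below computes how much bandwidth and storage H.265 saves compared with H.264 and how much extra delay it adds. It uses only the values in Table 3.

```python
# Table 3 measurements as (H.264, H.265) pairs.
BANDWIDTH_KBPS = (236.14, 120.11)
STORAGE_KB = (136.07, 90.77)
DELAY_S = (9.2, 9.8)


def reduction_pct(h264: float, h265: float) -> float:
    """Percentage by which the H.265 value is lower than the H.264 value."""
    return (h264 - h265) / h264 * 100.0


def increase_pct(h264: float, h265: float) -> float:
    """Percentage by which the H.265 value is higher than the H.264 value."""
    return (h265 - h264) / h264 * 100.0


print(f"bandwidth saved: {reduction_pct(*BANDWIDTH_KBPS):.1f}%")  # 49.1%
print(f"storage saved:   {reduction_pct(*STORAGE_KB):.1f}%")      # 33.3%
print(f"extra delay:     {increase_pct(*DELAY_S):.1f}%")          # 6.5%
```

Roughly halving the bandwidth for about 0.6 s of additional delay is consistent with choosing H.265 for bandwidth-limited remote sites.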

In five tests, the system achieved an average reaction time of 0.146 seconds and travelled an average of 5.22 cm after the ultrasonic sensor was triggered (Table 5). These results demonstrate the system's swift response to detected obstacles. The short stopping distance enhances safety by preventing collisions and potential damage. Overall, the system is effective in recognizing and reacting to obstacles, supporting safe robotic navigation.
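The stop decision evaluated in Test 5 can be sketched as a threshold check on each ultrasonic reading. The 30 cm threshold is a hypothetical value (the paper does not state its threshold), and the overrun helper assumes the robot keeps a constant speed until it halts, which simplifies real deceleration. Under that assumption, the Table 5 averages (5.22 cm in 0.146 s) correspond to an approach speed of roughly 35.8 cm/s.

```python
from dataclasses import dataclass

STOP_THRESHOLD_CM = 30.0  # hypothetical threshold; not taken from the paper


@dataclass
class MotionCommand:
    move: bool   # keep driving?
    alarm: bool  # raise the obstacle alarm on the dashboard?


def react(distance_cm: float, threshold_cm: float = STOP_THRESHOLD_CM) -> MotionCommand:
    """Stop and raise the alarm when an obstacle is closer than the threshold."""
    too_close = distance_cm < threshold_cm
    return MotionCommand(move=not too_close, alarm=too_close)


def overrun_cm(speed_cm_s: float, reaction_time_s: float) -> float:
    """Distance travelled between the sensor trigger and the stop, at constant speed."""
    return speed_cm_s * reaction_time_s


def implied_speed_cm_s(overrun: float, reaction_time_s: float) -> float:
    """Inverse of overrun_cm: the constant speed that would produce a given overrun."""
    return overrun / reaction_time_s


print(f"{implied_speed_cm_s(5.22, 0.146):.1f} cm/s")  # 35.8 cm/s
```

In practice the threshold would need to exceed the expected overrun plus a safety margin so that the robot halts before contact.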

Table 4 Model Accuracy Test

| Data Size | LSTM Layer | Epochs | RMSE X (%) | RMSE Y (%) | RMSE Z (%) | MAPE X (%) | MAPE Y (%) | MAPE Z (%) |
|---|---|---|---|---|---|---|---|---|
| 100 | 100 | 100 | 108.99 | 31.46 | 34.85 | 305.14 | 38.32 | 75.03 |
| 100 | 100 | 200 | 118.06 | 32.65 | 37.79 | 438.26 | 44.23 | 100.44 |
| 100 | 100 | 300 | 158.60 | 36.03 | 37.90 | 631.28 | 52.44 | 83.60 |
| 100 | 200 | 100 | 90.30 | 31.46 | 33.89 | 229.82 | 40.36 | 70.12 |
| 100 | 200 | 200 | 136.00 | 33.69 | 37.96 | 486.48 | 44.45 | 75.27 |
| 100 | 200 | 300 | 138.80 | 36.54 | 38.77 | 450.49 | 54.11 | 103.90 |
| 100 | 300 | 100 | 106.12 | 32.75 | 37.00 | 322.14 | 43.74 | 80.07 |
| 100 | 300 | 200 | 124.38 | 32.42 | 40.73 | 381.41 | 41.67 | 86.61 |
| 100 | 300 | 300 | 146.11 | 40.19 | 42.01 | 551.99 | 52.16 | 87.59 |
| 200 | 100 | 100 | 81.52 | 30.07 | 33.43 | 261.42 | 67.84 | 82.18 |
| 200 | 100 | 200 | 84.46 | 31.31 | 34.26 | 258.78 | 56.69 | 82.39 |
| 200 | 100 | 300 | 81.99 | 29.25 | 34.68 | 280.65 | 75.67 | 87.39 |
| 200 | 200 | 100 | 81.38 | 31.35 | 34.40 | 266.80 | 67.88 | 80.56 |
| 200 | 200 | 200 | 80.66 | 34.28 | 34.77 | 273.22 | 97.70 | 86.36 |
| 200 | 200 | 300 | 86.53 | 30.81 | 37.76 | 298.83 | 82.35 | 89.73 |
| 200 | 300 | 100 | 81.90 | 31.32 | 34.26 | 243.87 | 62.38 | 79.10 |
| 200 | 300 | 200 | 83.47 | 30.18 | 33.8 | 290.32 | 63.83 | 81.27 |
| 200 | 300 | 300 | 103.01 | 33.70 | 39.09 | 400.85 | 84.13 | 96.63 |
| 500 | 100 | 100 | 88.38 | 37.29 | 36.15 | 246.94 | 52.29 | 154.90 |
| 500 | 100 | 200 | 87.12 | 36.92 | 36.54 | 244.42 | 50.33 | 144.89 |
| 500 | 100 | 300 | 89.36 | 37.20 | 38.31 | 264.40 | 54.93 | 147.65 |
| 500 | 200 | 100 | 86.86 | 38.11 | 35.48 | 239.66 | 53.84 | 144.31 |
| 500 | 200 | 200 | 86.87 | 37.12 | 38.26 | 247.33 | 51.73 | 161.45 |
| 500 | 200 | 300 | 90.74 | 37.42 | 38.41 | 273.70 | 58.02 | 129.42 |
| 500 | 300 | 100 | 86.48 | 37.67 | 36.33 | 239.58 | 52.55 | 141.03 |
| 500 | 300 | 200 | 86.22 | 36.91 | 37.65 | 249.87 | 53.06 | 152.47 |
| 500 | 300 | 300 | 88.36 | 37.04 | 37.54 | 259.24 | 51.79 | 140.31 |
| 1000 | 100 | 100 | 83.22 | 34.61 | 33.74 | 426.07 | 68.09 | 172.18 |
| 1000 | 100 | 200 | 81.30 | 34.39 | 34.72 | 404.03 | 64.07 | 158.82 |
| 1000 | 100 | 300 | 81.57 | 34.30 | 33.80 | 385.22 | 57.86 | 139.80 |
| 1000 | 200 | 100 | 81.88 | 35.46 | 33.83 | 414.41 | 64.39 | 163.48 |
| 1000 | 200 | 200 | 81.72 | 35.14 | 34.54 | 405.75 | 60.09 | 145.73 |
| 1000 | 200 | 300 | 81.90 | 35.07 | 34.54 | 399.05 | 58.83 | 134.06 |
| 1000 | 300 | 100 | 82.32 | 35.40 | 34.93 | 421.13 | 62.30 | 175.77 |
| 1000 | 300 | 200 | 82.13 | 35.68 | 33.70 | 387.94 | 59.19 | 134.69 |
| 1000 | 300 | 300 | 80.42 | 35.21 | 33.04 | 369.45 | 53.18 | 129.31 |

After the data is imported, it is displayed in the dashboard shown in Figure 4. The left side of the dashboard shows the date and time, average CPU temperature, and CPU speed, while the other side displays the ultrasonic sensor values along with an obstacle alarm. The video stream from Amazon Kinesis Video Streams (KVS) and the 3D scene from IoT TwinMaker are also imported into the dashboard, so the user can view the robot's camera feed and its digital twin in a virtual environment.
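The dashboard panels listed above (date and time, CPU temperature, CPU speed, ultrasonic distance, and obstacle alarm) could be fed from one JSON record per sample. The sketch below builds such a record; the field names and the 30 cm alarm threshold are hypothetical, and how the record is published (for example over MQTT to AWS IoT Core) is outside the sketch.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def build_telemetry(distance_cm: float, cpu_temp_c: float, cpu_mhz: float,
                    threshold_cm: float = 30.0,
                    now: Optional[datetime] = None) -> str:
    """Serialize one dashboard sample as a JSON string (hypothetical field names)."""
    if now is None:
        now = datetime.now(timezone.utc)
    record = {
        "timestamp": now.isoformat(),                   # date and time panel
        "cpu_temp_c": round(cpu_temp_c, 1),             # CPU temperature panel
        "cpu_mhz": cpu_mhz,                             # CPU speed panel
        "distance_cm": round(distance_cm, 1),           # ultrasonic reading panel
        "obstacle_alarm": distance_cm < threshold_cm,   # alarm indicator
    }
    return json.dumps(record)
```

Computing the alarm flag on the robot keeps the dashboard logic simple: it only needs to display the boolean rather than re-apply the threshold.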

Table 5 Obstacle Detection & Avoidance Precision Test

| Attempts | Reaction time (s) | Travel distance after the trigger of ultrasonic sensor (cm) |
|---|---|---|
| 1 | 0.13 | 5.0 |
| 2 | 0.15 | 5.3 |
| 3 | 0.15 | 5.3 |
| 4 | 0.16 | 5.2 |
| 5 | 0.16 | 5.3 |
| Average | 0.146 | 5.22 |

The LSTM model successfully predicts three output variables (X, Y, and Z) from three input variables (Figure 5), although the RMSE and MAPE values vary. With the model configured with a data size of 500, 200 LSTM layers, and 200 epochs, the accuracy levels are: X (RMSE 77.80%, MAPE 242.20%), Y (RMSE 31.10%, MAPE 69.70%), and Z (RMSE 34.53%, MAPE 82.74%). Notably, the Y and Z data exhibit high MAPE values, possibly due to inconsistency in their data. Using more complex models with more data may mitigate this issue and improve overall model performance.

Figure 4 Grafana Dashboard Console

Figure 5 Plot of Actual and Forecast Data

In summary, this project aimed to enhance the Jaibot's capabilities through machine learning, machine vision, and IoT, with three key objectives: implementing predictive maintenance, developing obstacle detection with ultrasonic technology, and rigorously evaluating system reliability across real-world performance factors such as speed, accuracy, and durability. Beyond these achievements, the project holds broader significance: integrating IoT into the Jaibot creates opportunities in logistics, agriculture, and healthcare, and future work may involve advanced AI algorithms and renewable energy sources to expand operational capabilities. The challenges identified in this project serve as inspiration for further innovation in robotics and IoT. In conclusion, the project met its objectives and demonstrated the transformative potential of IoT in autonomous robotics, positioning the Jaibot's enhancements as a catalyst for a safer and more efficient future in robotics and beyond.

The authors would like to express their gratitude to Hilti IT Asia for supporting this research work.

Brosque, C., Fischer, M., 2022. Safety, Quality, Schedule, And Cost Impacts Of Ten Construction Robots. Construction Robotics, Volume 6(2), pp. 163–186

Carvalho, T.P., Soares, F.A., Vita, R., Francisco, R.D.P., Basto, J.P., Alcalá, S.G., 2019. A Systematic Literature Review Of Machine Learning Methods Applied To Predictive Maintenance. Computers & Industrial Engineering, Volume 137, p. 106024

Chin, C.G., Jian, T.J., Ee, L.I., Leong, P.W., 2022. IoT-Based Indoor and Outdoor Self-Quarantine System for COVID-19 Patients. International Journal of Technology, Volume 13(6), pp. 1231–1240

Chong, W.L., Lau, C.Y., Lai, N.S., Han, P.T., 2022. Automated Visualization of 2D CAD Drawings to BIM 3D Model Using Machine Learning with Building Preventive Maintenance Modelling System. Res Militaris, Volume 12(4), pp. 1214–1228

Fabiani, C., Longo, S., Pisello, A.L., Cellura, M., 2021. Sustainable Production and Consumption in Remote Working Conditions Due to COVID-19 Lockdown in Italy: an Environmental and User Acceptance Investigation. Sustainable Production and Consumption, Volume 28, pp. 1757–1771

Jonny, Kriswanto, Toshio, M., 2021. Building Implementation Model of IoT and Big Data and Its Improvement. International Journal of Technology, Volume 12(5), pp. 1000–1008

Lee, D., Lee, S., Masoud, N., Krishnan, M.S., Li, V.C., 2022. Digital Twin-Driven Deep Reinforcement Learning For Adaptive Task Allocation in Robotic Construction. Advanced Engineering Informatics, Volume 53, p. 101710

Lee, K., Shin, J., Lim, J.Y., 2021. Critical Hazard Factors in The Risk Assessments of Industrial Robots: Causal Analysis and Case Studies. Safety and Health at Work, Volume 12(4), pp. 496–504

Ma, X., Mao, C., Liu, G., 2022. Can Robots Replace Human Beings?—Assessment on The Developmental Potential of Construction Robot. Journal of Building Engineering, Volume 56, p. 104727

Omar, H., Mahdjoubi, L., Kheder, G., 2018. Towards an Automated Photogrammetry-Based Approach for Monitoring and Controlling Construction Site Activities. Computers in Industry, Volume 98, pp. 172–182

Paneru, S., Jeelani, I., 2021. Computer Vision Applications in Construction: Current State, Opportunities & Challenges. Automation in Construction, Volume 132, p. 103940

PwC, 2016. Industry 4.0: Building the Digital Enterprise. Available online at: www.pwc.com/industry40. Accessed on 16th September 2022

Rabbani, N.A., Foo, Y.-L., 2022. Home Automation to Reduce Energy Consumption. International Journal of Technology, Volume 13(6), pp. 1251–1260

Shen, L.J., Lukose, J., Young, L.C., 2021. Predictive Maintenance On An Elevator System Using Machine Learning. Journal of Applied Technology and Innovation, Volume 5(1), p. 75

Thea, S.S., Lau, C.Y., Lai, N.S., 2022. Automated IoT Based Air Quality Management System Employing Sensors and Machine Learning. Journal of Engineering Science and Technology, Volume 2022, pp. 172–184

Villani, V., Pini, F., Leali, F., Secchi, C., 2018. Survey on Human–Robot Collaboration in Industrial Settings: Safety, Intuitive Interfaces and Applications. Mechatronics, Volume 55, pp. 248–266

Yet, J.Y., Lau, C.Y., Thang, K.F., 2022. 2D Orthographic Drawings to 3D Conversion using Machine Learning. In: 2022 3rd International Conference on Smart Electronics and Communication (ICOSEC), pp. 1308–1312