Target Area Georeference Algorithm for Evaluating the Impact of High-Speed Weapon

Published at : 25 Mar 2025

Volume : IJtech

Vol 16, No 2 (2025)

DOI : https://doi.org/10.14716/ijtech.v16i2.5886

Infantono, A, Ferdiana, R & Hartanto, R 2025, ‘Target area georeference algorithm for evaluating the impact of high-speed weapon’, International Journal of Technology, vol. 16, no. 2, pp. 536-550

| Ardian Infantono | 1. Department of Electrical Engineering and Information Technology, Universitas Gadjah Mada, Grafika Street of No 2, Yogyakarta, 55281, Indonesia 2. Department of Defense Aeronautical Engineering, Ak |

| Ridi Ferdiana | Department of Electrical Engineering and Information Technology, Universitas Gadjah Mada, Grafika Street of No 2, Yogyakarta, 55281, Indonesia |

| Rudy Hartanto | Department of Electrical Engineering and Information Technology, Universitas Gadjah Mada, Grafika Street of No 2, Yogyakarta, 55281, Indonesia |

Evaluation of air-to-ground shooting exercises using a Weapon Scoring System (WSS) is very important for the military to achieve Network Centric Warfare (NCW). Effective NCW requires a WSS that can quickly and accurately evaluate all personnel shooting from several heading directions in real time. Most current monitoring systems used in fighter training aim only to assess the ability of the pilot. Additionally, these systems focus on the position of the impact point of bomb/rocket explosions in the shooting range, typically fired from a single heading direction. The development of WSS currently faces several challenges, including the suboptimal production of bomb/rocket trajectories by single-camera calibration algorithms for the target shooting area, lack of synchronization with geo-coordinates (latitude, longitude), and inability to evaluate shots from various directions. Therefore, this research aimed to develop a Target Area Georeference Algorithm (TAGA) using computer vision, specifically 12-03-06-09 elliptical calibration, to monitor and evaluate air-to-ground shooting exercises with high-speed weapons. The result successfully identified the position of the bomb/rocket explosion point, its distance from the center point, the angle of the rocket, and the accuracy of the bomb/rocket trajectory to the impact point according to geo-coordinates, based on variations in shot headings. TAGA facilitated the evaluation of the capabilities of various participants in the exercise, including the pilot's ability, the aircraft preparation team, the weapons team, the aircraft's avionics system, as well as the quality of air weapons and bombs/rockets. Finally, the algorithm contributed to accelerating the realization of NCW by providing more accurate shot analysis, which could be quickly communicated to the Commander and all training components, regardless of their location.

Bomb and rocket; Computer vision; Georeference; Trajectory; Weapon scoring system

The development of military training systems has advanced significantly from traditional methods to the current era of the Industrial Revolution 4.0. In this era, battle technology has been enhanced using various methods to achieve Network Centric Warfare (NCW) capabilities. Generally, combat training in the military is a mandatory and very important activity, with shooting exercises being the most dominant. These exercises include the use of heavy equipment such as tanks, fighter aircraft, helicopter gunships, warships, and missile launchers. The shape and type of target used during training also vary. Typically, the assessment of shooting training in the military is conducted using various methods, ranging from conventional to fully automatic. The conventional assessment comprises directly observing the impact on the shooting target. In addition, virtual environments are increasingly being used to provide training for military applications.

Similar to other sectors, the military requires more robust autonomous technology through the digital transformation of existing industries (Berawi, 2021). Zeng et al. (2021) proposed a method for generating combat system-of-systems simulation scenarios based on semantic matching. Many industrial sectors are experiencing digital transformation due to advances in information and data technology, increased use of computers, and automation with intelligent systems (Berawi, 2020). Military training in each country is considered very expensive, but it remains a budgetary priority. The high cost of military training is due to the time and risk variables involved, as each exercise requires operating defense equipment, including combat vehicles, various types of weaponry, and ammunition. Several technological systems have been created to support military training, such as that of Lábr and Hagara (2019), although most training is still conducted conventionally. In addition, the weapon-target assignment problem needs to be solved; one approach applied the PNG law and a greedy heuristic algorithm to achieve the assignment (Lee et al., 2021). Conventional training persists because the training field often lacks the necessary sensors to support advanced combat training. Such technological support aims to achieve cost efficiency and to improve the ability of soldiers quickly. Another work introduced a real-time system based on computer vision to detect various categories of firearms in public spaces such as streets and ATMs (Ramon and Barba Guaman, 2021). One study attempted to balance the trade-off by using algorithms such as YOLOv5, RCNN, and SSD (Tamboli et al., 2023). A weapon detection system based on the YOLO V5 deep learning model was also proposed to improve robustness to affine transformation, rotation, occlusion, and size variation (Khalid et al., 2023). Meanwhile, previous research also used a similar approach to detect weapon impact by using computer vision (Infantono et al., 2023). To assist military parties in selecting appropriate technology to monitor, assess, and evaluate combat and other military exercises, it is necessary to present an academic overview of technological development in military training systems. Additionally, Piedimonte and Ullo (2018) showed that budget constraints and HR reductions, along with the increasing complexity of management and control systems, have prompted large organizations to pursue new methodologies. These methodologies are intended to support these systems during the in-service phase.

The exercise of firing aircraft rockets from air to ground is conducted to improve the professionalism of the fighter pilot. The training is held in a designated area known as the Air Weapon Range (AWR).

In the context of AWR, the primary objective of the exercise is to determine the impact point of the rocket. Determining the final position coordinates of a rocket impact point on the shooting range is similar to tracking moving objects. Some motion detection techniques in video processing have been presented (Bang, Kim and Eom, 2012; Ghosh et al., 2012; Yong et al., 2011; Hoguro et al., 2010; Liebelt and Schmid, 2010). Furthermore, image processing techniques have been implemented in an assessment system called the Aerial Weapon Scoring System (AWSS). The current AWSS uses cameras (Meggitt Defense Systems, 2024; AWSS, 2024), each positioned far from the shooting range. The combat aircraft has to shoot the target from a firing position located between the two cameras, as shown in Figure 1(a). Two 3CCD cameras are installed on fixed structures, such as an observer tower and a remote tower. In addition, the system produces information about the rocket impact point, which is crucial for a fighter pilot to make decisions. The current implementation of the scoring system is based on the research of Wahyudi and Infantono (2017). The authors continued to develop the visualization system with Augmented Reality using ARoket (Infantano and Wahyudi, 2014). Other weapon scoring systems have implemented radar (Høvring, 2020) and acoustic methods (Bel, 2024). The rocket accuracy scoring result is sent in real time to the pilot for learning. When the results show any unpredicted error or mistake during the shooting, the pilot can use this information to correct the next shot. However, this process poses a problem for the pilot, because the cause of these unpredicted errors and mistakes is not visible and little can be learned from them. Finding a solution for these errors and mistakes is therefore crucial.

Therefore, this research proposes a new model of aircraft rocket image-capturing system. This model introduces new variables such as the angle and trajectory of the rocket, along with more complex infrastructure including cameras and portable observers. By knowing the angle of the rocket and its trajectory, it becomes possible for a computing system to predict the cause of shooting errors and mistakes. The problem with the 3D Mixed Reality proposed by Wahyudi and Infantono (2017) is that it cannot visualize the movement of the rocket without knowing the direction of the shots, as it lacks information on the aircraft heading for any direction other than 215 degrees. In simpler terms, the Mixed Reality visualization showed the rocket launch from an approximate point always heading towards 215 degrees. The only differences between shots are the impact coordinate data, the distance of the rocket impact from the target center point, and the angle of the shot. The training pattern, however, allows the aircraft to fire rockets from any position during maneuvering. This issue leads to an inaccurate evaluation of the exercise by Training Command personnel. In addition, the problem arises because the WSS is not designed to specifically identify the coordinates of the rocket (latitude and longitude) as it moves toward the target. This limitation could be due to the camera view calibration technique used in the target area in the research of Infantono et al. (2014). Faraji et al. (2016) introduced a fully automatic georeferencing process that does not require a Ground Control Point (GCP). However, this method cannot yet be applied to detect high-speed rockets or bombs. Even if the WSS could produce coordinate values for the position of the rocket at launch, the 3D Mixed Reality reported by Wahyudi and Infantono (2017) has not been accompanied by a method for synchronizing the earth coordinates (latitude and longitude) in real time. In the previous method, the final calculation determines the distance from the blast point to the center point in pixels. To compare these results with the Range Safety Officer (RSO) assessment, it is necessary to convert pixels to meters. The conversion is adapted to the context of the proposed method. The initial dimensions of the image acquisition in the experiment are 1280 pixels (width) and 720 pixels (height), with a resolution of 96 pixels/inch or 37.795 pixels/cm. The conversion requires comparing the dimensions of the object in the image with the actual dimensions. Meanwhile, changing the image dimensions to 640 pixels (width) and 360 pixels (height) does not alter the resolution.

One study also presented viewpoint

invariant features from single images using 3D geometry (Cao and McDonald, 2009). A proposal was made to perform a partial 3D reconstruction where the spatial layout of buildings is represented using several planes in 3D space. In related works, Zhao and Zhu (2013) proposed a 3D method to process motion class. Wang et al. (2012) concluded that while the visual system tries to minimize velocity in 3D, earlier disparity processing strongly influences the perceived direction of 3D motion. Chouireb and Sehairi (2023) presented a performance comparison of background subtraction methods across video resolutions on various technologies and boards. The tested boards were equipped with different versions of ARM multicore processors and embedded GPUs (Talarico et al., 2023).

Combat training of a

country includes defense equipment, soldiers, and supporting technology to monitor and measure its capabilities. Several advances to support military technology have been achieved, such as training simulation technology. Wei and Ying (2010) researched an automated scoring system for simulation training, presenting the basic principles and general methodology for building such a system. Talarico et al. (2023) investigated whether static marksmanship performance, speed of movement, load carriage, and biomechanical factors while shooting on the move influenced dynamic marksmanship performance. Some findings also addressed weapon detection by applying deep learning algorithms (Rehman and Fahad, 2022; Yeddula and Reddy, 2022; Bhatti et al., 2021). The importance of understanding the trajectory of a missile or rocket is shown by Nan et al. (2013), who conducted preliminary research on the optimization problem of aircraft weapon delivery trajectories. They created a robust and efficient method for aircraft trajectory optimization under complex mission environments. This method works well on the tested mission scenarios, and its main feature is that it converts trajectory optimization into parametric optimization problems, which are then solved with well-established numerical optimization techniques. Moreover, the method can be applied to other trajectory optimization cases with different tactical template formulations.

The scale of a combat exercise is also measured by the process of operating

the weapon as well as aiming it at the object, and this process is closely related to the Command and Control functions. Leboucher et al. (2013) reported on a two-step optimization method for dynamic Weapon Targeting problems. A Weapon Target Assignment (WTA) system assigns a suitable weapon to defend an area or asset from an enemy attack. Due to the uniqueness of each situation, these issues should be solved in real time and adapted to the developing air/ground situation. A multi-grade remote target-scoring system based on the integration of McWiLL and RS-485 networks has also been proposed (Xin et al., 2014). The effectiveness of military exercises is also reviewed by the success of coordination and readiness of the troops involved. In the current weapon delivery exercise, the WISS system only detects and shows the impact position of the bomb/rocket on the target. This training system ignores the trajectory and behavior of the bomb/rocket when launched from a fighter plane. This behavior is very important for a comprehensive understanding of all factors influencing the success of a combat exercise, which includes personnel, aircraft, and weapons. Technically, WISS does not track the trajectory and angle of the bomb/rocket shot as it approaches the object because of its high speed. According to the experience of pilots when firing rockets, aircraft speeds reach 400 knots (205.778 m/s) and the rocket can initially travel at 600 m/s. One of the factors affecting these conditions is the initial calibration between the camera and the target area. Proper calibration is essential for accurately representing real conditions and serves as a benchmark for analyzing and evaluating the general exercise. This evaluation includes the impact point of the bomb/rocket, the capability, quality, and characteristics of the weapon, as well as the performance of all personnel involved. However, the 3D-Mixed Reality research by Wahyudi and Infantono (2017), which builds on the 3D-View Geometry research by Infantono et al. (2014), does not use a calibration system for accurate initial data from the cloud. The previous study proposed a method of clockwise-elliptical calibration using computer vision to monitor and evaluate air-to-ground shooting exercises (Infantono et al., 2024). Further research is needed to enable the system to visualize the process and results of shots at a speed close to real time.

Detection of the trajectory and angle of the bomb/rocket shot as it approaches the target before impact is necessary. Based on this introduction, it is important to analyze the results of WSS in military exercises to identify areas for improvement and raise the quality of exercises that support combat readiness. The paper is organized as follows: Introduction, Proposed Methods, Results and Discussion, and Conclusions.

2.1. Proposed WSS positioning

The planned method of the sensor positioning in the research was modified. Subsequently, the placement of the camera sensor was adjusted to produce an interpretation of latitude and longitude coordinates based on video processing results shown in Figure 1. The camera position was not always perpendicular to rocket/bomb fire from the fighter aircraft. For example, camera A1 and A2 were positioned on portable tower A, camera B on portable tower B, and camera C1 and C2 on portable tower C. This method allowed the implementation of exercises that did not require permanent infrastructure, replacing permanent towers with portable ones. Similarly, the process monitoring system and training results were conducted in Vehicle Command Post (VCP). This setup allowed the direction of fire from fighter aircraft to be monitored from several positions.

Figure 1 Proposed position of camera sensor.

The Graphical User Interface (GUI) design of the proposed scoring system is shown in Figure 2. Command buttons were placed on the left side, and the background process was displayed on the right side, together with two small windows showing the results.

Figure 2 GUI design of proposed research

2.2. Proposed Method of Target Area Georeference Algorithm (TAGA)

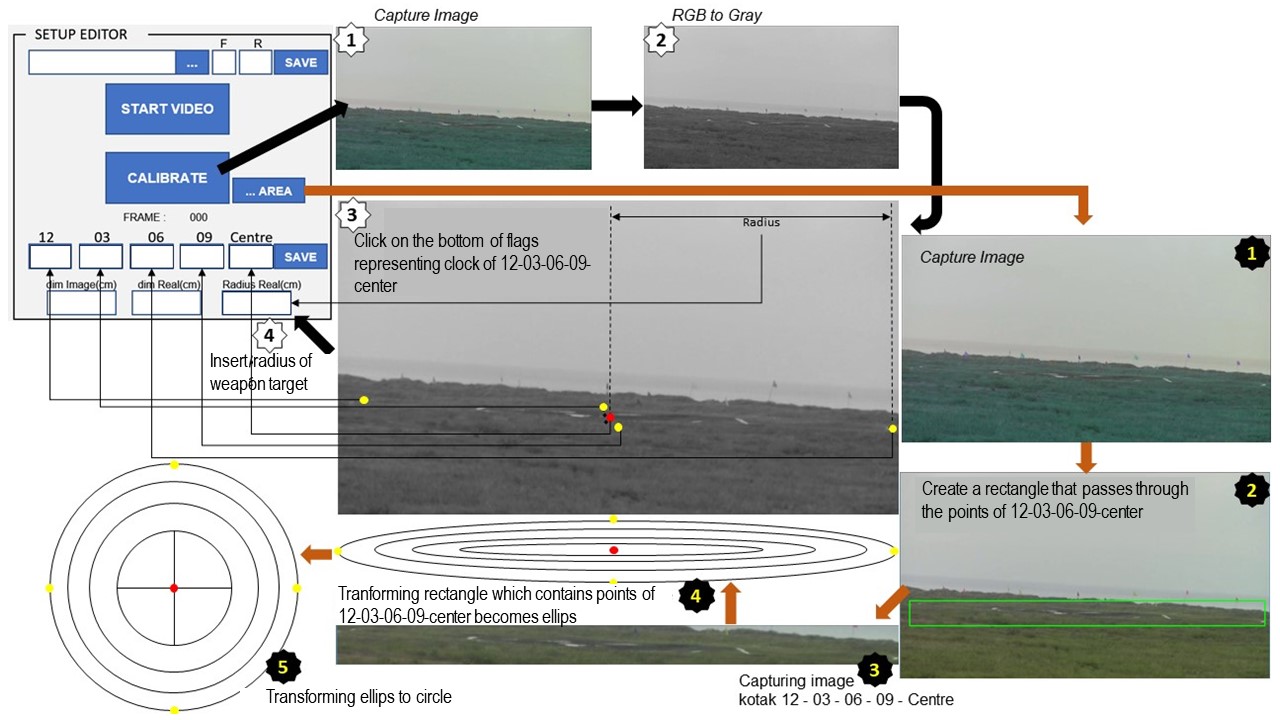

Camera calibration of the shooting target area was conducted to produce information on the launch of rocket/bomb and accurate positioning of the impact point. In addition, this calibration method aimed to produce accurate latitude and longitude coordinates of rocket/bomb when captured on camera until it hits the ground as shown in Figure 3.

Figure 3 Calibration steps of TAGA
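The final georeferencing step, turning a calibrated position on the range into latitude and longitude, can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a spherical Earth, offsets small enough for a flat-Earth approximation, and a position already expressed in meters relative to the target center in the shooter's frame (forward toward the 12 o'clock flag, right toward the 3 o'clock flag). The function name, its parameters, and the center coordinates used in the example are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation, an assumption)


def offset_to_latlon(center_lat, center_lon, right_m, forward_m, heading_deg):
    """Convert a shooter-frame offset from the target center to (lat, lon).

    right_m     : meters toward the 3 o'clock flag
    forward_m   : meters toward the 12 o'clock flag
    heading_deg : compass heading of the shot, degrees clockwise from north
    """
    h = math.radians(heading_deg)
    # Rotate the shooter frame into east/north components.
    east = right_m * math.cos(h) + forward_m * math.sin(h)
    north = -right_m * math.sin(h) + forward_m * math.cos(h)
    # Small-offset conversion from meters to degrees.
    dlat = math.degrees(north / EARTH_RADIUS_M)
    dlon = math.degrees(east / (EARTH_RADIUS_M * math.cos(math.radians(center_lat))))
    return center_lat + dlat, center_lon + dlon


if __name__ == "__main__":
    # Hypothetical target center and an impact 10 m long, 5 m right, heading 215 degrees.
    print(offset_to_latlon(-7.0, 110.0, 5.0, 10.0, 215.0))
```

Because the clock positions are defined relative to the shot heading, the same pixel-to-meter calibration can serve any heading once the rotation above is applied.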

The algorithm presented in this research was written as pseudocode in Python-style syntax to facilitate the development of real programs.

2.2.1. Start Video Button

Pressing the Start Video button loaded the video from one of two kinds of source, namely,

Video recorded from a single camera

Video from an IP (Internet Protocol) camera (real-time source).

2.2.2. CALIBRATE Button

The main steps of calibrating the 12-03-06-09-center ellipse were performed by pressing the CALIBRATE command button, which included three procedures: first, ‘RGB to Gray’; second, ‘Click to Select 5 Points Position’; and third, ‘Input the radius of the target circle’.

a. RGB to Gray

The first step converted the RGB image to grayscale to speed up computation, using the following weighted sum.

GRAY = 0.2989 * R + 0.5870 * G + 0.1140 * B
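As a minimal pure-Python sketch of this conversion, the weighted sum can be applied per pixel as follows. The weights are the ones given above (they match the common ITU-R BT.601 luma weights); the function names and the nested-list image layout are assumptions made for illustration, since a real program would normally operate on an OpenCV or NumPy array.

```python
def rgb_to_gray(r, g, b):
    """Weighted sum of the three channels, as given in the text."""
    return 0.2989 * r + 0.5870 * g + 0.1140 * b


def image_to_gray(image):
    """Convert an image given as rows of (R, G, B) tuples (0..255) to gray levels."""
    return [[int(round(rgb_to_gray(*pixel))) for pixel in row] for row in image]


if __name__ == "__main__":
    frame = [[(255, 255, 255), (0, 0, 0)], [(255, 0, 0), (0, 0, 255)]]
    print(image_to_gray(frame))
```

Working on a single gray channel reduces the data per pixel to one third, which is the computational saving the step aims for.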

b. Click to Select 5 Points Position

This step was crucial and had to be handled carefully by clicking on the points according to the placement of the flags representing the clockwise direction 12-03-06-09-center. The positions of the 5 flags were defined as seen from the heading of the fighter pilot toward the target. The clicking procedure followed a clockwise rotation, as follows.

1. Click on flag of 12 (twelve) o’clock.

2. Click on flag of 3 (three) o’clock.

3. Click on flag of 6 (six) o’clock.

4. Click on flag of 9 (nine) o’clock.

5. Click on flag of center target.
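The fixed clicking order above can be enforced in software so that each click is labeled automatically. The following is a minimal sketch under that assumption; the class name, the label strings, and the example pixel coordinates are hypothetical and not taken from the original program.

```python
CLICK_ORDER = ("12", "03", "06", "09", "center")  # clockwise, then the target center


class CalibrationClicks:
    """Collect the five calibration clicks and label them by their order."""

    def __init__(self):
        self.points = {}

    def add_click(self, x, y):
        """Store the next click and return the label it was assigned."""
        if self.is_complete():
            raise ValueError("all five calibration points are already selected")
        label = CLICK_ORDER[len(self.points)]
        self.points[label] = (int(x), int(y))
        return label

    def is_complete(self):
        return len(self.points) == len(CLICK_ORDER)


if __name__ == "__main__":
    clicks = CalibrationClicks()
    for xy in [(320, 150), (420, 180), (320, 210), (220, 180), (320, 180)]:
        print(clicks.add_click(*xy), xy)
```

In a GUI, `add_click` would be called from the mouse-click handler, and the stored coordinates would populate the 12, 03, 06, 09, and center fields.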

c. Input the radius of the target circle

The input value was given in centimeters. Since the diameter of the target area in AWR was 100 meters, the input radius was 5000 centimeters.

2.2.3. AREA Button

The next step was to perform area-specific calibration by pressing the AREA button. This included five procedures: first, ‘Capture Image’; second, ‘Select a square area that passes through the 12-03-06-09 o’clock points and the center point of aim’; third, ‘Find Area’; fourth, ‘Capture an image on box 12-03-06-09-Center’; and finally, ‘Transform the box 12-03-06-09-center into an ellipse and the ellipse into a circle’.

a. Capture Image

The Capture Image step included selecting and determining an image frame captured from a real-time video or streaming IP camera. The captured image had to contain the entire circular area designated as the target. In addition, the captured image had to contain the five flag points, namely the four clock positions at 12 o'clock, 3 o'clock, 6 o'clock, and 9 o'clock, as well as the target center point. The five positions were determined based on the pilot's view of the target when shooting, whether bombing or rocketing.

b. Select a Square area that passed through the point 12-03-06-09-center

The program selected a square box that passed through the points 12-03-06-09-center, and OpenCV read the file 0.JPG. The resize ratio and the target dimensions were then computed as follows.

r1 = 900.0 / self.cropimage.shape[1]

dim1 = (900, int(self.cropimage.shape[0] * r1))

The cropped image, a rectangle surrounding the 12-03-06-09-center flags, was resized to dim1 with interpolation. A copy of the image was kept, a named window was created and bound to the mouse callback function, and the window was moved from its original position to the screen coordinates (40, 30).
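The resize computation keeps the aspect ratio while fixing the width at 900 pixels. A minimal sketch of that arithmetic, independent of OpenCV, is shown below; the function name and its parameters are hypothetical, and the returned tuple follows the (width, height) order that OpenCV's resize function expects for its size argument.

```python
def resize_dims(height, width, target_width=900):
    """Return (new_width, new_height) that preserves the aspect ratio.

    height and width correspond to shape[0] and shape[1] of the image array.
    """
    r1 = float(target_width) / width      # scaling ratio from the width
    return (target_width, int(height * r1))


if __name__ == "__main__":
    print(resize_dims(720, 1280))   # the 1280 x 720 acquisition size from the text
    print(resize_dims(360, 640))    # the reduced 640 x 360 size
```

Both image sizes mentioned in the text share the same 16:9 aspect ratio, so they map to the same resized dimensions.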

The program kept looping until the “q” key was pressed; on each iteration it displayed the image and waited for a key press. Pressing the “r” key reset the cropping region by restoring the cloned image, and pressing the “c” key broke out of the loop. When two reference points had been recorded (the length of refPt was 2), the program cropped the region of interest (ROI) from the cloned image and displayed it.

roi = clone[refPt[0][1]:refPt[1][1], refPt[0][0]:refPt[1][0]]

When the image was being shown, the Reference Point was printed as

refPt[0][1], refPt[1][1], refPt[0][0], refPt[1][0]

The program produced the CROP.TXT file to record the Point References. After that, the program showed the print status "FILE HAS BEEN CREATED" and later showed the data retrieved from the database. Specifically, program opened CROP.TXT file and closed all open windows.
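The slicing by the two reference points can be sketched without OpenCV on a nested-list image. This is an illustration only: the function name is hypothetical, and sorting the corner coordinates is an added safeguard (so that dragging in any direction works) that the original slice does not include.

```python
def crop_roi(image, ref_pt):
    """Crop a rectangular ROI from an image stored as a list of rows.

    ref_pt holds two (x, y) corners: where the mouse button was pressed
    and where it was released.
    """
    (x0, y0), (x1, y1) = ref_pt
    top, bottom = sorted((y0, y1))    # row range
    left, right = sorted((x0, x1))    # column range
    return [row[left:right] for row in image[top:bottom]]


if __name__ == "__main__":
    # 4 x 5 test image whose pixel value encodes its position as 10 * row + col.
    image = [[10 * r + c for c in range(5)] for r in range(4)]
    print(crop_roi(image, [(1, 1), (4, 3)]))
```

Note that rows are indexed by y and columns by x, which is why the y values come first in the slice shown above.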

c. Find Area

In this phase, the program for finding the area started by declaring the integer variables PointX, PointY, pusatX, and pusatY (pusat meaning "center"). After these values were determined, the program opened the CROP.TXT file and evaluated the following ellipse equation.

eq = ((x-pusatX)*(x-pusatX)/(newA*newA)) + ((y-pusatY)*(y-pusatY)/(newB*newB))
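The expression above is the standard form of an axis-aligned ellipse centered at (pusatX, pusatY) with semi-axes newA and newB, so a point lies inside or on the ellipse when the value is at most 1. The sketch below shows that test; the comparison with 1 and the function names are assumptions, since the text gives only the expression itself.

```python
def ellipse_value(x, y, pusatX, pusatY, newA, newB):
    """Left-hand side of the ellipse equation for the point (x, y)."""
    return ((x - pusatX) * (x - pusatX) / (newA * newA)) + \
           ((y - pusatY) * (y - pusatY) / (newB * newB))


def inside_target_area(x, y, pusatX, pusatY, newA, newB):
    """True when (x, y) lies inside or on the calibrated ellipse."""
    return ellipse_value(x, y, pusatX, pusatY, newA, newB) <= 1.0


if __name__ == "__main__":
    # Hypothetical ellipse: center (320, 180), semi-axes 100 px and 30 px.
    print(inside_target_area(370, 190, 320, 180, 100, 30))
    print(inside_target_area(430, 180, 320, 180, 100, 30))
```

Such a test can be used to discard detections that fall outside the calibrated target area.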

d. Capturing image on box 12-03-06-09-Center

The next step included capturing the image on the box, including the inner area of 12 o’clock, 03 o’clock, 06 o’clock, and 09 o’clock, as well as the center of the target, through clicking and cropping procedures. The step started by declaring the variables X and Y and the flags, using the float and int data types, and by obtaining references to the global variables.

When the left mouse button was pressed, the starting coordinates (x, y) were recorded, indicating that cropping was in progress. The program then checked for the release of the left mouse button, at which point the ending coordinates (x, y) were recorded and the cropping operation was finished. Next, a rectangle was drawn around the ROI using an OpenCV command, with the border color set to (0, 255, 0), and the last step displayed the cropped image.

e. Transform the box 12-03-06-09-center into ellipse and ellipse into circle

The process of converting pixels to meters accommodated the various conditions previously described, especially in this experiment. The formula for converting pixels to meters was used (Infantono et al., 2014) as written in Equation (1).

(1)

The notation d(m) represented the actual distance in meters, while d(px) signified the distance on the image in pixels. The terms resolution and scale were explained in the previous paragraph. Rocket fire moved from the right of the image to the left, showing that the rocket was heading towards 12.00 and that the source of the shot was in line with the X-axis. When the coordinates of the rocket explosion point in the image were detected below the X-axis, this indicated that the point lay between 6.00 and 12.00. Conversely, when the coordinates were above the X-axis, the point lay between 12.00 and 6.00. Furthermore, positioning the camera on the ground required a scaling system so that the coordinates of the real shooting range matched the representation of the captured image coordinates on the computer screen. This method initialized the view of the camera when observing the shooting range.

Figure 4(a) showed the scaling of the camera perspective and point of view to the shooting range, while Figure 4(b) showed the projection concept of the shooting range using a digital video camera. The 3D-view geometry method used the aspect ratio, as determined by Equation (2).

(2)

After AD and CD were obtained (based on the field conditions such as camera, shooting range radius, etc), the angle was calculated by the following equation (3). Due to the angle

, being divided into two new angles

was calculated using following equation (3) where

=

.

(3)

(4)

Here, C = position of camera, H’C = perspective line of camera, H’A = portion exposed below the X-axis, H’B = portion exposed above the X-axis, and AB = diameter of the shooting range. AD was the altitude of the camera position above the ground, and CD was the horizontal distance from the camera to point A outside the shooting range area. AB was then projected so that the angle was divided into two equal angles and A’B equaled AB. Angle

was later calculated by the following equation (5), (6), and (7), where

(5)

(6)

(7)

The distance AH was obtained by AB = AH + BH. Consequently, the comparison of the shooting range perspective before and after projection was shown in equations (8) and (9) for the coordinates above and below X-axis, respectively.

(8)

(9)


Figure 4 (a) Scaling camera perspective of view to the shooting range and (b) Projection concept of shooting range from observing camera

The 3D-view geometry computation was activated based on the previous step. Equation (10) described the transformation that rotates the view about the Z-axis in the proposed 3D-view geometry technique. The process aimed to create the same perception between the camera perspective and the computer screen. As a result, the shooting range could be viewed on the computer screen once it had been initialized as previously described.

(10)

Implementation of 3D-view geometry method in the computer system was shown in Figure 5a. This Figure showed the perspective of the camera from the sensor tower. Furthermore, Figure 5b showed the perspective of the camera from center-top of the shooting range. The same perception between observing camera and computer screen was later achieved. This process enabled visualization of the shooting range on the computer screen when it was earlier initialized as described.


Figure 5 Transforming process from (a) ellipse to (b) circle.
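One simple way to picture the ellipse-to-circle step is an affine mapping built from three of the five clicked points: the center, the 3 o'clock flag, and the 12 o'clock flag. The sketch below is a swapped-in approximation, not the 3D-view geometry equations of this paper: it ignores perspective foreshortening across the range, and the function name, parameters, and example pixel coordinates are hypothetical. The default radius of 50 m follows the 100 m target diameter stated earlier.

```python
def pixel_to_range_meters(p, center, flag_03, flag_12, radius_m=50.0):
    """Map an image point to (right_m, forward_m) relative to the target center.

    The vectors center->flag_03 and center->flag_12 are treated as the images
    of two perpendicular radii of the circular target (affine assumption).
    """
    ax, ay = flag_03[0] - center[0], flag_03[1] - center[1]
    bx, by = flag_12[0] - center[0], flag_12[1] - center[1]
    det = ax * by - ay * bx
    if det == 0:
        raise ValueError("flag positions are collinear with the center")
    dx, dy = p[0] - center[0], p[1] - center[1]
    # Solve dx, dy = u * (ax, ay) + v * (bx, by) with Cramer's rule.
    u = (dx * by - dy * bx) / det
    v = (ax * dy - ay * dx) / det
    return (u * radius_m, v * radius_m)


if __name__ == "__main__":
    center, flag_03, flag_12 = (320, 180), (420, 180), (320, 150)
    print(pixel_to_range_meters((370, 165), center, flag_03, flag_12))
```

After this mapping, the elliptical outline of the target in the image becomes a circle of radius 50 m, and distances from the center can be read directly in meters.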

The reference for position and distance was based on the stakes installed at the 12 o'clock, 3 o'clock, 6 o'clock, and 9 o'clock positions and at the center point of the target, oriented according to the heading of the shot.

The five points were marked with a 3-meter high stake with different colors to make it easier to determine the coordinates of the selected pixels on the monitor screen. The four points of the clock position had the same distance from the center of the target and were expressed as radii.

The target area was divided into 10 circular areas according to the known radius values. To obtain the accuracy of the impact point position, the ellipse was divided into four quadrants by iterating trigonometric calculations from the position of the clock coordinates in each quadrant, namely 12-Center-03, 03-Center-06, 06-Center-09, and 09-Center-12.
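The division into circular areas and quadrants can be illustrated with a short sketch that scores an impact given in meters relative to the target center. It assumes ten rings of equal width (5 m each for a 50 m radius) and a clockwise angle measured from the 12 o'clock direction; the equal-width assumption, the function name, and the return format are not taken from the original program.

```python
import math

QUADRANTS = ("12-Center-03", "03-Center-06", "06-Center-09", "09-Center-12")


def score_impact(right_m, forward_m, radius_m=50.0, rings=10):
    """Return (distance_m, clock_angle_deg, ring, quadrant) for an impact point.

    right_m is the offset toward 3 o'clock, forward_m the offset toward 12 o'clock.
    ring is 1 for the innermost area and None when the impact is outside the target.
    """
    distance = math.hypot(right_m, forward_m)
    # Clockwise angle from 12 o'clock, in the range [0, 360).
    angle = math.degrees(math.atan2(right_m, forward_m)) % 360.0
    if distance > radius_m:
        ring = None
    else:
        ring = min(rings, int(distance // (radius_m / rings)) + 1)
    quadrant = QUADRANTS[int(angle // 90) % 4]
    return distance, angle, ring, quadrant


if __name__ == "__main__":
    print(score_impact(3.0, 3.0))
    print(score_impact(-10.0, -10.0))
```

The distance and clock angle correspond to the two quantities a scoring officer typically reports: how far the impact is from the center and in which clock direction.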

3.1. Results of Implementing TAGA Method

Implementation of the proposed technique used the following experimental setup. The experiments performed in this research used Python and XAMPP. The stages in delivering the results were Opening the Application and Executing Steps.

The WSS could be executed with two kinds of video source: a local video file from the computer or a live stream from an IP camera. After the video source was selected from the PC, SAVE was clicked to store the selected video in the database, ready for the next steps, which began by clicking the START VIDEO button.

3.1.1. Start calibration by converting RGB to Gray

Calibration started by converting RGB to Gray, and the result is shown in Figure 6a. The next step was to select the points at the positions 12-03-06-09-center in clockwise order, according to the real markers on the target area (the bottom of the flags). The image coordinates were displayed automatically in the fields 12, 03, 06, 09, and center (Figure 6b). After that, the Save button was clicked and the data were saved in the database (Figure 6c).


Figure 6 (a) Gray-level image from the video with point selection in the target area, (b) description of the marker positions of TAGA, and (c) saving the selected coordinates

3.1.2. Selecting the boundary of the target area as an Ellipse

After completing the previous step, the AREA button was clicked to select the boundary of the target area as an ellipse, as shown in Figure 7a. The ‘C’ key was pressed, and the selected image appeared. The ‘C’ key was pressed a second time to return to the main software GUI (Figure 7b).

Figure 7 (a) Results of selecting the boundary of the target area and (b) Image result of the selected area as Region of Interest

3.1.3. Click the Clear In Hot button

After the pilot received clearance from the Range Safety Officer (RSO) to fire, the Clear In Hot button was clicked to start the main program, which detected bomb/rocket shots and their impact on the ground. After the button was pressed, the background process appeared in black in the main panel, and image processing, namely background subtraction, was performed. A few seconds after the RSO gave the Clear In Hot command, the pilot launched a rocket or dropped a bomb from the fighter plane. At this stage, the software waited for a rocket or bomb to hit the target area.
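The idea behind background subtraction can be shown with a minimal frame-differencing sketch on gray-level frames stored as nested lists. The text does not state which background subtraction method the software used, so simple thresholded differencing is swapped in here for illustration; the function names, the threshold value, and the tiny test frames are all hypothetical.

```python
def changed_pixels(background, frame, threshold=30):
    """Return (x, y) positions whose gray level differs from the background
    by more than the threshold."""
    return [
        (x, y)
        for y, (bg_row, fr_row) in enumerate(zip(background, frame))
        for x, (bg, fr) in enumerate(zip(bg_row, fr_row))
        if abs(fr - bg) > threshold
    ]


def centroid(points):
    """Mean position of the changed pixels, or None when nothing changed."""
    if not points:
        return None
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


if __name__ == "__main__":
    background = [[0] * 4 for _ in range(4)]
    frame = [row[:] for row in background]
    frame[2][1] = frame[2][2] = 200          # a bright blob, e.g. an explosion flash
    print(centroid(changed_pixels(background, frame)))
```

Applying this to successive frames yields a sequence of centroids, which is one way a trajectory toward the impact point could be traced.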

3.1.4. Click the STOP & RESET button

After the Initial Impact appeared, the STOP & RESET button was clicked, and a notification appeared that video streaming had stopped. A Call Sign was selected in the COMMAND panel (such as alpha), and the STOP & ANALYSIS button was then clicked to show the detection results, which were sent to the database. The visual integration software displayed them in real time (as shown in Figure 8).

Figure 8 Presentation of impact position and trajectory sequences

To view the results and compare them with others, the SHOW RESULT button was clicked, as shown in Figure 8. Subsequently, the SHOW button was clicked to see the results in image form. The result showed the movement of the bomb/rocket towards the impact point, obtained through image processing using the TAGA georeferencing method. The complete outcome is shown in a video accessible via the URL https://qr1.be/MG5D.

3.2. Discussion

The implementation of the TAGA method showed both similarities to and differences from previous explorations (AWSS, 2024; Infantano and Wahyudi, 2014; Infantono et al., 2014). Both the TAGA method and these explorations produced information on the position of the impact point of the rocket/bomb on the target and its distance from the center of the target. However, unlike previous findings, the TAGA method successfully showed the trajectory of the rocket/bomb just before impact. Furthermore, the 3D-Mixed Reality research (Wahyudi and Infantono, 2017) built on the 3D-View Geometry exploration by Infantono et al. (2014), which did not use a calibration system for accurate initial data from the cloud. Further research was needed to enable the system to visualize the process and results of high-speed shots closer to real time. A significant advantage of the TAGA method over previous methods was the ability to automatically generate bomb and rocket trajectories after identifying the explosion point.

The success in obtaining information on the impact point of the rocket/bomb came from transforming the ellipse into a circle using five important coordinate points: the 12 o'clock, 3 o'clock, 6 o'clock, and 9 o'clock positions and the midpoint of the target. Once the target area contained the information of these five points in a 3D pattern, it was transformed into 2D. Information on the shape of the rocket/bomb trajectory was obtained through image processing using the TAGA method. A comparison between weapon scoring system studies is shown in Table 1.

Table 1 Comparison between weapon scoring systems

| No | Parameters | 3D-View Geometry 2014 (Infantono et al., 2014) | 3D-Mixed Reality 2017 (Wahyudi & Infantono, 2017) | This Research (TAGA) |
|---|---|---|---|---|
| 1 | Object | Rocket | Rocket | Bomb |
| 2 | Weapon Impact Position | Yes | Yes | Yes |
| 3 | Distance of Impact from center target | Yes | Yes | Yes |
| 4 | Bomb Trajectory | No | No | Yes |
| 5 | Rocket Trajectory | Yes | Yes | Yes |
| 6 | Type of trajectory | Almost Real Time | Prediction | Real-time |
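The five-point ellipse-to-circle transformation discussed before Table 1 can be approximated with a short sketch. It assumes an affine mapping, which ignores perspective differences between the near and far halves of the target, and is not necessarily the transformation used in TAGA. The function names, the calibration pixel values, and the 50 m target radius are hypothetical.

```python
import math


def ellipse_to_circle(point, cal, radius_m):
    """Map an image point to target-plane meters (x toward 3, y toward 12 o'clock)."""
    cx, cy = cal["center"]
    # Half-axis vectors of the imaged ellipse, averaged over opposite markers.
    ux = (cal["p03"][0] - cal["p09"][0]) / 2.0
    uy = (cal["p03"][1] - cal["p09"][1]) / 2.0
    vx = (cal["p12"][0] - cal["p06"][0]) / 2.0
    vy = (cal["p12"][1] - cal["p06"][1]) / 2.0
    det = ux * vy - vx * uy
    if det == 0:
        raise ValueError("calibration markers are collinear")
    dx, dy = point[0] - cx, point[1] - cy
    # Solve dx, dy = s * u + t * v by Cramer's rule; s and t are unit-circle coordinates.
    s = (dx * vy - vx * dy) / det
    t = (ux * dy - dx * uy) / det
    return (s * radius_m, t * radius_m)


def miss_distance(point, cal, radius_m):
    """Distance in meters from the target center to the mapped point."""
    x, y = ellipse_to_circle(point, cal, radius_m)
    return math.hypot(x, y)


# Hypothetical calibration in pixels and an assumed 50 m target radius.
cal = {"p12": (320, 100), "p03": (520, 240), "p06": (320, 380),
       "p09": (120, 240), "center": (320, 240)}
impact_xy = ellipse_to_circle((420, 240), cal, radius_m=50.0)
impact_dist = miss_distance((320, 170), cal, radius_m=50.0)
```

With this mapping the four clock markers land on the circle of the given radius, so a distance measured in the circle can be read directly in meters.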

The TAGA method also eliminated dependence on assessment tower infrastructure, because the system could be placed at various locations in a portable manner. Consequently, pilot ability improved, specifically in training for shooting from several positions, as there was no restriction to shooting from a certain heading.

Based on the preceding results and discussion, it was concluded that the TAGA method was successfully implemented. In addition, the TAGA method effectively showed the following results: (1) the coordinates of the impact point, (2) the position of the impact point in the circle representing the clock at the actual radius of the area, (3) the shape of the rocket/bomb trajectory, and (4) the angle of fire.
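Results (2) and (4) can be illustrated with a small sketch. It assumes the impact point is already expressed in target-plane meters with x toward 3 o'clock and y toward 12 o'clock, and that the angle is measured in the image plane from the last two tracked positions; the paper does not give these formulas, and the function names are hypothetical.

```python
import math


def clock_position(x_m, y_m):
    """Return (bearing in degrees clockwise from 12 o'clock, nearest clock hour)."""
    bearing = math.degrees(math.atan2(x_m, y_m)) % 360.0
    hour = round(bearing / 30.0) % 12  # each clock hour spans 30 degrees
    return bearing, (12 if hour == 0 else hour)


def approach_angle(track):
    """Angle in degrees between the last trajectory segment and the image horizontal."""
    if len(track) < 2:
        raise ValueError("need at least two trajectory points")
    (x0, y0), (x1, y1) = track[-2], track[-1]
    return math.degrees(math.atan2(abs(y1 - y0), abs(x1 - x0)))


# Hypothetical impact 10 m from center toward 3 o'clock, and a short pixel track.
bearing, hour = clock_position(10.0, 0.0)
angle = approach_angle([(100, 50), (140, 90), (180, 130)])
```

An image-plane angle of this kind depends on the camera viewpoint, so it would need the calibration data to be related to the true dive or launch angle.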

In conclusion, TAGA was introduced, which included 12-03-06-09 elliptical calibration for monitoring and evaluating air-to-ground shooting exercises using high-speed weapons in military training. The algorithm successfully determined the position of the bomb/rocket explosion point, its distance from the center point, and the angle of the rocket. Furthermore, TAGA ensured the accuracy of the shape of the bomb/rocket trajectory to the impact point according to geo-coordinates, considering variations in shot headings. It also facilitated the evaluation of the capabilities of various participants, including the pilot, the aircraft preparation and weapons teams, the avionics system, and the quality of air weapons as well as bombs/rockets. Moreover, TAGA contributed to accelerating the realization of NCW by swiftly and accurately analyzing shooting processes and results, enabling rapid communication to the Commander and all training components regardless of location. In the future, TAGA could be included in the cockpit of a fighter aircraft or attack helicopter. The algorithm could also be improved by combining it with a Mixed Reality system. Subsequent research could focus on the final program with the aim of realizing Network-Centric Warfare, which requires an all-joined evaluation system. Finally, consolidating all TAGA algorithms into a single compact package using a Mixed Reality coding system could make the process more convenient.

The authors are grateful for the support of Lecturer Dissertation Research from the Ministry of Education, Culture, Research and Technology of the Republic of Indonesia under contract number 018/E5/PG.02.00.PL/2023. The authors are also grateful for the support from the Department of Electrical Engineering and Information Technology (DTETI), Faculty of Engineering, Universitas Gadjah Mada, along with the promoter team, who provided the opportunity and guidance in preparing this manuscript.


Air Weapons Scoring Systems (AWSS) 2024, Sydor Technologies, viewed 30 May 2024, (https://sydortechnologies.com/ballistic-testing-products/scoring-systems/awss-air-weapons-scoring-systems/)

Bang, J, Kim, D & Eom, H 2012, ‘Motion object and regional detection method using block-based background difference video frames’, In: 2012 IEEE 18th International Conference on Embedded and Real-Time Computing Systems and Applications (RTCSA), pp. 350–357, https://doi.org/10.1109/RTCSA.2012.58

Bel, T 2024, ‘Weapon scoring systems – Techbel’, viewed 30 May 2024, (http://www.tech-bel.com/products-services/weapon-scoring-systems/)

Berawi, MA 2020, ‘Managing artificial intelligence technology for added value’, International Journal of Technology, vol. 11, no. 1, pp. 1-4, https://doi.org/10.14716/ijtech.v11i1.3889

Berawi, MA 2021, ‘Innovative technology for post-pandemic economic recovery’, International Journal of Technology, vol. 12, no. 1, pp. 1-4, https://doi.org/10.14716/ijtech.v12i1.4691

Bhatti, MT, Ghazi, MR, Butt, RA, Javed, MA, Hayat, F & Shahid, A 2021, ‘Weapon detection in real-time CCTV videos using deep learning’, IEEE Access, vol. 9, pp. 34366–34382, https://doi.org/10.1109/ACCESS.2021.3059170

Cao, Y & McDonald, J 2009, ‘Viewpoint invariant features from single images using 3D geometry’, In: 2009 Workshop on Applications of Computer Vision (WACV), pp. 1–6, https://doi.org/10.1109/WACV.2009.5403061

Chouireb, F & Sehairi, K 2023, ‘Implementation of motion detection methods on embedded systems: A performance comparison’, International Journal of Technology, vol. 14, no. 3, pp. 510-521, https://ijtech.eng.ui.ac.id/article/view/5950

Faraji, MR, Qi, X & Jensen, A 2016, ‘Computer vision-based orthorectification and georeferencing of aerial image sets’, Journal of Applied Remote Sensing, vol. 10, no. 3, https://doi.org/10.1117/1.JRS.10.036027

Ghosh, A, Subudhi, BN & Ghosh, S 2012, ‘Object detection from videos captured by moving camera by fuzzy edge incorporated Markov random field and local histogram matching’, IEEE Transactions on Circuits and Systems for Video Technology, vol. 22, no. 8, pp. 1127-1135, https://doi.org/10.1109/TCSVT.2012.2190476

Hoguro, M, Inoue, Y, Umezaki, T & Setta, T 2010, ‘Moving object detection using strip frame images’, Electrical Engineering in Japan, vol. 172, no. 4, pp. 38–47, https://doi.org/10.1002/eej.21095

Høvring, P 2020, ‘Weapon scoring | Nordic Radar Solutions’, viewed 30 May 2024, (https://www.nordicradarsolutions.com/weapon-scoring/)

Infantano, A & Wahyudi, AK 2014, ‘A novel system to display position of explosion, shot angle, and trajectory of the rocket firing by using markerless augmented reality: ARoket: Improving safety and quality of exercise anytime, anywhere, and real time’, In: 2014 International Conference on Electrical Engineering and Computer Science (ICEECS), pp. 197-202, https://doi.org/10.1109/ICEECS.2014.7045245

Infantono, A, Adji, TB & Nugroho, HA 2014, ‘Modeling of the 3D-view geometry based motion detection system for determining trajectory and angle of the unguided fighter aircraft-rocket’, In: WIT Transactions on Information and Communication Technologies, pp. 163–175, https://doi.org/10.2495/Intelsys130161

Infantono, A, Ferdiana, R & Hartanto, R 2023, ‘Computer vision for determining trajectory and impact position of fighter-aircraft bombing’, In: 2023 3rd International Conference on Electronic and Electrical Engineering and Intelligent System (ICE3IS), pp. 98–103, https://doi.org/10.1109/ICE3IS59323.2023.10335201

Infantono, A, Ferdiana, R & Hartanto, R 2024, ‘Area target calibration in weapon scoring system using computer vision to support Unmanned Combat Aerial Vehicle’, In: AIP Conference Proceedings, vol. 3047, p. 060004, https://doi.org/10.1063/5.0193738

Khalid, S, Waqar, A, Tahir, HUA, Edo, OC & Tenebe, IT 2023, ‘Weapon detection system for surveillance and security’, In: 2023 International Conference on IT Innovation and Knowledge Discovery (ITIKD), pp. 1-7, https://doi.org/10.1109/ITIKD56332.2023.10099733

Lábr, M & Hagara, L 2019, ‘Using open source on multiparametric measuring system of shooting’, In: 2019 International Conference on Military Technologies (ICMT), pp. 1-6, https://doi.org/10.1109/MILTECHS.2019.8870093

Leboucher, C, Talbi, E, Jourdan, L & Dhaenens, C 2013, ‘A two-step optimisation method for dynamic weapon target assignment problem’, In: Recent Advances on Meta-Heuristics and Their Application to Real Scenarios, InTech, https://doi.org/10.5772/53606

Lee, M, Shin, MK, Moon, I-C & Choi, HL 2021, ‘A target assignment and path planning framework for multi-USV defensive pursuit’, In: 2021 21st International Conference on Control, Automation and Systems (ICCAS), pp. 921–924, https://doi.org/10.23919/ICCAS52745.2021.9649928

Liebelt, J & Schmid, C 2010, ‘Multi-view object class detection with a 3D geometric model’, In: 2010 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1688–1695, https://doi.org/10.1109/CVPR.2010.5539836

Meggitt Defense Systems 2024, Weapon Scoring Systems, Parker Meggitt Defense Systems Division, viewed 30 May 2024, (https://meggittdefense.com/weapon-scoring-systems/)

Nan, W, Lincheng, S, Hongfu, L, Jing, C & Tianjiang, H 2013, ‘Robust optimization of aircraft weapon delivery trajectory using probability collectives and meta-modeling’, Chinese Journal of Aeronautics, vol. 26, no. 2, pp. 423-434, https://doi.org/10.1016/j.cja.2013.02.020

Piedimonte, P & Ullo, SL 2018, ‘Applicability of the mixed reality to maintenance and training processes of C4I systems in Italian Air Force’, In: 2018 5th IEEE International Workshop on Metrology for AeroSpace (MetroAeroSpace), pp. 559–564, https://doi.org/10.1109/MetroAeroSpace.2018.8453612

Ramon, AO & Barba Guaman, L 2021, ‘Detection of weapons using Efficient Net and Yolo v3’, In: 2021 IEEE Latin American Conference on Computational Intelligence (LA-CCI), pp. 1-6, https://doi.org/10.1109/LA-CCI48322.2021.9769779

Rehman, A & Fahad, LG 2022, ‘Real-time detection of knives and firearms using deep learning’, In: 2022 24th International Multitopic Conference (INMIC), pp. 1-6, https://doi.org/10.1109/INMIC56986.2022.9972915

Super BullsEye II, n.d, Super BullsEye II [PDF Document] fdocuments.in, viewed 20 February 2022, https://fdocuments.in/document/super-bullseye-ii.html

Talarico, MK, Morelli, F, Yang, J, Chaudhari, A & Onate, JA 2023, ‘Estimating marksmanship performance during walking while maintaining weapon aim’, Applied Ergonomics, vol. 113, p. 104096, https://doi.org/10.1016/j.apergo.2023.104096

Tamboli, S, Jagadale, K, Mandavkar, S, Katkade, N & Ruprah, TS 2023, ‘A comparative analysis of weapons detection using various deep learning techniques’, In: 2023 7th International Conference on Trends in Electronics and Informatics (ICOEI), pp. 1141-1147, https://doi.org/10.1109/ICOEI56765.2023.10125710

Wahyudi, AK & Infantono, A 2017, ‘A novel system to visualize aerial weapon scoring system (AWSS) using 3D mixed reality’, In: 2017 5th International Conference on Cyber and IT Service Management (CITSM), pp. 1-5, https://doi.org/10.1109/CITSM.2017.8089284

Wang, H, Heron, S, Moreland, J & Lages, M 2012, ‘A Bayesian approach to the aperture problem of 3D motion perception’, In: 2012 International Conference on 3D Imaging (IC3D), pp. 1-8, https://doi.org/10.1109/IC3D.2012.6615116

Wei, N & Ying, W 2010, ‘Study of automatic scoring system for simulation training’, In: 2010 3rd International Conference on Advanced Computer Theory and Engineering (ICACTE), pp. 403-406, https://doi.org/10.1109/ICACTE.2010.5578990

Xin, W, Dengchao, F, Jie, Z, & Fangfang, Y 2014, ‘Research on explosion points location of remote target-scoring systems based on McWiLL and RS-485 networks’, In: 2014 Sixth International Conference on Intelligent Human-Machine Systems and Cybernetics, pp. 360–363, https://doi.org/10.1109/IHMSC.2014.188

Yeddula, N & Reddy, BE 2022, ‘Effective deep learning technique for weapon detection in CCTV footage’, In: 2022 IEEE 2nd International Conference on Mobile Networks and Wireless Communications (ICMNWC), pp. 1-6, https://doi.org/10.1109/ICMNWC56175.2022.10031724

Yong, CY, Sudirman, R & Chew, KM 2011, ‘Motion detection and analysis with four different detectors’, In: 2011 Third International Conference on Computational Intelligence, Modelling and Simulation (CIMSiM), pp. 46–50, https://doi.org/10.1109/CIMSim.2011.18

Zeng, G, Gong, G & Li, N 2021, ‘Combat system-of-systems simulation scenario generation approach based on semantic matching’, Xi Tong Gong Cheng Yu Dian Zi Ji Shu/Systems Engineering and Electronics, vol. 43, no. 8, pp. 2154–2162, https://doi.org/10.12305/j.issn.1001-506X.2021.08.17

Zhao, Y & Zhu, S-C 2013, ‘Scene parsing by integrating function, geometry and appearance models’, In: 2013 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3119–3126, https://doi.org/10.1109/CVPR.2013.401