Malaysian Vanity License Plate Recognition Using Convolutional Neural Network

Corresponding email: lim.sin.liang@mmu.edu.my

Published at : 03 Nov 2022

Volume : IJtech

Vol 13, No 6 (2022)

DOI : https://doi.org/10.14716/ijtech.v13i6.5868

Tan, H.H., Shahid, R., Mishra, M., Lim, S.L., 2022. Malaysian Vanity License Plate Recognition Using Convolutional Neural Network. International Journal of Technology. Volume 13(6), pp. 1271-1281

| Hui Hui Tan | Faculty of Engineering, Multimedia University, Persiaran Multimedia, 63100 Cyberjaya Selangor |

| Rehan Shahid | Tapway Sdn Bhd, T3-13A-12 Pusat Dagangan Icon City, No 1B, Jalan SS8/39 SS8, 47300 Petaling Jaya, Selangor |

| Manish Mishra | Tapway Sdn Bhd, T3-13A-12 Pusat Dagangan Icon City, No 1B, Jalan SS8/39 SS8, 47300 Petaling Jaya, Selangor |

| Sin Liang Lim | Faculty of Engineering, Multimedia University, Persiaran Multimedia, 63100 Cyberjaya Selangor |

A Convolutional Neural Network (CNN) is used to train a model that recognizes the vanity license plates available in Malaysia. Transfer learning, specifically finetuning, is applied to train the model. A modified pretrained ResNet18 network architecture is used to train the Malaysian vanity license plate recognition model. Hyperparameters such as batch size, learning rate, step size, gamma, and momentum are set before training. The optimizer used in this project is SGD (Stochastic Gradient Descent). The Malaysian vanity license plate images provided by Tapway Sdn Bhd cover 3 types of plates: MALAYSIA, PUTRAJAYA, and NORMAL LP (Normal License Plate). All the images are randomly split into a training set (70% of the total images), a validation set (20% of the total images), and a testing set (10% of the total images). After that, the images are cropped, transformed into tensors, and normalized for training. The training is carried out for 70 epochs. The models trained from the original and the modified pretrained ResNet18 network architectures are compared and discussed. Both models reach an accuracy of 92% on the testing set. Both the original ResNet18 and the modified ResNet18 network architectures can therefore be used to train the Malaysian vanity license plate recognition model, with similar results.

Convolutional neural network; Malaysian vanity license plate recognition; ResNet18

A CNN network architecture is chosen for this project because its built-in convolutional layers reduce the high dimensionality of images without loss of information (Lang, 2021). Moreover, CNNs perform better in character recognition than traditional character recognition methods (Elhadi et al., 2019) such as Template Matching (Kashyap et al., 2018) and Histogram Equalization (Pangestu et al., 2017).

A transfer learning method is applied in this project to train the Malaysian vanity license plate recognition model. The type of transfer learning used is finetuning. In finetuning, a pretrained network is selected, and the whole model is retrained so that all of the model’s parameters are updated on the dataset provided (Inkawhich, 2022).

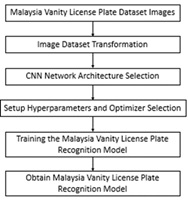

An original pretrained ResNet18 network architecture and a modified pretrained ResNet18 network architecture are discussed and used to train the Malaysian vanity license plate recognition model. The number of output classes of both models is changed to match the number of Malaysian vanity license plate types in this project, so that the models can recognize those plate types. The training and dataset setup for the model are also discussed. The design flow for this project is shown in Figure 1.

Figure 1 Design Flow

Firstly, a dataset of Malaysian vanity license plate images is obtained, and the images are transformed to make them suitable for training. Next, a CNN network architecture is selected for training the Malaysian vanity license plate recognition model. After that, the hyperparameters are configured and an optimizer is selected. The classification model is then trained, which yields the Malaysian vanity license plate recognition model.

2.1. Modified ResNet18

An original pretrained ResNet18 network architecture is selected and modified for training the Malaysian vanity license plate recognition model in this project. The ResNet18 is pretrained on the ImageNet dataset, which has 1000 categories (Resnet.Py, 2022). A nonlinear activation function and a dropout function are added to the fully connected layer of the pretrained ResNet18 network. Figure 2 shows a diagram of the modified ResNet18 network architecture. The diagram is based on the 34-layer residual network architecture diagram for ImageNet in He et al. (2016).

Figure 2 Modified ResNet18 Network Architecture

Nonlinear activation functions allow the network to learn more complex relationships in the data (Alzubaidi et al., 2021). ReLU (Rectified Linear Unit) is one such nonlinear activation function and is frequently used in CNNs. In addition, the advantage of ReLU over other activation functions is its lower computational load.

Dropout is a commonly used method for generalization (Alzubaidi et al., 2021). Neurons are randomly dropped during training. Therefore, the model is forced to learn different independent features, and the feature selection power is allocated equally across the neurons. A dropped neuron does not take part in forward-propagation or back-propagation during training. However, the full-scale network is used to carry out predictions in the testing process.
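A minimal PyTorch sketch of a classification head of this kind is given below. The paper does not state the layer order, the hidden width, or the dropout probability, so the 256-unit hidden layer, `p_drop=0.5`, and the helper name `build_modified_head` are assumptions chosen for illustration only. The input width of 512 is that of the standard ResNet18 fully connected layer.

```python
import torch
from torch import nn

NUM_CLASSES = 3  # MALAYSIA, NORMAL LP, PUTRAJAYA


def build_modified_head(in_features: int = 512, hidden: int = 256,
                        p_drop: float = 0.5) -> nn.Sequential:
    """Hypothetical replacement for ResNet18's final fully connected layer."""
    return nn.Sequential(
        nn.Linear(in_features, hidden),
        nn.ReLU(),                       # nonlinear activation
        nn.Dropout(p=p_drop),            # only active in training mode
        nn.Linear(hidden, NUM_CLASSES),  # one output per plate type
    )


head = build_modified_head()
features = torch.randn(4, 512)  # stand-in for pooled ResNet18 features

head.train()                    # dropout randomly zeroes activations
train_logits = head(features)

head.eval()                     # dropout disabled: the full network is used
with torch.no_grad():
    eval_logits_1 = head(features)
    eval_logits_2 = head(features)
```

With torchvision, such a head could be attached to a pretrained `resnet18` through `model.fc = build_modified_head(model.fc.in_features)`. For the original architecture, only the output size of `model.fc` would change.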

2.2. Optimizer and Hyperparameters

The optimizer used in this project is SGD (Stochastic Gradient Descent). SGD is a gradient descent algorithm that updates the parameters of the model on every training sample (Alzubaidi et al., 2021). For a large training dataset, this algorithm is faster and more memory-efficient than BGD (Batch Gradient Descent).
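The difference between the two update rules can be illustrated on a toy problem. The sketch below fits a single weight `w` to three made-up points on the line y = 2x; the data, the learning rate of 0.05, and the function names are illustrative assumptions and are unrelated to the license plate model.

```python
def sgd_epoch(w, samples, lr):
    """One pass of per-sample SGD on the squared error (w*x - y)**2."""
    for x, y in samples:
        grad = 2.0 * (w * x - y) * x  # gradient from this single sample
        w -= lr * grad                # parameter is updated immediately
    return w


def bgd_epoch(w, samples, lr):
    """One batch gradient descent step: average the gradient over all samples."""
    grad = sum(2.0 * (w * x - y) * x for x, y in samples) / len(samples)
    return w - lr * grad


samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # toy data, true weight is 2
w_sgd = sgd_epoch(0.0, samples, lr=0.05)  # three updates in one pass
w_bgd = bgd_epoch(0.0, samples, lr=0.05)  # one update in one pass
```

In this toy case, one pass of SGD makes three updates and ends closer to the true weight than the single BGD update, at the cost of noisier individual steps.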

Several hyperparameters are set before training the Malaysian vanity license plate recognition model, namely batch size, learning rate, step size, gamma, and momentum.

The learning rate is the step size of each parameter update (Alzubaidi et al., 2021), and it is set to 0.005. Momentum is a method that improves the training speed and accuracy by accumulating the gradients calculated at previous steps (Alzubaidi et al., 2021). The momentum is set to 0.9 for the training (Bushaev, 2017). A learning rate scheduler called lr_scheduler.StepLR is used. It decays the learning rate of each parameter group by gamma every step_size epochs. Step size is the period of learning rate decay, and it is set to 10. Gamma is the multiplicative factor of learning rate decay, and it is set to 0.7 for the training.
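The resulting schedule can be written in closed form. The sketch below reproduces the step decay in plain Python with the stated values (initial rate 0.005, step size 10, gamma 0.7) over 70 epochs; the function name `step_lr` is illustrative, and epochs are assumed to be counted from 0 with one scheduler step per epoch.

```python
def step_lr(epoch, base_lr=0.005, step_size=10, gamma=0.7):
    """Learning rate at a 0-indexed epoch under step decay."""
    return base_lr * gamma ** (epoch // step_size)


# One value per epoch for a 70-epoch run.
schedule = [step_lr(epoch) for epoch in range(70)]

# Print the rate at the start of each 10-epoch block.
for epoch in range(0, 70, 10):
    print(f"epoch {epoch:2d}: lr = {schedule[epoch]:.6f}")
```

In PyTorch, the same configuration would typically be expressed as `torch.optim.SGD(model.parameters(), lr=0.005, momentum=0.9)` together with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.7)`, calling `scheduler.step()` once per epoch.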

Batch size is the number of training samples used in one training iteration (Murphy, 2017). Training experiments were conducted with small batch sizes of 4, 8, 16, and 32 to find a suitable value. Based on these experiments, a batch size of 32 is chosen because its loss plot shows the least fluctuation in training loss over the epochs. A very small batch size is not suitable for training because it may cause fluctuations in the training loss over epochs (Hameed, 2021).

Figure 3 Loss plot obtained with: (a) Batch Size of 4; (b) Batch Size of 8; (c) Batch Size of 16; and (d) Batch Size of 32
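To make the relation between batch size and the number of parameter updates concrete, the short sketch below counts the updates per epoch for each candidate batch size. The training set size of 83 images is an assumption (roughly 70% of the 119 images), and the last, partially filled batch is assumed to be kept.

```python
import math

N_TRAIN = 83  # assumed: about 70% of the 119 images


def updates_per_epoch(n_train, batch_size):
    """Number of mini-batches (parameter updates) in one epoch."""
    return math.ceil(n_train / batch_size)


counts = {b: updates_per_epoch(N_TRAIN, b) for b in (4, 8, 16, 32)}
print(counts)
```

Under these assumptions, a batch size of 4 gives 21 updates per epoch, each computed from only 4 images, whereas a batch size of 32 gives 3 updates per epoch, each averaged over many more images.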

Table 1 Summary of optimizer and hyperparameters

| Optimizer | Learning Rate | Step Size | Gamma | Momentum | Batch Size |
|---|---|---|---|---|---|
| SGD | 0.005 | 10 | 0.7 | 0.9 | 32 |

2.3. Training

Google Colab is a web IDE for Python programming, and it has a free version that anyone can use. The free version of Google Colab is used in this project for coding and training. The PyTorch framework is used to load, modify, and train the pretrained ResNet18 network architecture. Both the original ResNet18 and the modified ResNet18 network architectures are used to train the Malaysian vanity license plate recognition model. The training for both models is carried out for 70 epochs, with the optimizer and hyperparameters configured as in Table 1.

Training is repeated 10 or more times with the same setup for both the original and the modified pretrained ResNet18 network architectures, and the best model is selected based on the results after training.

2.4. Dataset Setup

In this project, three types of Malaysian license plates are selected for training the Malaysian vanity license plate recognition model: MALAYSIA, PUTRAJAYA, and NORMAL LP (Normal License Plate). The license plate images are supplied by Tapway Sdn Bhd. The dataset contains a total of 119 images: 38 images of vanity license plate type MALAYSIA, 21 images of vanity license plate type PUTRAJAYA, and 60 images of normal license plates. Of the normal license plate images, 44 are clear and 16 are blurred.

All the images are then randomly split into a training set (70% of the total images), a validation set (20% of the total images), and a testing set (10% of the total images). The summary of the Malaysian vanity license plate image dataset distribution is shown in Table 2.

Table 2 Summary of Malaysian vanity license plate image dataset distribution
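As a rough illustration of the 70/20/10 split, the sketch below shuffles and splits image indices class by class using the class totals given above. Splitting per class, the rounding rule, and the seeds are assumptions; the text only states that the split is random. Under these assumptions, the per-class testing set sizes (4, 6, and 2) coincide with the support values in the classification report.

```python
import random

CLASS_COUNTS = {"MALAYSIA": 38, "NORMAL LP": 60, "PUTRAJAYA": 21}


def split_indices(n, seed, val_frac=0.2, test_frac=0.1):
    """Shuffle n image indices and split them into train/val/test lists."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_test = round(n * test_frac)
    n_val = round(n * val_frac)
    test = indices[:n_test]
    val = indices[n_test:n_test + n_val]
    train = indices[n_test + n_val:]  # remainder, about 70%
    return train, val, test


splits = {
    name: split_indices(n, seed=i)
    for i, (name, n) in enumerate(CLASS_COUNTS.items())
}
# (train, val, test) sizes per class
sizes = {name: tuple(len(part) for part in parts)
         for name, parts in splits.items()}
print(sizes)
```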

After the images are split into the training set, validation set, and testing set, all the images undergo transformation. Firstly, the images are center cropped to a size of 224 × 224 because the pretrained ResNet18 network architecture accepts an input size of 224 × 224. Figure 4(a) shows a batch of example images from the training dataset that have been center cropped. Next, the images are transformed into tensors. After that, the images are normalized before training, which speeds up learning and leads to faster convergence (Stöttner, 2019). Figure 4(b) shows a batch of example images from the training dataset that have been center cropped and normalized.

Figure 4 A batch of example images from the training dataset that have been: (a) Center Cropped; and (b) Center Cropped and Normalized
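The arithmetic behind these two transformations can be sketched in plain Python. The normalization statistics are not given in the text, so the ImageNet channel means and standard deviations commonly used with pretrained ResNet models are assumed here. The function names are illustrative, and the image is assumed to be at least 224 pixels in both dimensions.

```python
# Assumed values: the usual ImageNet statistics, not taken from the paper.
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def center_crop_box(width, height, size=224):
    """Return (left, top, right, bottom) of a centered size x size crop."""
    left = (width - size) // 2
    top = (height - size) // 2
    return left, top, left + size, top + size


def normalize_pixel(rgb, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Scale 0-255 values to [0, 1], then standardize each channel."""
    return tuple((c / 255.0 - m) / s for c, m, s in zip(rgb, mean, std))


box = center_crop_box(640, 480)         # crop window for a 640 x 480 image
pixel = normalize_pixel((255, 0, 128))  # one RGB pixel after normalization
```

With torchvision, the same sequence is usually composed from `transforms.CenterCrop(224)`, `transforms.ToTensor()`, and `transforms.Normalize(mean, std)`.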

After the Malaysian vanity license plate recognition model is trained for 70 epochs with both the original ResNet18 and the modified ResNet18 networks, the loss plots of both models are obtained. The testing dataset is used to obtain the confusion matrix and the classification report of each model. The loss plot, confusion matrix, and classification report are used to assess the performance of the models. The accuracy on the training dataset is 100% for both models.

3.1. Loss Plot

The loss plot for training the Malaysian vanity license plate recognition model with the original pretrained ResNet18 network architecture is shown in Figure 5(a). The blue line represents the training loss, which decreases smoothly until it reaches a point of stability. The orange line represents the validation loss, which fluctuates at the beginning of the training and then decreases until it reaches a point of stability. The gap between the two losses is small after the 20th epoch. The loss plot therefore indicates an optimal fit.

The loss plot for training the Malaysian vanity license plate recognition model with the modified pretrained ResNet18 network architecture is shown in Figure 5(b). The training loss decreases smoothly until it reaches a point of stability. The validation loss fluctuates at the beginning of the training and then decreases until it reaches a point of stability. The gap between the two losses is small after the 25th epoch. The loss plot therefore indicates an optimal fit: the training loss decreases to a point of stability, and the validation loss also decreases to a point of stability with only a tiny gap to the training loss.


Figure 5 Loss plot of the trained model using: (a) Original pretrained ResNet18 network architecture; and (b) Modified pretrained ResNet18 network architecture

Once both losses have reached a point of stability, the gap between the training loss and the validation loss is smaller for the model trained with the modified pretrained ResNet18 network architecture than for the model trained with the original pretrained ResNet18 network architecture.

3.2. Confusion Matrix

The Malaysian vanity license plate recognition model is trained on three license plate types, MALAYSIA, PUTRAJAYA, and NORMAL LP (Normal License Plate), so a 3-class confusion matrix is obtained in which each class represents one plate type. The confusion matrix of the model can be obtained by using the confusion matrix function from the sklearn library (Scikit-learn, 2022a). The 3-class confusion matrices of the models trained with both network architectures are shown in Figure 6.


Figure 6 Confusion Matrix of the trained model using: (a) Original pretrained ResNet18; and (b) Modified pretrained ResNet18

The TP, TN, FP, and FN values of all the classes for the model trained with the original pretrained ResNet18 network architecture are summarized in Table 3.

Table 3 TP, TN, FP, and FN values of all the classes of the trained model using original pretrained ResNet18 network architecture

| Malaysian Vanity License Plate | TP | TN | FP | FN |
|---|---|---|---|---|
| MALAYSIA | 3 | 8 | 0 | 1 |
| NORMAL LP | 6 | 6 | 0 | 0 |
| PUTRAJAYA | 2 | 9 | 1 | 0 |

The TP, TN, FP, and FN values of all the classes for the model trained with the modified pretrained ResNet18 network architecture are summarized in Table 4. The confusion matrices obtained with the original and the modified pretrained ResNet18 network architectures are the same, so the TP, TN, FP, and FN values of all the classes are identical for both models.

Table 4 TP, TN, FP, and FN values of all the classes of the trained model using modified pretrained ResNet18 network architecture

| Malaysian Vanity License Plate | TP | TN | FP | FN |
|---|---|---|---|---|
| MALAYSIA | 3 | 8 | 0 | 1 |
| NORMAL LP | 6 | 6 | 0 | 0 |
| PUTRAJAYA | 2 | 9 | 1 | 0 |
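The per-class values in Tables 3 and 4 follow directly from a 3-class confusion matrix. The matrix in the sketch below is not copied from Figure 6; it is inferred from the tabulated values (one MALAYSIA image predicted as PUTRAJAYA, all other images correct) and should be read as an assumption. Rows are taken as true classes and columns as predicted classes, which is the convention of sklearn's `confusion_matrix`.

```python
LABELS = ["MALAYSIA", "NORMAL LP", "PUTRAJAYA"]

# Rows: true class, columns: predicted class (inferred from Tables 3 and 4).
CONFUSION = [
    [3, 0, 1],
    [0, 6, 0],
    [0, 0, 2],
]


def per_class_counts(matrix, labels):
    """Derive one-vs-rest TP, TN, FP, and FN for every class."""
    total = sum(sum(row) for row in matrix)
    result = {}
    for k, label in enumerate(labels):
        tp = matrix[k][k]
        fn = sum(matrix[k]) - tp                 # rest of the true-class row
        fp = sum(row[k] for row in matrix) - tp  # rest of the predicted column
        tn = total - tp - fn - fp
        result[label] = {"TP": tp, "TN": tn, "FP": fp, "FN": fn}
    return result


class_counts = per_class_counts(CONFUSION, LABELS)
for label, values in class_counts.items():
    print(label, values)
```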

3.3. Classification Report

The classification report consists of the precision, recall, f1-score, and support of every class. It also gives the accuracy, macro average, and weighted average of the trained Malaysian vanity license plate recognition model. The classification report of the model can be obtained by using the classification report function from the sklearn library (Scikit-learn, 2022b). The testing dataset is passed to this function to obtain the classification report of the model.

Precision is the fraction of the samples predicted as positive for a class that are actually positive (Alzubaidi et al., 2021). The mathematical representation of precision is in Equation 1.

$$\text{Precision} = \frac{TP}{TP + FP} \qquad (1)$$

Support indicates the number of testing images for a class inside the testing dataset.

Accuracy is the ratio of correctly predicted samples to the total number of samples evaluated (Alzubaidi et al., 2021). The mathematical representation of accuracy is in Equation 4.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (4)$$

The macro average is the unweighted mean of a metric over the classes (Scikit-learn, 2022a). In addition, the weighted average is the mean of a metric over the classes, with each class weighted by its support.
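As a worked check, the sketch below recomputes these metrics from the per-class TP, FP, and FN values in Tables 3 and 4. It uses the standard definitions, with recall taken as TP / (TP + FN) and the f1-score as the harmonic mean of precision and recall. The variable names are illustrative, and classes with no predicted or no true samples (which would cause a division by zero) are not handled.

```python
# Per-class (TP, FP, FN) values from Tables 3 and 4.
COUNTS = {
    "MALAYSIA": (3, 0, 1),
    "NORMAL LP": (6, 0, 0),
    "PUTRAJAYA": (2, 1, 0),
}


def class_metrics(tp, fp, fn):
    """Return (precision, recall, f1, support) for one class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    support = tp + fn  # number of test images that truly belong to the class
    return precision, recall, f1, support


report = {name: class_metrics(*c) for name, c in COUNTS.items()}
total = sum(m[3] for m in report.values())

# Multi-class accuracy: correctly classified images over all test images.
accuracy = sum(c[0] for c in COUNTS.values()) / total

# Index 0, 1, 2 = precision, recall, f1.
macro = [sum(m[i] for m in report.values()) / len(report) for i in range(3)]
weighted = [sum(m[i] * m[3] for m in report.values()) / total
            for i in range(3)]

print(round(accuracy, 2), [round(v, 2) for v in macro],
      [round(v, 2) for v in weighted])
```

Rounded to two decimals, the results agree with the reported classification report: an accuracy of 0.92, macro averages of 0.89, 0.92, and 0.89, and weighted averages of 0.94, 0.92, and 0.92.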

The classification reports of the models trained with the original and the modified pretrained ResNet18 network architectures are obtained by using the classification report function in the sklearn library and are shown in Table 5. The classification reports of the two models are the same. The accuracy of both models is 92%.

Table 5 Classification Report of the trained model using: (a) Original pretrained ResNet18; and (b) Modified pretrained ResNet18

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| MALAYSIA | 1.00 | 0.75 | 0.86 | 4 |
| NORMAL LP | 1.00 | 1.00 | 1.00 | 6 |
| PUTRAJAYA | 0.67 | 1.00 | 0.80 | 2 |
| accuracy | | | 0.92 | 12 |
| macro avg | 0.89 | 0.92 | 0.89 | 12 |
| weighted avg | 0.94 | 0.92 | 0.92 | 12 |

(a)

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| MALAYSIA | 1.00 | 0.75 | 0.86 | 4 |
| NORMAL LP | 1.00 | 1.00 | 1.00 | 6 |
| PUTRAJAYA | 0.67 | 1.00 | 0.80 | 2 |
| accuracy | | | 0.92 | 12 |
| macro avg | 0.89 | 0.92 | 0.89 | 12 |
| weighted avg | 0.94 | 0.92 | 0.92 | 12 |

(b)

A Malaysian vanity license plate recognition model is developed in this project. The model is part of Tapway Sdn Bhd’s Automatic Number Plate Recognition (ANPR) system. A transfer learning method is applied to train the model. An original ResNet18 and a modified ResNet18 network architecture are used to train the Malaysian vanity license plate recognition model with the Malaysian vanity license plate images provided by Tapway Sdn Bhd. The modification made in the modified ResNet18 network architecture consists of adding a nonlinear activation function, ReLU, and a dropout layer to the fully connected layer of the pretrained network. In addition, the number of output features of the linear layer inside the fully connected layer is changed, for both the original and the modified ResNet18 network architectures, to match the number of available Malaysian vanity license plate types, so that the model can assign car license plate images to the matching plate type. Because the dataset provided is small, we are unable to observe a significant performance difference between the original model and the modified model. However, the training and validation loss plots still suggest that the modified model performs better than the original model, since the gap between its training loss and validation loss is smaller.

The authors are deeply grateful to Tapway Sdn Bhd for proposing the title, providing sample images, and giving guidance throughout the project.

Abdalkafor, A.S., 2017. Designing Offline Arabic Handwritten Isolated Character Recognition System Using Artificial Neural Network Approach. International Journal of Technology, Volume 8(3), pp. 528–538

Alzubaidi, L., Zhang, J., Humaidi, A.J., Al-Dujaili, A., Duan, Y., Al-Shamma, O., Santamaría, J., Fadhel, M.A., Al-Amidie, M., Farhan, L., 2021. Review of Deep Learning: Concepts, CNN Architectures, Challenges, Applications, Future Directions. Journal of Big Data, Volume 8(1), pp. 1–74

Brownlee, J., 2019. What is Deep Learning? Available online at https://machinelearningmastery.com/what-is-deep-learning/, Accessed on August 27, 2021

Bushaev, 2017. Stochastic Gradient Descent with Momentum. Towards Data Science

Dilmegani, C., 2020. Transfer Learning in 2021: What it is & How it works. Aimultiple.com. Available online at https://research.aimultiple.com/transfer-learning/, Accessed on August 27, 2021

Elhadi, Y., Abdalshakour, O., Babiker, S., 2019. Arabic-Numbers Recognition System for Car Plates. In: International Conference on Computer, Control, Electrical, and Electronics Engineering

Hameed, 2021. Re: Why is My Training Loss Fluctuating? Available online at https://www.researchgate.net/post/Why_is_my_training_loss_fluctuating/6090fac66f51cc540f284cc5/citation/download, Accessed on March 5, 2022

He, K., Zhang, X., Ren, S., Sun, J., 2016. Deep Residual Learning for Image Recognition. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

Inkawhich, N., 2022. Finetuning Torchvision Models—PyTorch Tutorials 1.2.0 Documentation. Pytorch.org. Available online at https://pytorch.org/tutorials/beginner/finetuning_torchvision_models_tutorial.html, Accessed on March 23, 2022

Kashyap, A., Suresh, B., Patil, A., Sharma, S., Jaiswal, A., 2018. Automatic Number Plate Recognition. In: International Conference on Advances in Computing, Communication Control and Networking (ICACCCN), pp. 838–843

Lang, 2021. Using Convolutional Neural Network for Image Classification. Towards Data Science. Available online at https://towardsdatascience.com/using-convolutional-neural-network-for-image-classification-5997bfd0ede4, Accessed on March 14, 2022

Murphy, A., 2017. Batch Size (Machine Learning). Radiopaedia.org. Available online at https://radiopaedia.org/articles/batch-size-machine-learning, Accessed on March 5, 2022

Pangestu, P., Gunawan, D., Hansun, S., 2017. Histogram Equalization Implementation in the Preprocessing Phase on Optical Character Recognition. International Journal of Technology, Volume 8(5), pp. 947–956

Resnet.Py, 2022. At Main-Pytorch/Vision. Available online at https://github.com/pytorch/vision/blob/main/torchvision/models/resnet.py

Scikit-learn, 2022a. 3.3. Metrics And Scoring: Quantifying The Quality Of Predictions. Scikit-learn

Scikit-learn, 2022b. Sklearn Metrics Classification Report. Scikit-learn

Shaheen, F., Verma, B., Asafuddoula, Md., 2016. Impact of Automatic Feature Extraction in Deep Learning Architecture. In: International Conference on Digital Image Computing: Techniques and Applications (DICTA), pp. 1–8

Stöttner, 2019. Why Data should be Normalized before Training a Neural Network. Towards Data Science. Available online at https://towardsdatascience.com/why-data-should-be-normalized-before-training-a-neural-networkc626b7f66c7d#:~:text=Among%20the%20best%20practices%20for,and%20leads%20to%20faster%20convergence, Accessed on March 3, 2022

Zuna, H.T., Hadiwardoyo, S.P., Rahadian, H., 2016. Developing a Model of Toll Road Service Quality using an Artificial Neural Network Approach. International Journal of Technology, Volume 7(4), pp. 562–570