Enhancing Students’ Online Learning Experiences with Artificial Intelligence (AI): The MERLIN Project

Corresponding email: neo.mai@mmu.edu.my

Published at : 19 Oct 2022

Volume : IJtech

Vol 13, No 5 (2022)

DOI : https://doi.org/10.14716/ijtech.v13i5.5843

Neo, M., Lee, C.P., Tan, H.Y., Neo, T.K., Tan, Y.X., Mahendru, N., Ismat, Z., 2022. Enhancing Students’ Online Learning Experiences with Artificial Intelligence (AI): The MERLIN Project. International Journal of Technology. Volume 13(5), pp. 1023-1034

| Mai Neo | Faculty of Creative Multimedia, Multimedia University, 63100 Cyberjaya, Selangor, Malaysia |

| Chin Poo Lee | Faculty of Information Science & Technology, Multimedia University, Jalan Ayer Keroh Lama, 75450 Bukit Beruang, Melaka, Malaysia |

| Heidi Yeen-Ju Tan | Faculty of Creative Multimedia, Multimedia University, 63100 Cyberjaya, Selangor, Malaysia |

| Tse Kian Neo | Faculty of Creative Multimedia, Multimedia University, 63100 Cyberjaya, Selangor, Malaysia |

| Yong Xuan Tan | Faculty of Creative Multimedia, Multimedia University, 63100 Cyberjaya, Selangor, Malaysia |

| Nazi Mahendru | Faculty of Creative Multimedia, Multimedia University, 63100 Cyberjaya, Selangor, Malaysia |

| Zahra Ismat | Faculty of Creative Multimedia, Multimedia University, 63100 Cyberjaya, Selangor, Malaysia |

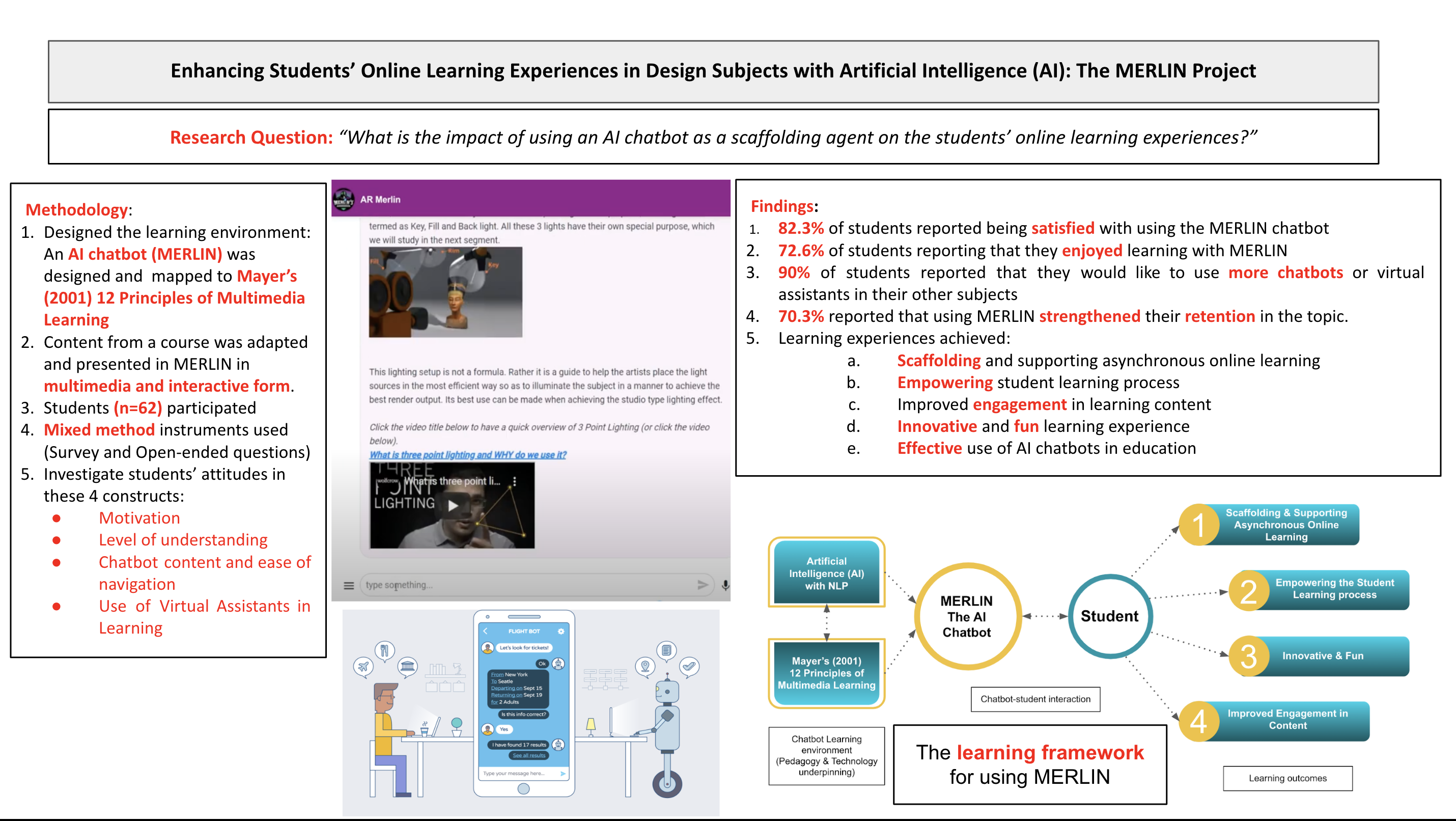

The COVID-19 pandemic forced all educational institutions to transition to fully online learning almost immediately. However, research showed that online learning still lacked adequate interaction with students. This is even more problematic when students are learning online on their own and adequate online scaffolding activities are absent. This study investigated the impact of chatbots as a scaffolding agent to assist student learning during their independent online learning times. A total of 62 Diploma-level students participated in this mixed-method research study and were presented with a multimedia-based AI chatbot named MERLIN. Data were collected on their attitudes towards using it. Results showed that students were motivated to learn more using MERLIN, improved their learning, and wanted more chatbots in their other courses. These findings have important implications for using AI chatbots as a scaffolding and instructional tool in 21st-century learning environments.

Artificial intelligence; Chatbot; Learner experience; Multimedia learning; Scaffold

The move towards online learning has been accelerated in many institutions of higher education, which were previously predominantly face-to-face. The use of technology in the classroom is growing as educators seek to innovate the teaching and learning process with emerging technologies and ICT (Berawi, 2020a; Godin & Terekhova, 2021). These technologies include blended learning web tools such as Kahoot, Padlet, blogs, simulations, and social media. For many universities, integrating technology into the classroom and harnessing the potential of blended learning tools resulted in a smoother transition to online learning environments when the COVID-19 pandemic hit. In Malaysia, the COVID-19 global health crisis led to a total lockdown in the country, resulting in schools and universities making emergency transitions to fully online classes. While some institutions could make the shift with little to no effort, for many, the transition to online learning was a drastic change that left them unprepared and challenged (Azlan et al., 2020). While many universities could rely on the support of their Learning Management Systems (LMS) and blended learning tools, the online learning environment still lacked adequate interaction with students. Much of this was because these online learning environments were deficient in their instructional design and, as such, needed to be redesigned with appropriate support and activities that allow students to better interact with their learning materials (Liguori & Winkler, 2020). This is even more problematic when students are learning independently online, without the presence and support of their lecturer (Keshavarz, 2020). During these times, the need for interaction and a proper support system is higher than when they are in their online classes. Doyumgaç et al. (2021) state that it is unrealistic to depend on students to regulate their learning and engagement processes when studying independently online, and call for appropriate methodologies and measures to support them when they are learning asynchronously online. In addition, in the absence of the instructor, learning activities that encourage active participation and allow students to engage with the learning content before and after their online classes need to be designed and implemented (Allo, 2020). In other words, students need some form of scaffolding activities to enable them to better engage with their learning content.

According to Zaretsky (2021), scaffolding is an important instructional strategy as it assists students in building upon their current level of knowledge. Proper scaffolding enables students to better understand new concepts, develop new skills, and move from what they know now to what they need to know. Scaffolding is underpinned by socio-constructivism, which suggests that the learning process is enhanced when more experienced peers or tutors assist students. Research studies have shown that scaffolding is an effective instructional strategy to engage learners and improve learning outcomes (Belland, 2017; Doo et al., 2020). Martha et al. (2019) suggest that motivation must be included in the design when providing scaffolding support through pedagogical agents. Scaffolding can include fishbowl and think-aloud activities, chunking information into digestible micro modules that are delivered incrementally, visual aids, providing examples, and modeling the process for the learners. When applied to the current educational landscape, these scaffolds can be further developed by utilizing the potential of technology, making them available to students anytime and anywhere. The 2020 Horizon Report (Brown et al., 2020) posits that learning environments can benefit greatly from the incorporation of mixed reality technologies such as Augmented Reality, Artificial Intelligence, Virtual Reality, and adaptive learning tools. Research has shown that the use of these emerging technologies has had positive effects on the learning process and has the potential to innovate online learning environments in an impactful manner. Using AI is beneficial in decision-making and can simplify complex processes (Berawi, 2020b; Siswanto et al., 2022). Research by Gaglo et al. (2021) showed that Artificial Intelligence (AI) can contribute significantly to the educational field, and suggested using chatbots as instructional tools to assist students when they are learning independently online.

Clarizia et al. (2018) presented a chatbot that served as an e-Tutor to support an e-learning system. The framework mapped learning object metadata instances into an ontology, and the learners’ intention was then associated with the ontology using Latent Dirichlet Allocation. Neto and Fernandes (2019) proposed a chatbot for online collaborative learning with an Academically Productive Talk (APT) structure. The APT structure proposed moves to encourage discussions and social interactions among the learners. In addition, Conversational Analysis was incorporated into the chatbot to support teachers in monitoring online collaborative activities. Kowsher et al. (2019) put forth a Bengali chatbot called “Doly”, which enabled learners to type their questions in the Bengali language. To answer a learner’s question, the chatbot used a search algorithm to obtain matching results from the corpus, and the final answer was then selected by a Naïve Bayesian algorithm. Winkler et al. (2020) devised a web-based chatbot, known as “Sara”, that was integrated into online video lectures. Sara intervened in the learning process while learners were watching the videos by asking relevant questions and providing detailed explanations. The voice-based and text-based scaffolds in Sara helped learners to retain information better and to transfer knowledge. Yeves-Martínez and Pérez-Marín (2019) presented “Prof. Watson”, a chatbot for teaching programming in primary education. Prof. Watson was powered by the IBM Watson Assistant service, and its dialog flow was designed based on a methodology for teaching programming in primary education through metaphors.

While there is some evidence that AI chatbots are being used in institutions of higher education (Gaglo et al., 2021), they are still very much in the corporate and medical sectors in Malaysia (Lee et al., 2020), providing customer care services. To date, there is very little evidence in research of chatbots being used in Malaysia for educational purposes, as this technology is still in its infancy in the country. As such, the role and impact of AI chatbots as learning scaffolds for Malaysian educational institutions need to be further investigated. Therefore, this research study investigates the role and impact of using an AI chatbot as an instructional tool and scaffold for students in an online learning environment, specifically when they are learning independently and without the lecturer’s presence. This research was conducted to answer the research question, “What is the impact of using an AI chatbot as a scaffolding agent on the students’ online learning experiences?”, and to present a learning framework for the effective use of AI chatbots as scaffolding agents in the virtual learning environments. This research was guided by the current issue prevalent in the Faculty of Creative Multimedia that design students, who do a lot of their learning online and outside of their classes, lack lecturer support and interactions during these times. Learning is thus truncated until they meet their lecturer again during class time. During this time, retention of the information is lowered, and knowledge is unsustained. The development of the MERLIN chatbot would bridge that gap by providing assistance and support during independent online learning times. However, since this would be a new technology for students to use, their readiness and acceptance of the chatbot for these scaffolding purposes would need to be assessed, in addition to their learning experiences in using a chatbot during their independent online learning times (Salloum et al., 2019).

2.1. Learning with AI – The MERLIN Project

The AI chatbot, MERLIN, was developed as part of MERLIN’s Playground, a program conducted at Multimedia University, Malaysia, to use mixed reality technologies in classrooms. The MERLIN Project focused on designing and developing a chatbot as a virtual scaffold and learning assistant for students learning independently online.

In the MERLIN chatbot, natural language processing (NLP) is used to understand the meaning of the student’s text input. When students interact with the chatbot, it processes their input sentences through different NLP models before responding. The chatbot needs to extract two main things from each sentence: the entity and the intent. The entity represents the object in the sentence, while the intent represents the purpose of the sentence. Capturing the intent is more challenging, since a sentence might not have a clear purpose. The entities to be identified include human names, positive words, dates, and more. Since the pre-trained models in the MERLIN chatbot cannot detect all entity types, a customized model must be trained to capture specific types of entities. For instance, when the student enters his/her name, the pre-trained human name detector model will capture the human name in the sentence. Another example is when the student enters “I want to do a quiz”: the customized entity model will detect the word “quiz” and trigger the quiz module. Besides the pre-trained entity models, the MERLIN chatbot allows developers to train their own customized entity models. Specifically, the customizable entity model is a Conditional Random Field (CRF) model that is trained to detect the entity in different sentence variations. Training a customizable entity model requires a set of training samples. For instance, to detect the entity “3-point lighting example”, different sentences related to “3-point lighting example” need to be collected as training samples, such as “Show me some three-point lighting examples” and “what is 3-point lighting example”. Since entity model training does not require labeled samples, none of these sentences is mapped to a label.
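The paper does not give implementation details for the entity models, so the following is only a minimal sketch of the routing idea described above: differently worded sentences are reduced to the same entity, which can then trigger a module. A normalized phrase lookup stands in for the trained CRF model, and the names `ENTITY_PHRASES`, `NUMBER_WORDS`, `normalise`, and `detect_entity` are hypothetical.

```python
import re

# Hypothetical entity catalogue: canonical entity name -> surface phrases.
# A trained CRF model would learn sentence variations from sample sentences;
# a plain phrase lookup stands in for it here (assumption, not MERLIN's code).
ENTITY_PHRASES = {
    "quiz": ["quiz"],
    "3-point lighting example": ["3-point lighting example"],
}

# Spelling variants folded into one form before matching (assumed).
NUMBER_WORDS = {"one": "1", "two": "2", "three": "3"}


def normalise(sentence: str) -> str:
    """Lower-case, map number words to digits, and turn 'examples' into 'example'."""
    text = sentence.lower()
    for word, digit in NUMBER_WORDS.items():
        text = re.sub(rf"\b{word}\b", digit, text)
    text = re.sub(r"\bexamples\b", "example", text)
    # Collapse anything that is not a letter, digit, or hyphen into one space.
    text = re.sub(r"[^a-z0-9\-]+", " ", text)
    return text.strip()


def detect_entity(sentence: str):
    """Return the first catalogue entity found in the sentence, or None."""
    text = normalise(sentence)
    for entity, phrases in ENTITY_PHRASES.items():
        if any(phrase in text for phrase in phrases):
            return entity
    return None


if __name__ == "__main__":
    for s in ["I want to do a quiz",
              "Show me some three-point lighting examples",
              "what is 3-point lighting example"]:
        print(s, "->", detect_entity(s))
```

A lookup like this only covers variations that were anticipated in advance, which is the limitation a statistical sequence model such as a CRF is meant to relax.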

As for the intent models, several customized intent models were created to capture the desired intent from the input sentences. For example, when the student keys in “I want to know about 3-point lighting example”, a customized intent model needs to understand that the purpose of this sentence is to request examples of 3-point lighting, so that the correct content is returned to the student. Each intent model is a Bernoulli Naive Bayes (BNB) model (Metsis et al., 2006; Manning et al., 2008). For instance, one BNB model was trained to recognize the purpose of sentences related to “Faraday’s law application”. Intent models require many labelled training samples, which are grouped into positive and negative samples. For example, “I want to know about the application of Faraday’s Law” and “Get application of Faraday’s Law” are positive samples. In contrast, “The importance of Faraday’s Law” and “The Faraday’s Law explanation” are negative samples. The model needs to learn to capture the purpose of the training sentences that are truly related to the “Application of Faraday’s Law”, even though the negative samples also contain the phrase “Faraday’s Law”. With this model, the MERLIN chatbot returns the relevant information to the students based on their queries. Figure 1 shows some examples of the content returned to the students.
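To illustrate how a Bernoulli Naive Bayes intent model can separate the positive and negative samples quoted above, here is a small self-contained sketch. It is not the MERLIN implementation: the class `BernoulliNB`, the helper `tokens`, the label names `"application"` and `"other"`, and the Laplace smoothing value are assumptions, and a real model would need far more than four training sentences.

```python
import math
import re


def tokens(sentence: str) -> set:
    """Binary bag of words: the set of lower-cased word tokens in the sentence."""
    return set(re.findall(r"[a-z0-9']+", sentence.lower().replace("\u2019", "'")))


class BernoulliNB:
    """Bernoulli Naive Bayes over word presence/absence with Laplace smoothing."""

    def fit(self, sentences, labels, alpha=1.0):
        docs = [tokens(s) for s in sentences]
        self.vocab = sorted(set().union(*docs))
        self.classes = sorted(set(labels))
        self.log_prior = {}
        self.p_word = {}
        for c in self.classes:
            class_docs = [d for d, y in zip(docs, labels) if y == c]
            self.log_prior[c] = math.log(len(class_docs) / len(docs))
            # P(word present | class), smoothed so it is never exactly 0 or 1.
            self.p_word[c] = {
                w: (sum(w in d for d in class_docs) + alpha)
                / (len(class_docs) + 2 * alpha)
                for w in self.vocab
            }
        return self

    def predict(self, sentence):
        present = tokens(sentence)
        scores = {}
        for c in self.classes:
            score = self.log_prior[c]
            for w in self.vocab:
                p = self.p_word[c][w]
                # Bernoulli likelihood: absent vocabulary words contribute too.
                score += math.log(p if w in present else 1.0 - p)
            scores[c] = score
        return max(scores, key=scores.get)


# Training samples taken from the text; the labels are hypothetical names.
TRAIN = [
    ("I want to know about the application of Faraday's Law", "application"),
    ("Get application of Faraday's Law", "application"),
    ("The importance of Faraday's Law", "other"),
    ("The Faraday's Law explanation", "other"),
]

model = BernoulliNB().fit([s for s, _ in TRAIN], [y for _, y in TRAIN])

if __name__ == "__main__":
    print(model.predict("I want to get the application of Faraday's Law"))
```

Because the Bernoulli variant also scores the absence of vocabulary words, very short queries can be pulled towards the class with shorter training sentences, which is one reason such a model needs many labelled samples per intent.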

Figure 1 MERLIN returning the relevant information to students’ queries

The learning content in the chatbot was customized to the syllabus of a design course taught in the Faculty of Creative Multimedia, Multimedia University, called “Lighting in 3D modeling”. The aim was to create a conversational AI chatbot that could scaffold students when they were learning online without the presence of their lecturer. The interaction between the chatbot and the learner was underpinned by Natural Language Processing (NLP) features, using the Conditional Random Field (CRF) entity model and the Bernoulli Naive Bayes (BNB) intent model, so that conversations simulated intelligent human language interactions. In addition, the content in the chatbot was redesigned using multimedia elements such as videos, narrations, and animations. The final learning content returned by the chatbot would thus be media-rich, comprehensive, and visually appealing, giving students a multi-sensory learning experience with a chatbot within a human-like interactive learning environment.

In particular, the chatbot was designed to provide multimedia-based learning materials to differentiate it from the conventional chatbots used in non-educational environments. A self-efficacy quiz was also provided so that learners could assess themselves and reflect on their scores, as learners’ self-efficacy has been shown to impact their attitudes towards using a technology (Abdullah & Ward, 2016). Based on their scores, they would then have the opportunity to go back to MERLIN to further study the areas in which they were weaker, or to feel more confident in their newly acquired knowledge of the topic.

2.2. Methodology

This study employed a convergent mixed-method research design to collect and analyze both qualitative and quantitative data solicited from the students in one phase. A 22-item survey questionnaire was administered to students in the Faculty of Creative Multimedia to gauge their attitudes towards using this AI chatbot. These students were taking their Diploma in Creative Multimedia, were enrolled in a design course, and were learning about “Lighting in 3D modeling”. Research has shown that many e-learning applications have been developed to create more technologically supported learning environments (Asvial et al., 2021). However, there is still a lack of confidence in, and sound pedagogical support for, these environments, and therefore still a need to assess their effectiveness and the readiness of learners to use them (Salloum et al., 2019). The Technology Acceptance Model (TAM) by Davis (1989) has been widely used to gauge user preparedness over a wide range of technologies (Tao et al., 2022; Yeo et al., 2022). The model suggests that users’ intention to use a particular technology is predicated upon their perception of its usefulness (PU) and its ease of use (PEOU), which then shape their attitudes towards usage (ATU). The model also suggests that PU and PEOU capture the cognitive aspects of the student learning experience, while ATU is the students’ positive or negative emotional assessment of the technology, which will impact their decision to use that technology in the future. Content, navigation, self-efficacy, enjoyment, media features, and instructional quality can also have an impact on students’ Intention to Use (IU) the technology (Abdullah & Ward, 2016; Tao et al., 2022).

In this study, since using an AI chatbot would be new for the students, their acceptance and perceptions of using it were investigated. Therefore, Davis’s (1989) Technology Acceptance Model (TAM) was chosen. The study’s survey adapted the model to investigate students’ Intention to Use (IU) chatbots in their learning process. Data were collected from students to gauge their perceptions of the MERLIN chatbot’s Perceived Usefulness (PU) and Perceived Ease of Use (PEOU), which consequently would affect their Attitudes Towards Usage (ATU) of the chatbot and, ultimately, their preparedness to use chatbots in the future. In particular, the survey sought to investigate these key determinants through the following 4 constructs:

1) Level of understanding (6 survey items mapped to Perceived Usefulness (PU))

2) Chatbot content and ease of navigation (6 survey items mapped to Perceived Ease of Use (PEOU))

3) Motivation (6 survey items mapped to Attitudes Towards Usage (ATU))

4) Use of Virtual Assistants in Learning (4 survey items mapped to Intention To Use (IU))

The survey was a 5-point Likert scale questionnaire, ranging from 5 (Strongly Agree), 4 (Agree), 3 (Undecided), and 2 (Disagree) to 1 (Strongly Disagree), and was conducted voluntarily. Students were given a short briefing on the study and were informed that the survey would not affect their course grades. They were then given a consent form to fill in and the option not to participate. A total of 62 students agreed to be part of the study, with 18 students opting not to participate. Students who agreed to participate were directed to the chatbot’s link and given 30 minutes to explore MERLIN before completing the questionnaire. In addition, as part of the convergent mixed-method research design, qualitative data were collected from open-ended questions soliciting student comments. These comments were integral to gauging students’ perceptions towards using MERLIN as a scaffolding tool in their independent online learning process. They were also analyzed and compared with the survey’s results to support this study’s findings.
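For readers who want to reproduce the two per-item statistics reported for this survey, the item mean (M) and the percentage of positive responses (scores of 4 or 5), a minimal sketch follows. The function name `summarise_item` and the sample responses are hypothetical and are not data from the study.

```python
from statistics import mean


def summarise_item(responses):
    """Return (mean, percent positive) for one 5-point Likert item.

    A response counts as positive when it is 4 (Agree) or 5 (Strongly Agree).
    """
    if not responses or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be non-empty integers from 1 to 5")
    positive = sum(1 for r in responses if r >= 4)
    return round(mean(responses), 2), round(100 * positive / len(responses), 2)


if __name__ == "__main__":
    # Hypothetical responses from ten students to a single survey item.
    sample = [5, 4, 4, 3, 2, 5, 4, 3, 4, 1]
    print(summarise_item(sample))
```

Note that the two figures can diverge: many "Undecided" answers lower the percentage of positive responses without lowering the mean by much.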

The study’s findings were analyzed with SPSS v27 to answer the research question, “What is the impact of using an AI chatbot as a scaffolding agent on the students’ online learning experiences?”. The survey findings are reported as item means (M) and the percentage of positive responses, p (i.e., students who scored 4 (Agree) or 5 (Strongly Agree) on the survey). Reliability analysis was performed on the survey and yielded a Cronbach’s alpha of 0.93, indicating that the survey was reliable. To better understand the findings on each of the constructs, the survey is further categorized into 1) Level of Understanding, 2) Chatbot Content and Ease of Navigation, 3) Motivation, and 4) Use of Virtual Assistants in Learning. Tables 1-4 present the questionnaire items for each of the constructs, followed by the supporting student comments.
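The reliability figure above was obtained with SPSS. As an illustration of what Cronbach’s alpha measures, the sketch below computes it from a respondents-by-items score matrix using the standard formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores). The function name `cronbach_alpha` and the sample scores are hypothetical and are not the study’s data.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    Uses sample variances (n - 1 denominator) for items and for total scores.
    """
    if len(scores) < 2:
        raise ValueError("need at least two respondents")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least two items and equal-length rows")
    item_variances = sum(variance(col) for col in zip(*scores))
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_variances / total_variance)


if __name__ == "__main__":
    # Hypothetical scores: 5 respondents answering 3 Likert items.
    sample = [[4, 5, 4], [3, 3, 4], [5, 5, 5], [2, 3, 2], [4, 4, 5]]
    print(round(cronbach_alpha(sample), 3))
```

Values close to 1 mean that the items vary together across respondents, which is why a high alpha is read as evidence that the items measure a common underlying attitude.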

Table 1 Survey findings for Level of Understanding

| No. | Item | Mean (M) | % positive responses |
|---|---|---|---|
| 1. | The Merlin Assistant cleared doubts | 3.98 | 69.4 |
| 2. | The additional info was quite helpful. | 4.18 | 79.03 |
| 3. | The inclusion of a quiz in the MERLIN Virtual Assistant further helped in assessing the authenticity of my understanding of the topic. | 3.89 | 72.10 |
| 4. | The MERLIN virtual assistant tool enhanced my understanding of this topic in an interesting & engaging manner. | 3.84 | 69.36 |
| 5. | The MERLIN Virtual Assistant helped me strengthen my retention of the topic. | 3.97 | 70.3 |
| 6. | I found Merlin Virtual Learning Assistant informative and engaging. | 3.97 | 77.05 |

As shown in Table 1, 69.4% of students reported that MERLIN was able to clarify and clear any doubts that they had about questions they had in mind (Item 1, M = 3.98) and, in doing so, strengthened their retention of the topic (Item 5, M = 3.97, p = 70.3%). 72.1% of the students found that taking the quiz allowed them to better assess their level of understanding of the topic (Item 3, M = 3.89), and 79% of them reported that the additional information given was very helpful (Item 2, M = 4.18). 69% of students reported that MERLIN enhanced their understanding of the topics (Item 4, M = 3.84), and 77% of them found MERLIN to be informative and engaging (Item 6, M = 3.97). The data were also supported by student comments that MERLIN was able to enhance their understanding of the topic, as students commented that, “[MERLIN] Makes revising more accessible and easy”, “I can simply test my understanding … after I learn something new”, “[MERLIN] Clear up any confusion I have regarding certain topics”, and, “Merlin doesn't confuse me when it shows the explanation of the topic, it keeps it simple and easy to understand making it easier and faster to digest what the topic is about”. They also pointed out that using MERLIN saved them the time and effort of searching for the information themselves on YouTube and trying to understand it on their own, and that the quiz feature enabled them to self-evaluate their level of knowledge.

Table 2 Survey findings for Chatbot Content and Ease of Navigation

| No. | Item | Mean (M) | % positive responses |
|---|---|---|---|
| 1. | I was able to navigate through MERLIN easily from start to finish. | 3.90 | 66 |
| 2. | The content in the MERLIN Virtual Assistant was well-organized and followed a suitable sequence for understanding a topic. | 4.03 | 83.34 |
| 3. | The language and the content of the MERLIN Virtual Assistant was easily understandable. | 4.31 | 79 |
| 4. | The inclusion of web links and visual aids, such as videos & images, in the MERLIN Virtual Assistant further helped in clarity of the topic. | 4.24 | 76 |
| 5. | I had no problem going through MERLIN on my own. | 4.02 | 70.49 |
| 6. | It was easy for me to become skillful at using the Merlin Virtual Assistant. | 3.90 | 66.13 |

Table 2 shows that the majority of students reported that navigating through MERLIN was not difficult, with 66% of students stating that they were able to navigate easily within the chatbot (Item 1, M = 3.90), and 70% of them being able to go through MERLIN on their own without problems (Item 5, M = 4.02). In addition, 79% of students found the language and content in MERLIN easy to understand (Item 3, M = 4.31). 76% of students reported that the inclusion of media-rich elements such as videos and images explained concepts better (Item 4, M = 4.24), and 66% of them reported that they could easily become skilful at using MERLIN (Item 6, M = 3.90).

Student comments also showed positive support for content and navigation in MERLIN, as many stated that they found the content easy to understand and MERLIN easy to navigate, commenting that, “Merlin provided videos, images and even audio explanations which is easy to understand and remember”, “I like how clear every explanation and definition is to understand”, and, “It's easier to remember when you can answer quizzes while learning”. They also commented that MERLIN helped them during times when their lecturer was not available and they were learning online on their own. This implies that MERLIN could scaffold and support their online learning during out-of-class times. Students also appreciated the presentation of content in media-rich form, commenting that the inclusion of video, images, and audio explanations contributed to their retention of the topic. Similarly, the self-efficacy quiz provided in MERLIN enabled them to retain the information better, as “It’s easier to remember when you can answer quizzes while learning”. They also commented on MERLIN’s ability to “...understand me easily when I ask a certain question”, which further indicates that MERLIN was able to provide scaffolding to them.

From the findings in Table 3, it can be seen that 82.3% of students reported being satisfied with using the MERLIN chatbot (Item 6, M = 4.24), 72.6% of students reported that they enjoyed learning with MERLIN (Item 1, M = 4.00), and 61% had fun using it (Item 2, M = 3.90). Students also stated that MERLIN enhanced their understanding of the topic, enabling them to feel more confident in the knowledge that they gained from the chatbot (Item 4, M = 3.81, p = 64%) and, consequently, more engaged (Item 5, M = 3.95, p = 69.4%). This led to 69% of them commenting that they were more motivated to learn about the topic (Item 3, M = 3.76). The student comments that were solicited further strengthened the survey results (Note: comments are verbatim). In terms of motivation, students stated that they were very motivated by using MERLIN, commenting that it was “A fun way to learn a topic”, “I enjoyed learning along with Merlin”, “It'll keep me engaged, and it makes learning easier and fun”, and, “I find this MERLIN an interesting a.i to interact with”. These comments suggest a positive attitude from the students towards using MERLIN in their studies, as they commented, “I've never thought a virtual assistant [MERLIN] can be this good!”

Table 3 Survey findings for Motivation

| No. | Item | Mean (M) | % positive responses |
|---|---|---|---|
| 1. | I enjoyed learning with the MERLIN Virtual Assistant module. | 4.00 | 72.6 |
| 2. | I had fun learning with MERLIN. | 3.90 | 61.29 |
| 3. | Thanks to MERLIN, I feel more motivated to learn further about this topic. | 3.76 | 60.65 |
| 4. | I am more confident now with the knowledge that I have gained from the MERLIN Virtual Assistant | 3.81 | 64 |
| 5. | With MERLIN's help, I feel more engaged with this topic. | 3.95 | 69.4 |
| 6. | Overall I am satisfied in using the MERLIN Virtual Assistant for my learning of this topic. | 4.24 | 82.3 |

Table 4 Survey findings for Use of Virtual Assistants in Learning

| No. | Item | Mean (M) | % positive responses |
|---|---|---|---|
| 1. | I would like to learn more with such virtual assistants for my other subjects. | 4.38 | 90.33 |
| 2. | I would like to use virtual learning assistants more often for my coursework. | 4.19 | 74.19 |
| 3. | I believe it is a good idea to use virtual learning assistants for extra knowledge and in depth understanding outside of the class. | 4.24 | 85.24 |
| 4. | I found these assistant learning tools very suitable for online learning environments | 4.34 | 87.1 |

Findings for this construct in Table 4 yielded high positive means for all items, indicating that students’ Intention to Use (IU) MERLIN was high. Over 90% of students reported that they would like to use more chatbots or virtual assistants in their other subjects (Item 1, M = 4.38), making it the highest-scoring item in the table, and over 87% of students stated that they found these tools very suitable for online learning environments (Item 4, M = 4.34). 85% of students found that having chatbots was beneficial for acquiring additional in-depth knowledge outside of the classroom (Item 3, M = 4.24), with 74% of students reporting that they wanted to use virtual learning assistants more often in their coursework (Item 2, M = 4.19). With regard to their intention to use chatbots in their learning process, many reported that, “It saves me time as a student”, “This type of virtual assistants can help me a lot when I don't have anyone to refer to for learning purpose”, “It will help when im doing assignments on unfamiliar topics during late night sessions”, and, “It can guide me while I trying to find a certain topic for me to learn”. Their comments indicated that MERLIN was an effective instructional tool to “...help students to study much better due to the difficulties of learning face to face with the lecturers” and to enable more active and supported learning to take place during independent online learning times.

Figure 2 The learning framework for learning with the MERLIN chatbot

Overall, the study’s findings show highly favorable student attitudes towards using MERLIN when they were learning independently online. In answering the research question, “What is the impact of using an AI chatbot as a scaffolding agent on the students’ online learning experiences?”, the MERLIN chatbot was found to be capable of creating and supporting positive online learning experiences for students in the study. These experiences include:

1.

Scaffolding and supporting asynchronous online learning. The AI chatbot was effective in scaffolding the student

learning process by providing educational support during students’ asynchronous

learning times. Findings showed most students had commented that MERLIN could

support them during times when there was no lecturer available and when they

had doubts during their independent online learning times. This result is in

line with research by Doyumgaç et al. (2021) on the effectiveness of using chatbots as a guide to

better online interactions with students. There are implications that a chatbot

can provide scaffolding support to students during their independent online

learning times and in line with research by Salloum

et al. (2019) and Winkler

et al. (2020), and create a more sustainable

learning experience where students are actively engaged and supported in their

learning goals, and the teacher facilitates the learning process, and the technology

becomes an integral enabler of the learning environment.

2.

Empowering student learning process. Students reported being more confident and skilful after

using MERLIN, which contributed to their overall feeling of satisfaction in

their new learning process. Learning was enhanced significantly when students

interacted with the chatbot. Multimedia was effective in enhancing the learning

in the chatbot. By providing more media-rich content, students were more likely

to involve themselves in the learning process, as evidenced by their comments

and survey findings, and in line with research by Keshavarz

(2020) and Doyumgaç

et al. (2021).

3. Improved engagement in learning content. Results from the survey and student comments showed that knowledge acquisition and retention were improved, as MERLIN allowed students to further clarify their doubts about the topic and made it easier for them to retain the information. Enjoyment and engagement were reportedly high among these students, who regarded using a chatbot to help them during independent online learning times as novel and innovative. Furthermore, the self-efficacy quiz was helpful for self-evaluation of their learning progress and ultimately for improving their knowledge acquisition, thus addressing the design issue of online learning activities (Liguori & Winkler, 2020).

4. Effective use of AI chatbots in education. MERLIN was an effective instructional tool in motivating students to learn more and engage with the content. Students were actively involved in using MERLIN in their learning process, and the use of a chatbot as a scaffold and a virtual learning assistant was very exciting and novel to them; many reported wanting more of these assistants for their other courses. The benefits of having these chatbots were invaluable to them during these learning times, when lecturer support was unavailable. Therefore, this shows that using chatbots during independent online learning times allows the learning process to be sustained until the students and lecturers meet again, and is consistent with the Horizon Report 2020, which suggests that using emerging technologies such as AI is more effective for 21st-century student learning experiences (Brown et al., 2020).

Thus, both the qualitative and quantitative findings show strong positive support for this learning environment and for the learning framework as a guide for educators using chatbots in their classes.

Driven by the lack of pedagogically sound chatbot design for scaffolding and supporting students’ online learning, this research study investigated the impact and role of an AI chatbot in enhancing student learning experiences and acting as a scaffold during their independent online learning times. A multimedia-based AI chatbot named MERLIN was designed with NLP features based on the Conditional Random Field (CRF) and Bernoulli Naive Bayes (BNB) models and presented to students learning a design course. Survey and feedback data were collected from students, and results showed that interacting with MERLIN resulted in higher motivation and engagement levels, increased understanding of the topic, and requests for more AI chatbots to assist them in other subjects. These findings strongly indicate that AI chatbots can be very beneficial in online learning environments as scaffolds that enhance students’ learning experiences.
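As a rough illustration of the kind of Bernoulli Naive Bayes (BNB) text classification mentioned above, the following minimal Python sketch assigns a student message to an intent using binary word-presence features. It is not MERLIN's implementation: the training phrases, the intent labels, and the function names `tokenize`, `train`, and `predict` are all hypothetical, and the CRF component is not shown.

```python
import math
from collections import defaultdict

# Hypothetical training data (assumption): (student message, intent label).
TRAINING = [
    ("what is colour theory", "ask_definition"),
    ("define typography", "ask_definition"),
    ("what is the meaning of contrast", "ask_definition"),
    ("give me a quiz", "request_quiz"),
    ("i want to take the quiz", "request_quiz"),
    ("start the quiz now", "request_quiz"),
]


def tokenize(text):
    """Lower-case the text and return its set of distinct words."""
    return set(text.lower().split())


def train(examples):
    """Estimate log priors and smoothed word-presence probabilities per class."""
    vocab = set()
    docs_per_class = defaultdict(int)
    word_docs = defaultdict(lambda: defaultdict(int))
    for text, label in examples:
        words = tokenize(text)
        vocab |= words
        docs_per_class[label] += 1
        for w in words:
            word_docs[label][w] += 1
    total = len(examples)
    model = {}
    for label, n in docs_per_class.items():
        # Laplace smoothing for a binary feature: (count + 1) / (n + 2).
        probs = {w: (word_docs[label][w] + 1) / (n + 2) for w in vocab}
        model[label] = (math.log(n / total), probs)
    return vocab, model


def predict(text, vocab, model):
    """Return the most probable intent; words outside the vocabulary are ignored."""
    words = tokenize(text) & vocab
    best_label, best_score = None, -math.inf
    for label, (log_prior, probs) in model.items():
        score = log_prior
        # Bernoulli model: absent words contribute log(1 - p) as well.
        for w in vocab:
            p = probs[w]
            score += math.log(p) if w in words else math.log(1 - p)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


VOCAB, MODEL = train(TRAINING)
print(predict("what is typography", VOCAB, MODEL))
print(predict("can i take a quiz", VOCAB, MODEL))
```

Unlike a multinomial model, the Bernoulli variant scores the absence of each vocabulary word as well as its presence, which tends to suit short chat messages where each word appears at most once.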

The authors would like to thank the staff and students of Multimedia University (MMU) for participating in the study and the teams from MERLIN’s PLAYGROUND for their assistance in this research. This study was funded by the Telekom Malaysia Research & Development (TMRnD) 2019 research grant (RDTC/190995).

Abdullah, F., Ward, R., 2016. Developing a General Extended Technology Acceptance Model for E-Learning (GETAMEL) by Analysing Commonly Used External Factors. Computers in Human Behavior, Volume 56, pp. 238–256

Allo, M.D.G., 2020. Is the Online Learning Good in the Midst of Covid-19 Pandemic? The Case of EFL Learners. Jurnal Sinestesia, Volume 10(1), pp. 1–8

Asvial, M., Mayangsari, J., Yudistriansyah, A., 2021. Behavioral Intention of e-Learning: A Case Study of Distance Learning at a Junior High School in Indonesia due to the COVID-19 Pandemic. International Journal of Technology, Volume 12(1), pp. 54–64

Azlan, A.A., Hamzah, M.R., Sern, T.J., Ayub, S.H., Mohamad, E., 2020. Public Knowledge, Attitudes and Practices Towards COVID-19: A Cross-Sectional Study in Malaysia. PLOS ONE, Volume 15(5), p. e0233668

Belland, B.R., 2017. Instructional Scaffolding in STEM Education: Strategies and Efficacy Evidence. Cham, Switzerland: Springer

Berawi, M.A., 2020a. Empowering Healthcare, Economic, and Social Resilience during Global Pandemic Covid-19. International Journal of Technology, Volume 11(3), pp. 436–439

Berawi, M.A., 2020b. Managing Artificial Intelligence Technology for Added Value. International Journal of Technology, Volume 11(1), pp. 1–4

Brown, M., McCormack, M., Reeves, J., Brooks, D.C., Grajek, S., Alexander, B., Bali, M., Bulger, S., Dark, S., Engelbert, N., Gannon, K., Gauthier, A., Gibson, D., Gibson, R., Lundin, B., Veletsianos, G., Weber, N., 2020. Educause Horizon Report Teaching and Learning Edition 2020. Educause, United States

Clarizia, F., Colace, F., Lombardi, M., Pascale, F., Santaniello, D., 2018. Chatbot: An Education Support System for Student. In: International Symposium on Cyberspace Safety and Security, pp. 291–302

Davis, F.D., 1989. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Quarterly, Volume 13(3), pp. 319–340

Doo, M.Y., Bonk, C., Heo, H., 2020. A Meta-Analysis of Scaffolding Effects in Online Learning in Higher Education. International Review of Research in Open and Distributed Learning, Volume 21(3), pp. 60–80

Doyumgaç, I., Tanhan, A., Kiymaz, M.S., 2021. Understanding the Most Important Facilitators and Barriers for Online Education During COVID-19 Through Online Photovoice Methodology. International Journal of Higher Education, Volume 10(1), pp. 166–190

Gaglo, K., Degboe, B.M., Kossingou, G.M., Ouya, S., 2021. Proposal of Conversational Chatbots for Educational Remediation in the Context of Covid-19. In: 2021 23rd International Conference on Advanced Communication Technology (ICACT), Volume 23, pp. 354–358

Godin, V.V., Terekhova, A., 2021. Digitalization of Education: Models and Methods. International Journal of Technology, Volume 12(7), pp. 1518–1528

Keshavarz, M.H., 2020. A Proposed Model for Post-Pandemic Higher Education. Budapest International Research and Critics in Linguistics and Education (BirLE) Journal, Volume 3(3), pp. 1384–1391

Kowsher, M., Tithi, F.S., Alam, M.A., Huda, M.N., Moheuddin, M.M., Rosul, M.G., 2019. Doly: Bengali Chatbot for Bengali Education. In: 2019 1st International Conference on Advances in Science, Engineering and Robotics Technology (ICASERT), pp. 1–6

Lee, M.Z., Kee, D.M.H., Chan, K.Y., Liow, C.S., Chin, K.Y., Alkandri, L.A., 2020. Improving Customer Service: A Case Study of Genting Malaysia. Journal of the Community Development in Asia (JCDA), Volume 3(1), pp. 44–53

Liguori, E., Winkler, C., 2020. From Offline to Online: Challenges and Opportunities for Entrepreneurship Education Following the COVID-19 Pandemic. Entrepreneurship Education and Pedagogy, Volume 3(4), pp. 346–351

Manning, C.D., Raghavan, P., Schütze, H., 2008. Introduction to Information Retrieval. New York: Cambridge University Press, pp. 405–416

Martha, A.S.D., Santoso, H.B., Junus, K., Suhartanto, H., 2019. A Scaffolding Design for Pedagogical Agents within the Higher-Education Context. In: Proceedings of the 2019 11th International Conference on Education Technology and Computers, pp. 139–143

Metsis, V., Androutsopoulos, I., Paliouras, G., 2006. Spam Filtering with Naive Bayes – Which Naive Bayes? In: Third Conference on Email and Anti-Spam (CEAS), Volume 17, pp. 28–69

Neto, A.J.M., Fernandes, M.A., 2019. Chatbot and Conversational Analysis to Promote Collaborative Learning in Distance Education. In: 2019 IEEE 19th International Conference on Advanced Learning Technologies (ICALT), Volume 2161, pp. 324–326

Salloum, S.A., Al-Emran, M., Shaalan, K., Tarhini, A., 2019. Factors Affecting the E-learning Acceptance: A Case Study from UAE. Education and Information Technologies, Volume 24(1), pp. 509–530

Siswanto, J., Suakanto, S., Andriani, M., Hardiyanti, M., Kusumasari, T.F., 2022. Interview Bot Development with Natural Language Processing and Machine Learning. International Journal of Technology, Volume 13(2), pp. 274–285

Tao, D., Fu, P., Wang, Y., Zhang, T., Qu, X., 2022. Key Characteristics in Designing Massive Open Online Courses (MOOCs) for User Acceptance: An Application of the Extended Technology Acceptance Model. Interactive Learning Environments, Volume 30(5), pp. 882–895

Winkler, R., Hobert, S., Salovaara, A., Söllner, M., Leimeister, J.M., 2020. Sara, the Lecturer: Improving Learning in Online Education with a Scaffolding-Based Conversational Agent. In: Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, pp. 1–14

Yeo, S., Rutherford, T., Campbell, T., 2022. Understanding Elementary Mathematics Teachers’ Intention to Use a Digital Game Through the Technology Acceptance Model. Education and Information Technologies, pp. 1–22

Yeves-Martínez, P., Pérez-Marín, D., 2019. Prof. Watson: A Pedagogic Conversational Agent to Teach Programming in Primary Education. In: Multidisciplinary Digital Publishing Institute Proceedings, Volume 31(1), pp. 84–91

Zaretsky, V.K., 2021. One More Time in the Zone of Proximal Development. Cultural-Historical Psychology, Volume 17(2), pp. 37–49