Technical Loopholes and Trends in Unmanned Aerial Vehicle Communication Systems

Published at : 17 May 2024

Volume : IJtech

Vol 15, No 3 (2024)

DOI : https://doi.org/10.14716/ijtech.v15i3.5644

Shafik, W., Kalinaki, K., Matinkhah, S.M., 2024. Technical Loopholes and Trends in Unmanned Aerial Vehicle Communication Systems. International Journal of Technology. Volume 15(3), pp. 654-664

| Wasswa Shafik | 1. Dig Connectivity Research Laboratory (DCRLab), P. O. Box. 600040, Kampala, Uganda, 2. Borderline Research Laboratory, P. O. Box. 7689, Kampala, Uganda |

| Kassim Kalinaki | 1. Department of Computer Science, Islamic University in Uganda. P. O. Box 2555, Mbale, Uganda, 2. Borderline Research Laboratory, P. O. Box. 7689, Kampala, Uganda |

| S. Mojtaba Matinkhah | Computer Engineering Department, Yazd University, University Boulevard, Safayieh-Yazd, Yazd 89195-741 Yazd Iran |

Advancements in technology have positioned Unmanned Aerial Vehicles (UAVs) as current and future computational paradigms. This novel artificial intelligence tool facilitates various applications, including communication, sports, real-time data collection in agriculture, and commercial purposes, even though UAVs were long known mainly for military use in navigating hard-to-reach areas and environments hostile to physical human interaction. In UAV communications, diversity combining has been used for some years, with the Bit Error Rate (BER) studied for selection combining, maximal ratio combining, equal gain combining, and direct combining, and for the Rayleigh and Ricean approaches. This paper surveys models and current UAV systems to analyze the most common technologies in UAV diversity combining under Rayleigh and Ricean schemes, based on the diversity combination properties. Based on the survey, the existing proposed models have not identified the most reliable and effective model for adopting Rayleigh or Ricean in UAV communication systems and UAV-associated concerns.

Diversity combining; Rayleigh scheme; Ricean scheme; Unmanned aerial vehicle

Sustainable development has been associated with smart cities and the integration of the Internet of Things (IoT). For instance, drones that operate autonomously or semi-autonomously, supported by artificial intelligence techniques such as Machine Learning (ML) and Deep Learning (DL), have shown growth in the market (Berawi, 2023; Shafik et al., 2020a). Proper resource utilization and allocation using existing technologies is prevalent, for example using electric motorcycles to reduce environmental pollution (Suwignjo et al., 2023) and social benefit-cost assessments (Sarkar, Sheth, and Ranganath, 2023). Future Unmanned Aerial Vehicle (UAV) projection plans boost UAV-networked communication productivity. Precise time logs in UAV maintenance contribute to accurate data collection, enabling the forecasting of data transmissions for ground nodes in new city development projects (Berawi, 2022; Shafik et al., 2020b), despite the fact that monitoring UAV flight, for example, velocities that reduce the multiple data packet losses that are after

The collection of onsite, up-to-date information in UAV-assisted IoT networks is a network challenge. Addressing it supports safer storage, UAV data backups, and quick access to the collected data files. This is often done by transferring the UAV-collected data to collection points in the sensor network that collect, manipulate, retrieve, and update packets within a specified time frame, while remaining aware of the residual energy (Shafik et al., 2020c). Figure 1 presents different types of drones from UAVs through SD, given that

Figure 1 Drone types (Hassanalian, 2016)

For energy efficiency, the machine learning model addresses the energy problem by navigating a group of UAVs to collect data from low-level sensors energy-efficiently and cooperatively, while charging their batteries at multiple randomly deployed charging stations (Shafik et al., 2020d).

The proposed model, termed j-PPO+ConvNTM, combines proximal policy optimization (PPO) with a convolutional neural Turing machine (ConvNTM). It contains a novel spatiotemporal module to better model long-sequence spatiotemporal data and a deep reinforcement learning model called "j-PPO", which can make continuous (for instance, route planning) and discrete (that is, either go for charging or collect data) action decisions simultaneously for all UAVs (Liu, Piao, and Tang, 2020).

In human target search and detection, several optimal procedures are used to search for and locate the target within the specified search area boundaries. Subsequently, upon recognizing the target, the UAV can either hold its position so that it can continue to provide audio-visual feeds of the target, return to its base station once the target coordinates have been estimated using GPS components, or convey the GPS position to the base station (Shafik et al., 2020e).

The Markov decision process (MDP) is formulated through the design of the state, action space, and reward functions. A deep learning approach, specifically reinforcement learning, is investigated to obtain the optimal joint path planning and power allocation strategies (Glock and Meyer, 2022). An energy-constrained UAV was deployed to collect information from each sensor network as it hovered over these sensor networks. The flight trajectory of the UAV was optimized to minimize the sensor networks' age of information while maintaining the packet drop rate. Furthermore, the authors proposed and developed a learning algorithm to find an optimal policy.
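As a rough illustration of the age-of-information objective mentioned above, the following minimal sketch tracks the age of each sensor's data over discrete time slots. The slot-based convention (the age resets to 1 when a sensor is visited and otherwise grows by 1), the function names, and the visiting schedules are assumptions made for illustration; they are not taken from the cited works.

```python
def step_age(age, collected):
    """Advance one sensor's age of information by one time slot.

    Assumed convention: reset to 1 if the UAV collected a fresh packet
    in this slot, otherwise the age grows by one slot.
    """
    return 1 if collected else age + 1


def average_age(schedule, num_sensors):
    """Average age over all sensors and slots for a visiting schedule.

    `schedule` lists, slot by slot, the index of the sensor the
    (hypothetical) UAV collects from.
    """
    ages = [1] * num_sensors
    total = 0
    for visited in schedule:
        ages = [step_age(a, i == visited) for i, a in enumerate(ages)]
        total += sum(ages)
    return total / (len(schedule) * num_sensors)


# Alternating between two sensors versus always visiting sensor 0.
round_robin = average_age([0, 1, 0, 1], num_sensors=2)
single_sensor = average_age([0, 0, 0, 0], num_sensors=2)
```

Under these assumptions, the alternating schedule yields a lower average age than repeatedly visiting a single sensor, which is the kind of trade-off a trajectory optimizer would exploit.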

This paper primarily presents the following contributions:

- A comprehensive literature review of state-of-the-art models related to Unmanned Aerial Vehicles (UAVs) and associated issues.

- Studies on the technical loopholes in UAV communication systems include autonomous navigation, task offloading, energy efficiency, human target search, detection, power allocation in UAV networks, resource allocation, and real-time trajectory planning.

- The survey also consolidates UAV challenges identified in existing models and highlights loopholes. This will assist various stakeholders in selecting models that align with public demand.

- The study further identifies technical trends as UAV standard technologies attain complex tasking and maneuver decisions, minimum throughput maximization, continuous communication service, fairness, a segmentation method, wireless networking, and detection.

The rest of this paper is structured as follows. Section 2 presents technical loopholes in state-of-the-art UAVs with simplified tables of illustrations. Section 3 covers the technical trends of UAVs. Section 4 presents the conclusion and future work.

Technical Loophole UAV Models

This section focuses on the most recently identified challenges that UAVs face. The challenges are considered in relation to each proposed model and its technical outcomes. The main interest is placed on the loopholes of the proposed models, in search of better technical recommendations, so that researchers who intend to develop models, architectures, and frameworks can align them with the most pressing technical network challenges.

In multi-UAV navigation, a fully distributed controller maximizes the temporal average coverage score achieved by all UAVs in the mission. It also maximizes the geographical fairness of all considered points of interest and minimizes energy consumption while keeping the UAVs within the designated area boundaries. The state, observation, action space, and reward are designed explicitly. Lastly, each UAV is modeled using deep neural networks.

Extensive simulations were conducted to find an appropriate set of hyperparameters, including the experience replay buffer size, the number of neural units in the two fully connected hidden layers of the actor, the critic, and their target networks, and the discount factor for weighting future rewards (Shafik and Tufail, 2023). Real-time trajectory planning of the UAV for aerial data collection and wireless power transfer (WPT) aims to minimize buffer overflow at the ground sensors and unsuccessful transmissions due to lossy airborne channels. It considers the system states of battery levels and buffer lengths of the ground sensors, channel conditions, and the position of the UAV (Liu et al., 2020). Deep Q-learning-based resource management is proposed to minimize the overall data packet loss of the IoT nodes by optimally selecting the IoT node for data collection and power transfer and the associated modulation scheme of that node; see (Li et al., 2020a; Shafik et al., 2020c; Wu et al., 2020).

2.1. UAV autonomous navigation

The assumption was that a prior policy (a nonexpert helper), possibly of poor performance, is available to the learning agent. The prior policy plays the role of guiding the agent in exploring the state space by reshaping the behavior policy used for environmental interaction. It also assists the agent in achieving goals by setting dynamic learning objectives of increasing difficulty. To evaluate the proposed technique, the authors constructed a simulator for UAV navigation in large-scale complex environments and compared the algorithm with several baselines. The proposed model significantly outperforms the existing algorithms that handle sparse rewards and produces impressive navigation policies similar to those learned in an environment with dense rewards (Wang et al., 2020).

2.2. Energy efficiency

A new deep model referred to as j-PPO+ConvNTM comprises a novel ConvNTM to better model long-sequence spatiotemporal data and a deep reinforcement learning model referred to as "j-PPO," which can make continuous (that is, route planning) and discrete (that is, either collecting data or going for charging) action decisions simultaneously for all UAVs; see (Liu, Piao, and Tang, 2020).
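To make the idea of simultaneous continuous and discrete decisions concrete, the sketch below samples one joint action for a single UAV: a continuous heading drawn from a Gaussian and a discrete task drawn from a softmax. This is only an illustration of a hybrid action head, not the j-PPO model itself; the task names, the Gaussian parameterization, and the function name are assumptions.

```python
import math
import random

# Hypothetical discrete choices available to each UAV.
TASKS = ("collect", "charge")


def sample_hybrid_action(mean_heading, std_heading, task_logits, rng):
    """Sample a (heading, task) pair for one UAV.

    The continuous part is a Gaussian over the heading in radians;
    the discrete part is a softmax over TASKS.
    """
    heading = rng.gauss(mean_heading, std_heading)
    # Numerically stable softmax weights for the discrete choice.
    peak = max(task_logits)
    weights = [math.exp(logit - peak) for logit in task_logits]
    task = rng.choices(TASKS, weights=weights, k=1)[0]
    return heading, task


rng = random.Random(0)
heading, task = sample_hybrid_action(0.0, 0.5, [1.0, 0.2], rng)
```

In a policy-gradient method, the log-probabilities of both parts would typically be summed so that one update covers the joint decision; that step is omitted here.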

2.3. Human Target Search and Detection

The application involves developing an autonomous surveillance system using a UAV to identify a given target and objects of interest in the terrain over which it flies. Such a system may be employed in rescue operations, particularly in remote areas where physical access is challenging. The UAV developed in this work is capable of object recognition. A mounted camera is used to provide visual feedback and is connected to an onboard processing unit, which runs image recognition software to identify the target in real time.

2.4. Power allocation in UAV networks

The machine learning-based approach is expected to obtain the maximum long-term network utility while meeting the user equipment's quality of service requirements. The Markov decision process (MDP) is formulated with the design of the state, action space, and reward function. A deep reinforcement learning approach is investigated to obtain the joint optimal policy for trajectory design and power allocation. Because of the continuous action space of the MDP model, a deep deterministic policy gradient (DDPG) approach is presented. The proposed learning algorithm can significantly reduce the age of information and packet drop rates compared with the baseline greedy algorithms.
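Deep deterministic policy gradient methods commonly keep slowly moving target networks to stabilize learning over continuous actions. The sketch below shows that soft update on flat parameter lists. The mixing rate `tau` and the list representation are assumptions for illustration; the surveyed work does not state its update rule or hyperparameters here.

```python
def soft_update(target_params, online_params, tau=0.005):
    """Move each target parameter a small step toward the online one.

    Implements target <- (1 - tau) * target + tau * online,
    element by element, and returns the new target parameters.
    """
    return [
        (1.0 - tau) * t + tau * o
        for t, o in zip(target_params, online_params)
    ]


# Hypothetical two-parameter networks; repeated updates converge.
target = [0.0, 1.0]
online = [1.0, 1.0]
for _ in range(1000):
    target = soft_update(target, online, tau=0.01)
```

A small `tau` makes the target track the online network slowly, which is the usual motivation for this update.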

2.5. Multi-UAV navigation

The objectives are to maximize the temporal average coverage score achieved by all UAVs during a task, maximize the geographical fairness of all considered points of interest, and minimize the overall energy consumption, while keeping the UAVs connected and not flying out of the area border. The state, observation, action space, and reward were designed meticulously, and each UAV was modeled using a deep neural network; for details, refer to (Liu et al., 2020).
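One way such competing objectives could be folded into a single reward is sketched below. It assumes Jain's fairness index as the fairness measure and a linear energy penalty with a hypothetical weight; the text here does not specify the reward form, so this is an illustrative stand-in rather than the reward of the cited work.

```python
def jain_fairness(values):
    """Jain's fairness index: 1.0 when all values are equal,
    1/n when a single entry receives everything."""
    total = sum(values)
    squares = sum(v * v for v in values)
    if squares == 0:
        return 0.0
    return total * total / (len(values) * squares)


def coverage_reward(coverage_scores, energy_used, energy_weight=0.1):
    """Fairness-weighted mean coverage minus an energy penalty.

    `coverage_scores` holds one score per point of interest;
    `energy_weight` is an assumed trade-off coefficient.
    """
    mean_coverage = sum(coverage_scores) / len(coverage_scores)
    fairness = jain_fairness(coverage_scores)
    return fairness * mean_coverage - energy_weight * energy_used


even = coverage_reward([1.0, 1.0], energy_used=0.0)
uneven = coverage_reward([2.0, 0.0], energy_used=0.0)
```

With the same total coverage, the evenly covered case scores higher than the uneven one, which is the behavior the fairness objective is meant to encourage.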

2.6. Real-time trajectory planning

The approach considers the system states of battery levels and buffer lengths of the ground sensors, channel conditions, and the location of the UAV. The aerial trajectory planning optimization is formulated as a partially observable MDP, where the UAV has only a partial observation of the system states (Li et al., 2020b). In practice, the UAV-enabled sensor network comprises a large number of system states and actions in the model, while up-to-date information on the system states is not available at the UAV.

2.7. Resource management

The resource management problem arises in practical scenarios where the UAV has no a priori information on the battery levels and data queue lengths of the nodes. The resource management of UAV-assisted WPT and data collection is formulated as a Markov decision process, where the states consist of the battery levels and data queue lengths of the IoT nodes, channel qualities, and positions of the UAV. A Q-learning-based resource management scheme is designed to decrease the overall data packet loss of the IoT nodes by deciding the node for data collection and power transfer and the associated modulation scheme of the IoT node (Li et al., 2020c).
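The core of a Q-learning scheme is its temporal-difference update. The sketch below shows the tabular form on a toy encoding where a state is a (battery level, queue length) tuple and an action is the index of the node to serve. The encoding, learning rate, discount factor, and reward values are assumptions for illustration; the cited work uses a deep variant whose details are not given here.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, actions,
             alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update and return the new value.

    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    """
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


# Hypothetical states: (battery level, queue length) of the served node.
q_table = defaultdict(float)
node_actions = [0, 1]
first = q_update(q_table, (2, 5), 0, 1.0, (1, 4), node_actions)
```

Here the reward could, for example, be the negative number of packets dropped in the slot, so that maximizing return reduces packet loss.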

2.8. Partially explainable big data

Using telecommunications and social media data, the study aimed to analyze the impact of UAV power and communication constraints on the practice and quality of service in a specific area. The objective was to determine the level of excellence and competition within the system of dimensions being examined. The proposed greener UAVs (Guo, 2020), with the reported energy savings, address both quantitative quality-of-service and qualitative quality-of-experience issues (Li et al., 2020c).

2.9. UAV pursuer and evader problem

A Takagi-Sugeno fuzzy model is applied as a stable UAV control technique. To deal with the confrontation problem between UAVs, the problem is formulated as a pursuer-evader problem, and a reinforcement learning-based machine learning technique is leveraged to solve it (Shafik, 2023). The proposed deep Q-network is based on traditional Q-learning yet is able to address some of its deficiencies. The deep Q-network has three critical improvements: using neural networks to approximate the Q-function, the design of dual networks, and experience replay (Chen et al., 2020).
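Of the three improvements listed, experience replay is the simplest to show in isolation. The sketch below is a minimal fixed-capacity buffer that stores transitions and samples them uniformly at random; the class name, capacity, and transition layout are assumptions for illustration and do not reproduce the implementation of the cited work.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state, done)."""

    def __init__(self, capacity, seed=None):
        # A bounded deque silently drops the oldest transition when full.
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks the temporal
        # correlation between consecutive transitions.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


buffer = ReplayBuffer(capacity=3, seed=0)
for step in range(5):
    buffer.push(step, 0, 0.0, step + 1, False)
batch = buffer.sample(2)
```

In a full deep Q-network, each sampled batch would be used to compute targets from the second (target) network before updating the online network.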

2.10. Automatic recognition & secure communications

To achieve high accuracy, four deep neural network models trained with different parameters for fine-tuning and transfer learning were evaluated. Data augmentation was used during network training to avoid overfitting. The proposed methodology uses the SLIC method to segment the plant leaves in the top-view images obtained during the flight (Tetila et al., 2020). The authors tested their data set from real flight inspections in an end-to-end computer vision approach (Shafik, 2024).

2.11. Path planning and trajectory design

The UAV is employed to track users that move along specific paths, and the authors propose a proximal policy optimization-based algorithm to maximize the instantaneous sum rate. The UAV is modeled as a deep reinforcement learning agent that learns how to move by interacting with the environment (Li et al., 2020d). When the UAV serves users on unknown paths, for example in emergencies, a random training proximal policy optimization algorithm is proposed, which can generalize the pre-trained model to new tasks to achieve fast deployment.

2.12. Energy-Efficiency

The initial tendency is to see the energy efficiency problem for UAVs as a secondary obstacle to optimizing performance. This is because there are different performance measures for the network, coexisting terrestrial users, and UAVs as aerial users. Leveraging tools from deep learning, the authors cast this problem as a deep Q-learning problem and present a learning-powered solution that incorporates the KPIs of interest in the design of the reward function, in order to maximize energy efficiency for aerial users while minimizing interference to terrestrial users (Ghavimi and Jantti, 2020; Shafik and Tufail, 2023). The results give further insight into the benefits of leveraging an intelligent energy-efficient scheme: a significant increase in the energy efficiency of aerial users relative to the increase in their spectral efficiency, together with a considerable reduction in the interference incurred by terrestrial users.

2.13. Multi-UAV target-finding

The implementation decomposes the problem into two separate stages of planning and control, with each stage modeled as a partially observable Markov decision process (POMDP). Global decentralized planning employs a modern online POMDP solver, whereas a recent DRL algorithm is used to produce a policy for control. The framework is capable of target-finding inside a simulated indoor test environment in the presence of unknown obstacles and, when extended to real-world operations, could enable UAVs to be applied in an increasing number of applications (Walker et al., 2020).

2.14. Real-time data processing

A mobile machine such as an autonomous guided vehicle can interfere in this scenario because the vehicle also needs service from the edge node; as a result, the quality of service performance decreases, as detailed in (Shafik et al., 2020d). Therefore, the study deploys a UAV as a positioning node to provide service to the edge network by optimizing the trajectory of the UAV toward where the edge network requests tasks, using deep Q-network learning (Sakir et al., 2020). Machine learning, notably the deep Q-network algorithm, can increase the number of machines that can be served. As a result, real-time data processing can be maintained even when an interruption happens at the edge node (Shafik et al., 2020a).

2.15. UAV navigation

Given these challenges and limitations, the study combines advanced DRL with UAV navigation through massive multiple-input-multiple-output (MIMO) technology. Specifically, a deep Q-network is carefully designed to optimize UAV navigation by selecting the optimal policy, and a learning mechanism is proposed for training the deep Q-network. The deep Q-network is trained so that the agent can make decisions based on the received signal strengths for navigating the UAVs with the aid of Q-learning (Zhang et al., 2020).
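Once a Q-network maps the received signal strengths to one value per candidate move, the agent still needs a rule for choosing a move. A common choice during training is epsilon-greedy selection, sketched below. The exploration rule, the example Q-values, and the function name are assumptions; the surveyed work does not say which selection rule it uses.

```python
import random


def epsilon_greedy(q_values, epsilon, rng):
    """Pick a random action with probability epsilon, else the best one.

    `q_values` holds one estimated value per candidate move, for
    example as produced by a Q-network from signal-strength inputs.
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


rng = random.Random(1)
# Hypothetical values for three moves; greedy choice is index 1.
greedy_move = epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.0, rng=rng)
explore_move = epsilon_greedy([0.1, 0.9, 0.3], epsilon=1.0, rng=rng)
```

Epsilon is usually decayed over training so that the agent explores early and exploits the learned values later.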

2.16. Anti-intelligent UAV jamming

By observing only the incomplete channel state information of the users, the study models the leader sub-game as a partially observable Markov decision process for the first attempt. The optimal jamming trajectory is obtained via the developed deep recurrent Q-networks in three-dimensional space. The authors also obtained the optimal communication trajectory via the developed deep Q-networks in two-dimensional space. The existence of the Stackelberg equilibrium and its closed-form expression in a particular case are established, and some insightful remarks are obtained (Gao et al., 2019).

2.17. Trajectory design and power allocation

Additionally, because the utility of each UAV is determined by the network environment and other UAVs' actions, the joint trajectory design and power allocation problem is modeled as a stochastic game. Due to the high computational complexity caused by the continuous action space and large state space, a multi-agent deep deterministic policy gradient (MADDPG) method is proposed to obtain the optimal policy for the joint trajectory design and power allocation problem. The proposed method can obtain higher network utility and system capacity than other optimization methods in multi-UAV networks, with lower computational complexity (Wang et al., 2019).

Technical Trending of UAV

This section presents current UAV trends, including complex tasking and maneuver decisions, minimum throughput maximization, continuous communication service, fairness, segmentation method, wireless networking and detection, target tracking, and node positioning.

3.1. Complex tasking and maneuvering decision

Imitation-augmented deep reinforcement learning learns the underlying complementary behaviors of UGVs and UAVs from a demonstration dataset collected from some simple scenarios with non-optimized strategies (Dong et al., 2022). Compared with training using traditional methods, a phased training technique known as "Basic-confrontation," which relies on the idea that people gradually learn from simple to complex, is proposed to help reduce the training time while obtaining suboptimal but efficient results (Hu et al., 2022). One-on-one short-range air combats are simulated under different target maneuver policies. The proposed maneuver decision model and training technique help the UAV achieve autonomous decision-making in air combat and establish an effective decision policy to outperform its opponents (Ben-Aissa and Ben-Letaifa, 2022).

3.2. Minimum throughput maximization

The study addresses joint UAV trajectory design and time resource allocation for minimum throughput maximization in a multiple UAV-enabled wireless powered communication network. Specifically, the UAVs act as base stations that broadcast energy signals in the downlink to charge IoT devices, whereas the IoT devices send their independent information in the uplink using the collected energy. The formulated throughput optimization problem, which involves joint optimization of three-dimensional path design and channel resource assignment under constraints on the flight speed of the UAVs and the uplink transmit power of the IoT devices, is non-convex and difficult to solve directly (Wang et al., 2022).

3.3. Persistent communication service and fairness

The work proposes energy-efficient and fair three-dimensional UAV scheduling with energy replenishment, where UAVs move around to serve users and recharge in time to replenish energy (Nørskov et al., 2022). Inspired by the success of deep reinforcement learning, a UAV control policy based on deep deterministic policy gradient (UC-DDPG) is proposed to address the combined problem of three-dimensional mobility of multiple UAVs and energy replenishment scheduling. The policy ensures energy-efficient and fair coverage of each user in a large region and maintains persistent service, using deep learning YOLO-v2 for UAV applications in real time (Boudjit and Ramzan, 2021).

3.4. Segmentation Method

UAVs are first used to obtain multiview remote sensing images of the forest. The structure from motion (SfM) algorithm is then used to reconstruct the sparse point cloud of the forest, and a patch-based multi-view stereo (MVS) algorithm is used to construct the dense point cloud (Mostafavi and Shafik, 2019). A targeted point cloud deep learning method is proposed to extract the point cloud of a single tree (Song et al., 2022). The results show that the accuracy of single-tree point cloud segmentation with deep learning methods is above 90%, and that this accuracy is higher than that of traditional two-dimensional image segmentation and point cloud segmentation (Ling et al., 2022).

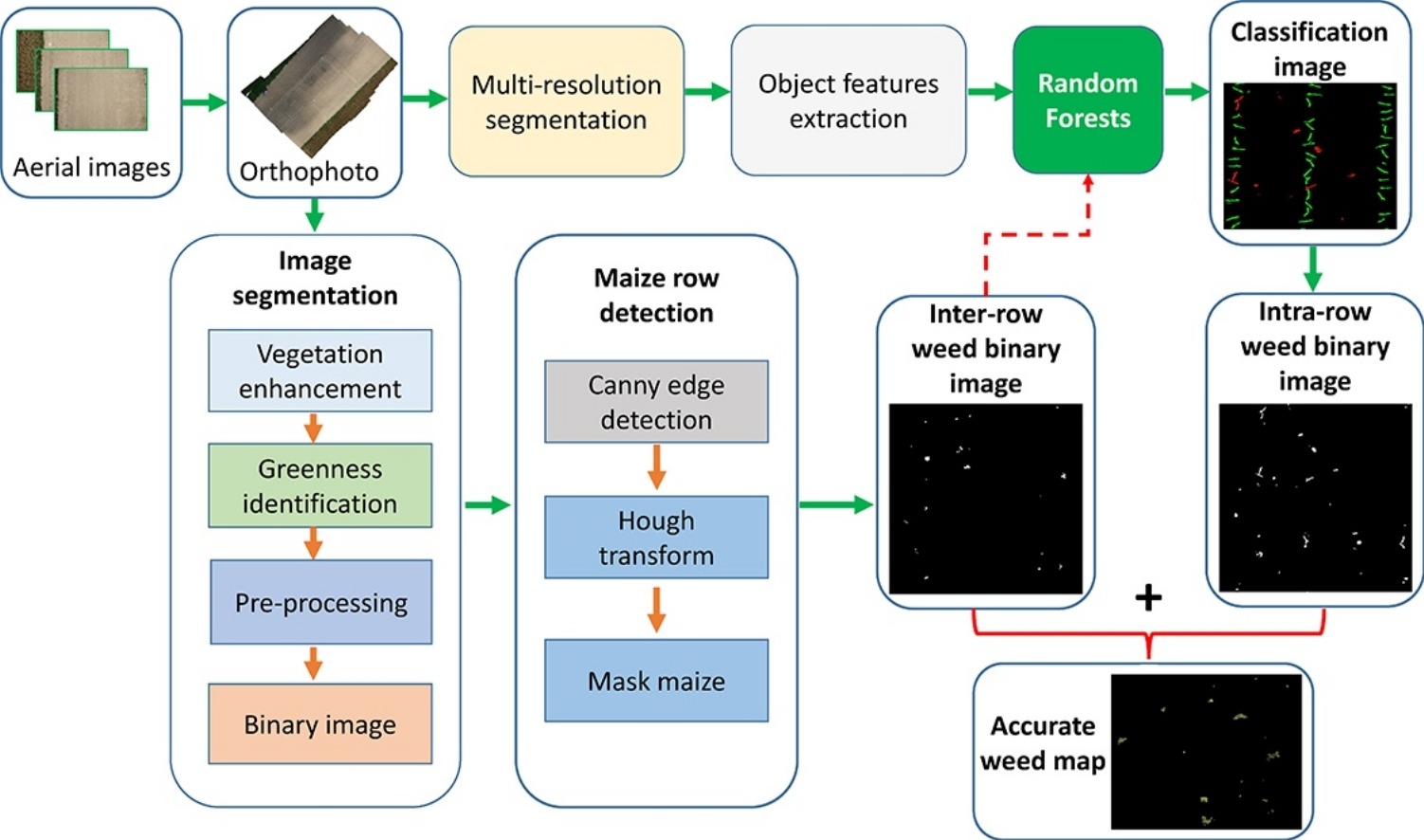

3.5. Wireless networking and detection

The performance of the proposed method was quantitatively compared with traditional approaches, and it exhibited the most effective and robust results. A correct crop row detection rate of 94.61% was obtained with an intersection-over-union (IoU) score per crop row higher than 71% (Medaiyese et al., 2022).
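The per-row score quoted above reads as an intersection-over-union (IoU) measure. Assuming that reading, and assuming each detected and reference crop row is approximated by an axis-aligned box given as (x1, y1, x2, y2), a minimal sketch of the computation is shown below; the box representation and example coordinates are illustrative, not taken from the cited study.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Each box is (x1, y1, x2, y2) with x1 < x2 and y1 < y2.
    Returns a value in [0, 1]; 0.0 when the boxes do not overlap.
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clamped at zero.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# Hypothetical detected row versus reference row.
score = iou((0, 0, 2, 2), (1, 1, 3, 3))
```

A detection would then count as correct when its score exceeds a chosen threshold.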

In conclusion, the field of UAV communication systems is evolving at a rapid pace, with advancements being made in both hardware and software. While these advancements have improved the capabilities of UAVs, they have also created technical loopholes that malicious actors could exploit. Developers need to be aware of these vulnerabilities and work towards securing UAV communication systems. The trend seems to be toward developing more autonomous and intelligent UAVs. These UAVs will be capable of performing complex tasks without human intervention, relying on advanced communication systems to operate in various environments. In addition, integrating UAVs with other technologies, such as artificial intelligence and the IoT, will further enhance their capabilities. As the use of UAVs becomes more widespread in various industries, the need for secure and reliable communication systems will only become more critical. Developers must work towards addressing current vulnerabilities and designing communication systems capable of withstanding new threats. Ultimately, the future of UAV communication systems will be shaped by the continued evolution of technology and the ability of developers to adapt to changing demands and challenges.

Ben-Aissa, S., Ben-Letaifa, A., 2022. UAV Communications with Machine Learning: Challenges, Applications and Open Issues. Arabian Journal for Science and Engineering, Volume 47(2), pp. 1559–1579

Berawi, M.A., 2022. New City Development: Creating a Better Future and Added Value. International Journal of Technology, Volume 13(2), pp. 225–228

Berawi, M.A., 2023. Smart Cities: Accelerating Sustainable Development Agenda. International Journal of Technology, Volume 14(1), pp. 1–4

Boudjit, K., Ramzan, N., 2021. Human Detection Based on Deep Learning YOLO-v2 for Real-Time UAV Applications. Journal of Experimental & Theoretical Artificial Intelligence, Volume 34(3), pp. 527–544

Chen, D., Wei, Y., Wang, L., Hong, C.S., Wang, L., Han, Z., 2020. Deep Reinforcement Learning Based Strategy for Quadrotor UAV Pursuer and Evader Problem. In: IEEE International Conference on Communications Workshops, Volume 2020, pp. 1–6

Dong, X., He, M., Yang, X., Miao, X., 2022. Efficient Low-Complexity Turbo-Hadamard Code in UAV Anti-Jamming Communications. Electronics, Volume 11(7), p. 1088

Gao, N., Qin, Z., Jing, X., Ni, Q., Jin, S., 2019. Anti-Intelligent UAV Jamming Strategy via Deep Q-Networks. IEEE Transactions on Communications, Volume 68(1), pp. 569–581

Ghavimi, F., Jantti, R., 2020. Energy-Efficient UAV Communications with Interference Management: Deep Learning Framework. In: 2020 IEEE Wireless Communications and Networking Conference Workshops, pp. 1–6

Glock, K., Meyer, A., 2022. Spatial Coverage in Routing and Path Planning Problems. European Journal of Operational Research, Volume 305(1), pp. 1–20

Guo, W., 2020. Partially Explainable Big Data Driven Deep Reinforcement Learning for Green 5G UAV. In: IEEE International Conference on Communications, pp. 1–7

Hassanalian, M., 2016. Wing Shape Design and Kinematic Optimization of Bio-Inspired Nano Air Vehicles for Hovering and Forward Flight Purposes. Doctoral Dissertation, New Mexico State University, Mexico

Hu, J., Wang, L., Hu, T., Guo, C., Wang, Y., 2022. Autonomous Maneuver Decision Making of Dual-UAV Cooperative Air Combat Based on Deep Reinforcement Learning. Electronics, Volume 11(3), p. 467

Khan, R.U., Khan, K., Albattah, W., Qamar, A.M., 2021. Image-Based Detection of Plant Diseases: From Classical Machine Learning to Deep Learning Journey. Wireless Communications and Mobile Computing, Volume 2021, pp. 1–13

Li, K., Emami, Y., Ni, W., Tovar, E., Han, Z., 2020a. Onboard Deep Deterministic Policy Gradients for Online Flight Resource Allocation of UAVs. IEEE Networking Letters, Volume 2(3), pp. 106–110

Li, K., Ni, W., Tovar, E., Guizani, M., 2020b. Deep Reinforcement Learning for Real-Time Trajectory Planning in UAV Networks. In: 2020 International Wireless Communications and Mobile Computing, pp. 958–963

Li, K., Ni, W., Tovar, E., Jamalipour, A., 2020c. Deep Q-Learning based Resource Management in UAV-assisted Wireless Powered IoT Networks. In: IEEE International Conference on Communications, pp. 1–6

Li, X., Wang, Q., Liu, J., Zhang, W., 2020d. Trajectory Design and Generalization for UAV Enabled Networks: A Deep Reinforcement Learning Approach. In: IEEE Wireless Communications and Networking Conference, pp. 1–6

Ling, M., Cheng, Q., Peng, J., Zhao, C., Jiang, L., 2022. Image Semantic Segmentation Method Based on Deep Learning in UAV Aerial Remote Sensing Image. Mathematical Problems in Engineering, Volume 2022, p. 5983045

Liu, C.H., Ma, X., Gao, X., Tang, J., 2020. Distributed Energy-Efficient Multi-UAV Navigation for Long-Term Communication Coverage by Deep Reinforcement Learning. IEEE Transactions on Mobile Computing, Volume 19(6), pp. 1274–1285

Liu, C.H., Piao, C., Tang, J., 2020. Energy-Efficient UAV Crowdsensing with Multiple Charging Stations by Deep Learning. In: IEEE Conference on Computer Communications, Volume 2020, pp. 199–208

Medaiyese, O.O., Ezuma, M., Lauf, A.P., Guvenc, I., 2022. Wavelet Transform Analytics for RF-Based UAV Detection and Identification System Using Machine Learning. Pervasive and Mobile Computing, Volume 82, p. 101569

Mostafavi, S., Shafik, W., 2019. Fog Computing Architectures, Privacy and Security Solutions. Journal of Communications Technology, Electronics and Computer Science, Volume 24, pp. 1–14

Nørskov, S., Damholdt, M.F., Ulhøi, J.P., Jensen, M.B., Mathiasen, M.K., Ess, C.M., Seibt, J., 2022. Employers' and Applicants' Fairness Perceptions in Job Interviews: Using A Teleoperated Robot as Fair Proxy. Technological Forecasting and Social Change, Volume 179, p. 121641

Sakir, R.K.A., Ramli, M.R., Lee, J., Kim, D., 2020. UAV-assisted Real-time Data Processing using Deep Q-Network for Industrial Internet of Things. In: International Conference on Artificial Intelligence in Information and Communication, pp. 208–211

Sarkar, D., Sheth, A., Ranganath, N., 2023. Social Benefit-Cost Analysis for Electric BRTS in Ahmedabad. International Journal of Technology, Volume 14(1), pp. 54–64

Shafik, W., Tufail, A., 2023. Energy Optimization Analysis on Internet of Things. Advanced Technology for Smart Environment and Energy, In: IOP Conference Series: Earth and Environmental Science, pp. 1–16

Shafik, W., 2024. Blockchain-Based Internet of Things (B-IoT): Challenges, Solutions, Opportunities, Open Research Questions, and Future Trends. Blockchain-based Internet of Things, pp. 35–58

Shafik, W., 2023. Cyber Security Perspectives in Public Spaces: Drone Case Study. In: Handbook of Research on Cybersecurity Risk in Contemporary Business Systems, pp. 79–97

Shafik, W., Matinkhah, M., Etemadinejad, P., Sanda, M.N., 2020a. Reinforcement Learning Rebirth, Techniques, Challenges, and Resolutions. JOIV: International Journal on Informatics Visualization, Volume 4(3), pp. 127–135

Shafik, W., Matinkhah, M., Sanda, M.N., 2020b. Network Resource Management Drives Machine Learning: A Survey and Future Research Direction. Journal of Communications Technology, Electronics and Computer Science, Volume 2020, pp. 1–15

Shafik, W., Matinkhah, S.M., Ghasemazadeh, M., 2019. Fog-Mobile Edge Performance Evaluation and Analysis on Internet of Things. Journal of Advance Research in Mobile Computing, Volume 1(3), pp. 1–17

Shafik, W., Matinkhah, S.M., Afolabi, S.S., Sanda, M.N., 2020c. A 3-Dimensional Fast Machine Learning Algorithm for Mobile Unmanned Aerial Vehicle Base Stations. International Journal of Advances in Applied Sciences, Volume 10(1), pp. 28–38

Shafik, W., Matinkhah, S.M., Ghasemzadeh, M., 2020d. Theoretical Understanding of Deep Learning in UAV Biomedical Engineering Technologies Analysis. SN Computer Science, Volume 1(6), p. 307

Shafik, W., Matinkhah, S.M., Asadi, M., Ahmadi, Z., Hadiyan, Z., 2020e. A Study on Internet of Things Performance Evaluation. Journal of Communications Technology, Electronics and Computer Science, Volume 28, pp. 1–19

Song, H., Kim, M., Park, D., Shin, Y., Lee, J.G., 2022. Learning From Noisy Labels With Deep Neural Networks: A Survey. IEEE Transactions on Neural Networks and Learning Systems, Volume 34(11), pp. 8135–8153

Suwignjo, P., Yuniarto, M.N., Nugraha, Y.U., Desanti, A.F., Sidharta, I., Wiratno, S.E., Yuwono, T., 2023. Benefits of Electric Motorcycle in Improving Personal Sustainable Economy: A View from Indonesia Online Ride-Hailing Rider. International Journal of Technology, Volume 14(1), pp. 38–53

Tetila, E.C., Machado, B.B., Menezes, G.K., Oliveira, A.D.S., Alvarez, M., Amorim, W.P., Belete, N.A.D.S., Silva, G.G.D., Pistori, H., 2020. Automatic Recognition of Soybean Leaf Diseases Using UAV Images and Deep Convolutional Neural Networks. IEEE Geoscience and Remote Sensing Letters, Volume 17(5), pp. 903–907

Walker, O., Vanegas, F., Gonzalez, F., Koenig, S., 2020. Multi-UAV Target-Finding in Simulated Indoor Environments using Deep Reinforcement Learning. In: 2020 IEEE Aerospace Conference, pp. 1–9

Wang, C., Wang, J., Wang, J., Zhang, X., 2020. Deep-Reinforcement-Learning-Based Autonomous UAV Navigation with Sparse Rewards. IEEE Internet of Things Journal, Volume 7(7), pp. 6180–6190

Wang, Q., Zhang, W., Liu, Y., Liu, Y., 2019. Multi-UAV Dynamic Wireless Networking with Deep Reinforcement Learning. IEEE Communications Letters, Volume 23(12), pp. 2243–2246

Wang, Y., Wang, J., Wong, V.W., You, X., 2022. Effective Throughput Maximization of NOMA with Practical Modulations. IEEE Journal on Selected Areas in Communications, Volume 40(4), pp. 1084–1100

Wu, F., Zhang, H., Wu, J., Song, L., 2020. Cellular UAV-To-Device Communications: Trajectory Design and Mode Selection by Multi-Agent Deep Reinforcement Learning. IEEE Transactions on Communications, Volume 68(7), pp. 4175–4189

Zhang, Y., Zhuang, Z., Gao, F., Wang, J., Han, Z., 2020. Multi-Agent Deep Reinforcement Learning for Secure UAV Communications. In: IEEE Wireless Communications and Networking Conference, Volume 2020, pp. 1–5

Zhou, S., Li, B., Ding, C., Lu, L., Ding, C., 2020. An Efficient Deep Reinforcement Learning Framework for UAVs. In: 21st International Symposium on Quality Electronic Design, pp. 323–328